The cloud revolution has reshaped how you design, deploy, and scale applications. Service models like IaaS and PaaS let you focus on writing great code while leaving infrastructure headaches behind. But as you start building real-time applications, from IoT devices and autonomous vehicles to AR/VR, smart cities, and industrial automation, you quickly notice the limits of traditional cloud systems. Latency, bandwidth bottlenecks, and unreliable connectivity can slow you down and degrade performance.

That is where Edge-PaaS comes in. It brings the ease of PaaS directly to the edge and lets you run applications closer to the data they process and the users they serve. With Edge-PaaS, you gain speed, responsiveness, and real-time control without losing the simplicity of cloud-native development. In this article, you will discover what Edge-PaaS is, why it matters, the challenges you will face, real-world use cases, its benefits, and how it is shaping the future of cloud computing.

1. Background: From Cloud to Edge to Edge-PaaS

- You start with the cloud, where large data centers handle most of the computing and storage for your applications. As technology grows, you move closer to the edge, where processing happens near users and devices. This shift reduces latency and improves real-time performance. With Edge-PaaS, you combine the flexibility of the cloud with the speed of edge computing. It helps you deploy and manage apps closer to where data is created, giving faster responses and a better user experience overall.

1.1 Cloud, IaaS, PaaS, SaaS: a quick recap

a. IaaS

- With IaaS, you access virtual servers, storage, and networks through the cloud, which lets you build and control your own digital environment. You handle the operating system, middleware, and runtime, giving you strong control over how things work. Unlike PaaS or SaaS, this model gives you more flexibility but also more responsibility. Providers such as AWS, Google Cloud, and IBM supply these core resources. It becomes easy to scale your setup when your project grows. Startups often choose IaaS because it saves hardware costs while offering full freedom to manage and customize everything.

b. PaaS

- With PaaS, you focus only on building and running your applications while the platform manages everything behind the scenes. It takes care of runtime, middleware, and scaling, so you do not need to worry about infrastructure setup. You simply deploy your code, and the platform does the rest. Services like Azure App Service and Google App Engine help you develop faster with built-in tools. This model saves you time and reduces complexity. However, you might have less control over the environment, which can limit deep customization when compared to IaaS solutions.

c. SaaS

- With SaaS, you use fully managed applications through the cloud without worrying about setup or maintenance. Everything from updates to security is handled by the provider. You simply log in and start working. Tools like Gmail, Microsoft 365, and Salesforce are common examples that you might already use every day. This model is very user-friendly because you do not manage servers or software installations. It helps you save time and focus on tasks instead of technical work. While developers may find it less customizable, it is perfect for end users who need ready-to-use solutions.

- Traditional PaaS works best with centralized cloud data centers that manage everything from one place. It expects a stable internet connection with high bandwidth and reliable speed for smooth performance. You depend on this steady connection to run your applications efficiently. The system also counts on predictable latency, meaning responses reach users quickly and consistently. All infrastructure tasks are handled centrally, so you do not control much of the setup. This model performs well when networks are strong and connections remain stable.

1.2 Why edge computing?

- You use edge computing to bring data processing and storage closer to where the data is created. It helps you get faster responses and reduces the delay caused by sending information to distant servers. By keeping tasks near the edge, your apps work more smoothly, especially in real-time systems. This approach also saves bandwidth and improves privacy since data stays local. You can handle tasks even with weak connections, making edge computing powerful for modern devices and smart applications.

a. Lower latency

- With edge computing, your data does not have to travel far to be processed. This reduces the round-trip time and makes responses almost instant. You notice smoother performance in real-time applications like online games, video calls, or smart devices. Faster response times mean better user experience and fewer delays. Even in critical systems like autonomous vehicles or health monitoring, low latency ensures actions happen quickly. By processing closer to the edge, you avoid waiting for distant cloud servers.

b. Bandwidth savings

- You save bandwidth by processing, filtering, or aggregating data at the edge before sending it to the cloud. This reduces the amount of information transmitted over the network. Your network becomes less congested, and communication is faster. Costs decrease because less internet capacity is used. It also improves efficiency for applications that generate large amounts of data, like IoT devices or video streams. By handling preliminary tasks locally, you only send what truly matters to central servers.
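- The aggregation step described above can be sketched in a few lines of Python. This is a minimal illustration, not a reference implementation: the function name `summarize_window` and the sample temperature values are assumptions made for the example. An edge node would call something like this once per time window and upload only the resulting summary.

```python
from statistics import mean


def summarize_window(readings):
    """Reduce one window of raw readings to a single small summary record."""
    if not readings:
        raise ValueError("window is empty")
    return {
        "count": len(readings),
        "min": min(readings),
        "max": max(readings),
        "mean": mean(readings),
    }


# Hypothetical temperature samples collected locally during one window.
window = [21.0, 21.5, 22.0, 21.5]
summary = summarize_window(window)
print(summary)  # one record goes to the cloud instead of four raw samples
```

- The saving grows with the window size: a window of thousands of samples still produces one record of the same size.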

c. Resilience and autonomy

- Edge systems let your applications continue working even if the connection to the central cloud is lost or weak. You do not have to worry about interruptions when networks fail. Critical tasks can run independently at the edge, keeping operations stable. This makes your system more reliable and reduces downtime. You can manage devices and processes locally, which is crucial in remote areas or during emergencies. The autonomy of edge computing ensures that your services remain available no matter the network conditions.

d. Regulatory / data sovereignty

- Some data must remain within a specific location due to laws or privacy rules. Edge computing helps you comply with regulations by keeping data local. You do not need to send sensitive information to faraway cloud servers. This is important for industries like healthcare, finance, or government services. Local processing protects user privacy and reduces legal risks. You can still benefit from modern computing while respecting data sovereignty rules. Edge systems make it easier to balance performance and compliance.

e. Scalability in distribution

- By spreading computation across many edge nodes, you avoid bottlenecks and single points of failure. Your applications can handle more users and devices without slowing down. You gain flexibility because adding more edge nodes increases capacity easily. The system becomes more robust, and failures in one node do not affect the entire network. Distributed computing at the edge ensures smooth performance even as demand grows. You can scale your infrastructure efficiently while maintaining reliability and speed.

- Edge computing comes in many forms, from simple device-level processing to fog computing, which acts as a bridge between the edge and the cloud. You also see Multi-access Edge Computing (MEC, formerly Mobile Edge Computing) in telecom networks, which brings computing closer to users. Managing raw edge computing is challenging: devices are heterogeneous, tasks must be coordinated across many nodes, and deployments and updates must reach every site. You must also consider resource limits and ensure smooth integration with central cloud systems. Despite this complexity, edge computing improves performance and responsiveness.

1.3 Enter Edge-PaaS

- Edge-PaaS brings the principles of PaaS to edge computing, letting you write, deploy, and manage applications without worrying about the underlying infrastructure. The platform handles orchestration, device heterogeneity, and workload migration automatically. You continue programming in a cloud-native style, while Edge-PaaS distributes tasks to edge nodes when needed for faster responses. It solves the “last mile” challenge by providing low-latency, real-time services that stay developer-friendly. You can think of it as a hybrid PaaS that spans both cloud and edge resources, combining centralized computing power with distributed processing close to users for efficiency.

2. Why Edge-PaaS Matters: Drivers & Trends

- Edge-PaaS matters because it helps you build and run applications faster and closer to where data is created. Growing demand for real-time services pushes computing to the edge, making low-latency performance essential. You also face increasing data volumes and bandwidth challenges, which Edge-PaaS can handle efficiently. Emerging IoT devices, smart applications, and telecom innovations drive its adoption. Developers want the simplicity of cloud-native programming without managing complex edge infrastructure. These trends make Edge-PaaS a key solution for combining speed, scalability, and developer-friendliness in modern distributed systems.

2.1 Real-time, latency-sensitive workloads

- Some applications like autonomous vehicles, industrial robots, AR/VR, and real-time games need decisions in milliseconds or microseconds. You cannot rely on traditional cloud servers because unpredictable network latency can cause delays. With Edge-PaaS, critical logic runs close to the data source, while still syncing with the cloud when needed. This makes responses faster, safer, and more reliable. You can build smart factories, remote surgeries, or smart grids that work in real time. Edge-PaaS ensures your applications feel instantaneous and perform well even under pressure.

2.2 Proliferation of IoT and data deluge

- The number of IoT devices is growing rapidly, producing massive data volumes, much of it temporary. Sending all raw data to the cloud is costly, slow, and often unnecessary. You can use edge-first processing for filtering, aggregation, or anomaly detection. Edge-PaaS lets you split application logic into edge-side and cloud-side modules. This reduces network load and speeds up decision-making. You handle only meaningful data centrally while keeping devices responsive locally. Edge-PaaS makes managing IoT systems easier and more efficient.
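- One simple form of edge-side anomaly detection is a z-score check against a recent baseline. The sketch below assumes that approach; the function name `find_anomalies`, the threshold of 3 standard deviations, and the sample values are illustrative choices, and real platforms may use very different detectors.

```python
from statistics import mean, pstdev


def find_anomalies(baseline, new_readings, threshold=3.0):
    """Return the new readings that lie far outside the baseline distribution."""
    mu = mean(baseline)
    sigma = pstdev(baseline)
    if sigma == 0:
        # A perfectly flat baseline: anything different counts as anomalous.
        return [r for r in new_readings if r != mu]
    return [r for r in new_readings if abs(r - mu) / sigma > threshold]


# Hypothetical sensor values: a stable baseline, then one clear outlier.
baseline = [10.0, 10.2, 9.8, 10.1, 9.9]
incoming = [10.0, 10.3, 14.5, 9.7]
anomalies = find_anomalies(baseline, incoming)
print(anomalies)  # only these readings would be forwarded to the cloud
```

- Normal readings never leave the device, so the cloud-side module only has to handle the rare events that matter.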

2.3 Disconnected or intermittent connectivity zones

- In places like mines, offshore sites, rural areas, disaster zones, or ships, network connectivity is unreliable or expensive. Your systems must keep working even when cloud access is limited. Edge-PaaS lets applications function locally and sync with central cloud modules when possible. You maintain reliability and performance even in remote or challenging environments. By processing tasks close to the edge, your operations remain smooth, and downtime is minimized. This is crucial for mission-critical systems and services that cannot afford interruptions.
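- Working locally and syncing when possible is often built on a store-and-forward queue. The following is a minimal sketch under stated assumptions: the class name `StoreAndForward`, the `send` callback that returns True on success, and the simulated link are all hypothetical, and a real edge node would persist the buffer to disk rather than keep it in memory.

```python
from collections import deque


class StoreAndForward:
    """Buffer messages locally and deliver them in order once the uplink works."""

    def __init__(self, send, max_buffered=1000):
        self._send = send  # callable(message) -> bool, True when delivered
        self._buffer = deque(maxlen=max_buffered)  # oldest entries drop when full

    def publish(self, message):
        self._buffer.append(message)
        self.flush()

    def flush(self):
        """Send buffered messages oldest first; stop at the first failure."""
        sent = 0
        while self._buffer:
            if not self._send(self._buffer[0]):
                break
            self._buffer.popleft()
            sent += 1
        return sent

    @property
    def pending(self):
        return len(self._buffer)


# Simulated uplink (an assumption for the demo): a flag decides if sends succeed.
link = {"up": False}
delivered = []


def fake_send(message):
    if link["up"]:
        delivered.append(message)
        return True
    return False


queue = StoreAndForward(fake_send)
queue.publish("reading-1")
queue.publish("reading-2")
pending_while_offline = queue.pending

link["up"] = True
queue.publish("reading-3")  # triggers delivery of the whole backlog
```

- The bounded buffer is a deliberate trade-off: during a very long outage the oldest readings are dropped rather than exhausting the device's memory.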

2.4 Cost reduction and network efficiency

- Processing data locally helps you save on bandwidth costs and reduces strain on network backbones. You only send essential results to the cloud, not every piece of raw data. This lowers costs, improves network efficiency, and avoids congestion. Edge-PaaS handles distribution automatically, so your system runs efficiently without extra effort. You get faster responses, smarter resource use, and reduced operational expenses. Local processing combined with cloud aggregation gives the best of both worlds, keeping your applications responsive and affordable.

2.5 Regulatory & privacy constraints

- Some industries like healthcare, finance, and telecommunications require certain data to remain local due to privacy laws or compliance rules. Sending raw data to distant clouds may violate regulations. Edge-PaaS allows local processing while still supporting global aggregation when needed. You can follow regulatory and data sovereignty requirements without slowing down applications. This keeps sensitive information secure and compliant. By processing at the edge, you respect privacy rules while still benefiting from cloud resources for analytics or long-term storage.

2.6 Developer productivity and abstraction

- Edge computing is powerful but complex. You must handle heterogeneous devices, fragmented networks, deployment, monitoring, and orchestration. Edge-PaaS abstracts these challenges, letting you focus on business logic instead of low-level infrastructure. You can program as if you are on a single platform while the system manages multiple nodes automatically. This improves developer productivity and reduces errors. Your applications can scale and adapt without extra manual work. Edge-PaaS makes building distributed, low-latency systems simpler and accessible, even if the underlying architecture is complicated.

2.7 Hybrid cloud-edge synergy

- Edge-PaaS does not replace the cloud; it complements it. The cloud handles heavy analytics, AI training, and long-term storage, while edge nodes take care of real-time inference and responsive logic. You can create a seamless pipeline from edge to cloud in a single platform. Edge-PaaS integrates distributed computing with centralized resources, making your applications faster, more reliable, and easier to manage. You get the best of both worlds: local performance and global capabilities, all while keeping development simple and consistent.

3. Architectural Dimensions & Challenges

- To make Edge-PaaS work effectively, you must consider several architectural challenges and design choices. It needs to manage distributed edge nodes, coordinate workloads, and handle heterogeneous devices seamlessly. You also face issues like deployment, updates, scalability, and integration with the cloud. Finding solutions for these complexities ensures your applications remain reliable, low-latency, and easy to develop. By addressing these key dimensions, Edge-PaaS can deliver the benefits of both edge computing and cloud-native platforms, making your development experience smoother and more efficient.

3.1 Deployment & orchestration

- Deployment and orchestration in Edge-PaaS are challenging because edge nodes are spread across different locations and have varied hardware, memory, compute, network, and operating systems. You need support for containers or lightweight virtualization like Docker or microVMs to run applications consistently. The platform handles orchestration, managing scheduling, load balancing, and failover across all nodes. Workloads can move dynamically between the cloud and edge depending on network conditions or resource availability. For example, a smart traffic system can process video feeds at nearby edge nodes for real-time decisions while sending summary data to the cloud for long-term analytics. Platforms like ECHO demonstrate dynamic migration of tasks and dataflows across edge, fog, and cloud layers to ensure resilience and efficiency.
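- To make the scheduling idea concrete, here is a small sketch of resource-aware placement. It is not how ECHO or any specific platform schedules work: the `Node` and `Workload` classes, the "most free CPU wins" rule, and the node names are assumptions chosen to keep the example short.

```python
from dataclasses import dataclass


@dataclass
class Node:
    name: str
    free_cpu: float  # cores still available
    free_mem_mb: int
    healthy: bool = True


@dataclass
class Workload:
    name: str
    cpu: float
    mem_mb: int


def place(workload, nodes):
    """Pick the healthy node with the most free CPU that fits, or None."""
    candidates = [
        n for n in nodes
        if n.healthy and n.free_cpu >= workload.cpu and n.free_mem_mb >= workload.mem_mb
    ]
    if not candidates:
        return None  # caller may fall back to the cloud
    chosen = max(candidates, key=lambda n: n.free_cpu)
    chosen.free_cpu -= workload.cpu
    chosen.free_mem_mb -= workload.mem_mb
    return chosen


# Hypothetical roadside nodes for the traffic example.
nodes = [
    Node("edge-a", free_cpu=2.0, free_mem_mb=1024),
    Node("edge-b", free_cpu=4.0, free_mem_mb=2048, healthy=False),
    Node("edge-c", free_cpu=1.0, free_mem_mb=4096),
]
first = place(Workload("video-infer", cpu=1.5, mem_mb=512), nodes)
second = place(Workload("video-infer-2", cpu=1.0, mem_mb=512), nodes)
third = place(Workload("big-job", cpu=8.0, mem_mb=512), nodes)
```

- Failover follows from the same function: when a node is marked unhealthy, its workloads can be passed through `place` again and land on the remaining nodes.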

3.2 Partitioning of workloads

- Partitioning of workloads in Edge-PaaS decides which parts of an application run on the edge and which run in the cloud. You can split tasks like streaming, microservices, inference, or control loops based on rules, policies, or runtime metrics. The system needs to be context-aware, considering latency, load, and connectivity to make smart decisions. For example, in a smart factory, sensor data can be processed at nearby edge nodes for real-time alerts, while historical data is sent to the cloud for analytics and long-term planning. This ensures fast responses locally while still benefiting from cloud-scale computation and storage. Partitioning improves efficiency, reliability, and overall performance of your applications.
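- A context-aware partitioning rule can be as simple as comparing a task's latency budget with the measured round trip to the cloud. The sketch below assumes exactly that rule; the function name `choose_tier` and the numbers are illustrative, and a production policy would also weigh load, cost, and data locality.

```python
def choose_tier(latency_budget_ms, cloud_rtt_ms, cloud_reachable=True):
    """Return "edge" or "cloud" for one task under current network conditions."""
    if not cloud_reachable:
        return "edge"  # no choice while the uplink is down
    if cloud_rtt_ms > latency_budget_ms:
        return "edge"  # the round trip alone would miss the deadline
    return "cloud"  # the deadline tolerates the trip, so keep the edge free


# Hypothetical smart-factory tasks with an 80 ms measured round trip.
alert_tier = choose_tier(latency_budget_ms=20, cloud_rtt_ms=80)
analytics_tier = choose_tier(latency_budget_ms=5000, cloud_rtt_ms=80)
offline_tier = choose_tier(latency_budget_ms=5000, cloud_rtt_ms=80, cloud_reachable=False)
print(alert_tier, analytics_tier, offline_tier)
```

- Because the decision depends on runtime metrics, the same task can move between tiers as the measured round trip changes.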

3.3 State management & data consistency

- State management and data consistency are critical when your applications run partly on edge nodes and partly in the cloud. You need to keep state, data, and synchronization consistent across all locations. Strategies include eventual consistency, conflict-free replicated data types (CRDTs), state synchronization pipelines, and delta-based updates. For example, a connected fleet of drones may make real-time decisions at the edge, like avoiding obstacles, while the cloud tracks overall fleet positions and mission data. Edge-PaaS ensures that updates from each drone synchronize correctly with the central system, preventing conflicts and keeping data accurate. This balance allows fast local responses while maintaining global consistency across all nodes.
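- To show what a CRDT looks like in practice, here is a grow-only counter (G-Counter), one of the simplest conflict-free replicated data types. The class and the drone names are illustrative assumptions. Each replica only increments its own slot, and merging takes the per-replica maximum, so replicas converge regardless of the order in which they sync.

```python
class GCounter:
    """Grow-only counter CRDT: one slot per replica, merged by element-wise max."""

    def __init__(self, node_id):
        self.node_id = node_id
        self.counts = {}

    def increment(self, amount=1):
        if amount < 0:
            raise ValueError("a G-Counter can only grow")
        self.counts[self.node_id] = self.counts.get(self.node_id, 0) + amount

    def merge(self, other):
        """Fold in another replica's state; safe to repeat and order-independent."""
        for node, count in other.counts.items():
            self.counts[node] = max(self.counts.get(node, 0), count)

    @property
    def value(self):
        return sum(self.counts.values())


# Two hypothetical drones count completed waypoints while disconnected.
drone_a = GCounter("drone-a")
drone_b = GCounter("drone-b")
drone_a.increment(3)
drone_b.increment(2)

# They sync later, in any order, and possibly more than once.
drone_a.merge(drone_b)
drone_b.merge(drone_a)
drone_a.merge(drone_b)
print(drone_a.value, drone_b.value)
```

- Merging by maximum rather than by addition is what makes repeated or duplicated sync messages harmless.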

3.4 Monitoring, logging & observability

- Monitoring, logging, and observability are essential in Edge-PaaS because your applications run across many edge nodes and the cloud. You need to collect metrics and logs, trace requests across nodes, run health checks, and support distributed debugging. This helps you detect issues quickly and maintain performance. For example, in a smart city, traffic sensors, cameras, and streetlights generate data at the edge while central systems manage analytics. Edge-PaaS collects logs and metrics from all devices, so you can see which node is slow or failing. You can debug problems, keep operations smooth, and optimize resources across both edge and cloud components. Observability makes your system reliable and transparent.
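- Finding the node that is slow or failing can be sketched as a health check over per-node reports. Everything named here is an assumption for illustration: the report layout, the function `unhealthy_nodes`, and the thresholds of 200 ms and 30 seconds.

```python
from statistics import median


def unhealthy_nodes(reports, now, latency_limit_ms=200.0, heartbeat_timeout_s=30.0):
    """Map each problematic node to a short reason string."""
    problems = {}
    for node, report in reports.items():
        if now - report["last_seen"] > heartbeat_timeout_s:
            problems[node] = "no heartbeat"
        elif report["latencies_ms"] and median(report["latencies_ms"]) > latency_limit_ms:
            problems[node] = "slow"
    return problems


# Hypothetical reports from smart-city devices; timestamps are in seconds.
reports = {
    "camera-1": {"last_seen": 995.0, "latencies_ms": [40, 55, 48]},
    "camera-2": {"last_seen": 990.0, "latencies_ms": [350, 410, 390]},
    "light-7": {"last_seen": 900.0, "latencies_ms": [30, 35]},
}
problems = unhealthy_nodes(reports, now=1000.0)
print(problems)
```

- The median is used instead of the mean so that a single slow request does not flag an otherwise healthy node.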

3.5 Security, authentication & isolation

- Security, authentication, and isolation are critical in Edge-PaaS because edge nodes can be physically exposed and more vulnerable to tampering or attacks. You need secure boot, trusted execution, and attestation to verify that devices run authentic software. Encryption protects data both in transit and at rest. Strong identity, access control, and authentication across all nodes ensure only authorized users and services interact with the system. For example, in a connected hospital, patient monitoring devices at the edge collect sensitive health data. Edge-PaaS ensures that this data is encrypted, workloads are isolated, and only authorized staff can access the information, keeping systems secure and compliant.
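- One building block behind authenticated device traffic is a message authentication code. The sketch below uses Python's standard `hmac` module to sign and verify a reading with a shared key. The key, device name, and helper names are placeholders. This covers integrity and authenticity only: encryption, secure boot, and attestation need separate mechanisms.

```python
import hashlib
import hmac
import json


def sign(payload, key):
    """Return a hex HMAC-SHA256 tag over a canonical JSON encoding of payload."""
    body = json.dumps(payload, sort_keys=True, separators=(",", ":")).encode("utf-8")
    return hmac.new(key, body, hashlib.sha256).hexdigest()


def verify(payload, signature, key):
    """Check the tag in constant time to avoid leaking timing information."""
    return hmac.compare_digest(sign(payload, key), signature)


# Placeholder key for the demo; a real device would load it from secure storage.
DEVICE_KEY = b"demo-key-do-not-use-in-production"

reading = {"device": "monitor-12", "heart_rate": 72}
signature = sign(reading, DEVICE_KEY)

tampered = {"device": "monitor-12", "heart_rate": 180}
print(verify(reading, signature, DEVICE_KEY), verify(tampered, signature, DEVICE_KEY))
```

- Sorting the JSON keys matters: both sides must serialize the payload identically or valid messages would fail verification.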

3.6 Resource constraints & heterogeneity

- Resource constraints and heterogeneity are common challenges in Edge-PaaS because edge nodes often have limited CPU, memory, and storage compared to central cloud servers. The platform must be lightweight and adaptable to different hardware. You can use an optimized runtime to run applications efficiently and minimize memory footprint. Efficient resource usage ensures that multiple tasks can run simultaneously without overloading the node. For example, a smart traffic system may process video streams at small roadside edge devices. Edge-PaaS can perform adaptive load shedding by reducing non-critical tasks when resources are low, keeping important processing running smoothly, and ensuring reliable performance under constraints.
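- Adaptive load shedding can be illustrated with a priority-ordered budget check. This is a sketch under assumptions: the task tuples, the millicore units, and the greedy "keep it if it still fits" rule are choices made for the example rather than the behavior of a particular platform.

```python
def shed_load(tasks, cpu_budget_millicores):
    """Keep the most critical tasks that fit in the budget; shed the rest.

    Each task is (name, priority, cpu_millicores); a lower priority
    number means a more critical task.
    """
    kept, shed = [], []
    used = 0
    for name, _priority, cpu in sorted(tasks, key=lambda t: t[1]):
        if used + cpu <= cpu_budget_millicores:
            kept.append(name)
            used += cpu
        else:
            shed.append(name)
    return kept, shed


# Hypothetical tasks on a roadside device whose budget has dropped to 2 cores.
tasks = [
    ("thumbnail-cache", 3, 500),
    ("incident-detection", 1, 1000),
    ("traffic-count", 2, 600),
    ("debug-export", 4, 500),
]
kept, shed = shed_load(tasks, cpu_budget_millicores=2000)
print(kept, shed)
```

- Re-running the function whenever the available budget changes lets shed tasks return automatically once resources recover.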

3.7 Upgrades and rolling updates

- Upgrades and rolling updates are important in Edge-PaaS because your applications run across many distributed edge nodes, often with unreliable or intermittent connectivity. You need robust mechanisms like canary deployments, incremental updates, and rollback strategies to avoid disrupting services. For example, in a smart energy grid, edge devices control local power distribution while syncing with the cloud. When updating software, Edge-PaaS can deploy changes gradually to a few nodes first, monitor performance, and then roll out updates to all nodes. If problems appear, the system can roll back safely. This approach ensures that your applications stay reliable, consistent, and continuously available during upgrades.
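- The canary-then-rollout flow described above can be sketched as follows. The `deploy`, `is_healthy`, and `rollback` callbacks stand in for whatever a real platform provides, and the node names and version strings are invented for the demonstration.

```python
def rolling_update(nodes, deploy, is_healthy, rollback, canary_count=1):
    """Update canaries first, then the rest; undo every update if a batch fails."""
    updated = []
    batches = (nodes[:canary_count], nodes[canary_count:])
    for batch in batches:
        for node in batch:
            deploy(node)
            updated.append(node)
        if not all(is_healthy(node) for node in batch):
            for node in reversed(updated):
                rollback(node)
            return False
    return True


# Simulated grid controllers (hypothetical names) all start on version v1.
versions = {"grid-1": "v1", "grid-2": "v1", "grid-3": "v1"}
deploy_log = []


def deploy(node):
    versions[node] = "v2"
    deploy_log.append(node)


def rollback(node):
    versions[node] = "v1"


# First attempt: the canary reports unhealthy, so nothing else is touched.
failed_run = rolling_update(list(versions), deploy, lambda node: node != "grid-1", rollback)
versions_after_failure = dict(versions)
log_after_failure = list(deploy_log)

# Second attempt: every node stays healthy and the rollout completes.
successful_run = rolling_update(list(versions), deploy, lambda node: True, rollback)
```

- Checking health per batch keeps the blast radius small: a bad release reaches at most `canary_count` nodes before it is withdrawn.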

3.8 Networking & connectivity management

- Networking and connectivity management are crucial in Edge-PaaS because edge nodes connect through different types of networks, like wireless, 5G, or wired connections, which can be unstable. The platform must handle heterogeneous networks and ensure seamless communication. You may use overlay networks, service discovery, intelligent routing, caching, and fallback paths to maintain connectivity. For example, a fleet of delivery drones relies on edge nodes for real-time navigation. Edge-PaaS manages network variability, ensuring drones receive instructions even if some connections drop. This guarantees reliability, smooth data flow, and consistent operation across distributed nodes, allowing your applications to function efficiently under changing network conditions.
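- Fallback paths can be sketched as an ordered list of links that are tried in turn. The link names and the convention that a failed send raises `ConnectionError` are assumptions for this example.

```python
def send_with_fallback(message, links):
    """Try each (name, send) link in order; return the name that worked, or None."""
    for name, send in links:
        try:
            send(message)
        except ConnectionError:
            continue  # this path is down, try the next one
        return name
    return None


# Simulated links for the drone example: the primary path is down.
received = []


def broken_link(message):
    raise ConnectionError("5G link is down")


def working_link(message):
    received.append(message)


used = send_with_fallback("turn-left", [("5g", broken_link), ("wifi", working_link)])
none_left = send_with_fallback("turn-left", [("5g", broken_link)])
print(used, none_left)
```

- When every path fails the function returns None, which is the point at which a caller would typically buffer the message for a later retry.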

3.9 Developer model, API, and abstractions

- Developer model, API, and abstractions in Edge-PaaS focus on making your life easier as a developer. The API should be simple, cloud-native, and consistent with tools you already know. You can deploy a function, container, or microservice, specify placement constraints, and define dependencies without worrying about low-level details. For example, in a smart retail store, you can deploy an edge service that processes video from cameras for customer analytics. Edge-PaaS automatically decides which edge nodes handle the workload and how it communicates with the cloud. You focus on writing the application, while the platform manages distribution, scaling, and orchestration across all nodes. This makes development faster, safer, and more reliable.
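- To give a feel for such an API, here is a hypothetical deployment spec with placement constraints and dependencies. None of these names come from a real Edge-PaaS SDK: `ServiceSpec`, `eligible_nodes`, the image reference, and the label keys are invented to illustrate the shape of a declarative interface.

```python
from dataclasses import dataclass, field


@dataclass
class ServiceSpec:
    """Declarative description of one service; the platform decides the rest."""
    name: str
    image: str
    placement: dict = field(default_factory=dict)  # node labels that must match
    depends_on: list = field(default_factory=list)


def eligible_nodes(spec, node_labels):
    """Return the sorted names of nodes whose labels satisfy every constraint."""
    return sorted(
        node
        for node, labels in node_labels.items()
        if all(labels.get(key) == value for key, value in spec.placement.items())
    )


# Hypothetical retail deployment: run video analytics only on GPU nodes in one store.
spec = ServiceSpec(
    name="customer-analytics",
    image="registry.example.com/analytics:1.0",
    placement={"site": "store-12", "gpu": "true"},
    depends_on=["camera-gateway"],
)
node_labels = {
    "node-1": {"site": "store-12", "gpu": "true"},
    "node-2": {"site": "store-12", "gpu": "false"},
    "node-3": {"site": "store-40", "gpu": "true"},
}
targets = eligible_nodes(spec, node_labels)
print(targets)
```

- The developer states what the service needs, not where it runs, which is what lets the platform move it when nodes are added or fail.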

4. Use Cases & Scenarios

- Edge-PaaS plays a big role in changing how technology works in real life. You can use it in many industries such as healthcare, manufacturing, transportation, and smart cities. It helps you run real-time applications closer to where data is created, improving speed and reliability. Some systems depend on instant decisions, like self-driving cars or factory robots. Others need to process local data safely and efficiently. With Edge-PaaS, you make systems smarter, faster, and more connected without handling complex technical setups.

4.1 Smart manufacturing / Industry 4.0

- In smart manufacturing, you deal with machines and sensors that create huge amounts of data every second. You need real-time control to keep everything running smoothly, especially for robots or quality inspection systems. With an Edge-PaaS like Azure IoT Edge, you can deploy AI models directly on factory devices to detect faults instantly. If a machine vibrates abnormally, the system reacts immediately without waiting for cloud instructions. Later, data summaries go to the cloud for deeper analytics. This mix of local speed and central intelligence helps you improve safety, reduce downtime, and boost production efficiency. Edge-PaaS makes it easy to manage both factory floor operations and cloud-based insights at once.

4.2 Autonomous vehicles and drones

- Autonomous vehicles and drones depend on real-time decision-making for safety and navigation. You cannot always rely on the cloud because delays could cause accidents. Edge-PaaS helps by running critical logic on local computers inside the vehicle, while less urgent tasks like route learning or data analytics are handled in the cloud. For example, a platform such as Microsoft Azure IoT Edge lets you deploy containerized AI models to an onboard computer. The model processes sensor data on the spot and syncs updates with the cloud later. This hybrid setup keeps driving smooth, fast, and secure, even when the internet connection drops or weakens.

4.3 AR/VR and gaming

- AR/VR and cloud gaming need very fast responses to feel smooth and realistic. If there is too much delay, the experience becomes frustrating. With Edge-PaaS, you can run parts of the game or VR experience near the player instead of in a distant cloud. This reduces lag and makes everything feel more natural. For example, NVIDIA CloudXR lets you stream high-quality VR and AR experiences from nearby edge servers. Rendering and data processing happen on the edge server, and the visuals are streamed to your headset in real time. You get immersive gameplay while the platform manages latency, bandwidth, and scaling, giving you a fast and enjoyable experience.

4.4 Smart cities and edge analytics

- In a smart city, thousands of sensors and cameras collect data about traffic, pollution, and safety. You can use Edge-PaaS platforms like Azure IoT Edge to process this data locally. For example, a traffic camera can use AI models at the edge to detect accidents or congestion in real time. Only the summary data, like traffic flow statistics, is sent to the central cloud. This approach saves bandwidth and gives instant responses. You can quickly deploy or update apps across all city nodes using Edge-PaaS, making city operations faster, smarter, and more reliable without managing each device separately.

4.5 Healthcare and telemedicine

- In healthcare and telemedicine, you depend on fast and secure data handling to save lives. Imagine a remote patient monitoring system that tracks a person’s heartbeat and oxygen levels through connected medical devices. With Edge-PaaS, this data can be processed at a nearby edge node within moments, allowing doctors to react quickly during emergencies. A good example is Microsoft Azure IoT Edge, which helps hospitals process data locally while sending only important summaries to the cloud. Sensitive medical data stays on site, which helps you comply with privacy laws, while anonymized information supports cloud analytics for better diagnosis and long-term research. You gain both speed and safety in patient care.

4.6 Retail & point-of-sale

- In retail and point-of-sale, Edge-PaaS helps you deliver fast and smooth shopping experiences. You can process real-time inventory updates and customer recommendations directly in the store. For example, using AWS IoT Greengrass, a store can run local analytics on sales trends and stock levels without waiting for cloud responses. The system syncs with the main cloud later, when the connection is stable. You can also use AR-based shopping assistants that suggest products instantly. This approach keeps your store responsive, reduces downtime, and helps customers get accurate information during checkout or product searches, even when connectivity is limited.

4.7 Energy, utilities & oil & gas

- In energy, utilities, and oil and gas, Edge-PaaS helps you manage systems that work in distant and harsh locations. Imagine you are running a wind farm where each turbine uses sensors to track wind speed, vibration, and power output. An Edge-PaaS platform like Microsoft Azure IoT Edge lets you process this data locally to detect faults instantly and adjust blade angles in real time. The cloud still collects data for long-term analytics and maintenance planning. This approach saves bandwidth, ensures continuous operation, and avoids delays caused by unstable network connections. You can make quick, smart decisions even when offline, keeping energy production efficient and reliable across every site.

4.8 Telecom / MEC

- In telecom networks, Edge-PaaS helps you run applications close to users through Multi-access Edge Computing (MEC). You can imagine watching a VR concert or playing a cloud game with almost no delay because the data is processed at a nearby base station instead of a faraway cloud. A real example is AWS Wavelength, which works with telecom companies like Verizon to host apps at 5G edge locations. This setup reduces latency, improves speed, and allows developers to build smart services like real-time analytics, AR navigation, or video streaming directly near the user.

5. Benefits & Key Advantages

- With Edge-PaaS, you experience the best of both cloud and edge computing in one platform. It gives you the flexibility of cloud-based development while delivering the speed and low latency of local edge systems. You can manage apps easily without worrying about infrastructure details. This setup improves reliability, supports real-time processing, and allows smarter resource usage. Developers benefit from seamless scaling and simpler maintenance. Together, these advantages help you create faster, safer, and more efficient digital services for modern users.

5.1 Low latency and high responsiveness

- With Edge-PaaS, you experience lightning-fast responses because processing happens close to where data is created. For example, in autonomous vehicles, milliseconds matter when detecting obstacles. Using platforms like AWS Wavelength, you can run applications near 5G networks, ensuring real-time decisions. The data does not need to travel to a faraway cloud, which drastically cuts the delay. You gain the ability to build apps that respond instantly, making technologies like VR streaming and industrial automation smooth and reliable even in demanding conditions.

5.2 Simplified developer experience

- You can use familiar cloud-native tools such as containers, APIs, or serverless functions while the platform manages the edge complexity. For instance, Azure IoT Edge lets you deploy and update apps on remote devices through the same interface you use for the cloud. This makes development faster and more intuitive. You do not have to worry about node heterogeneity or communication issues. The platform’s automation handles deployment, scaling, and updates, freeing you to focus purely on application logic and user experience rather than infrastructure headaches.

5.3 Efficiency and cost savings

- Edge-PaaS reduces costs by processing data locally and sending only what matters to the cloud. Imagine you are running smart surveillance cameras using Google Distributed Cloud Edge. Instead of streaming every frame to the cloud, only analyzed or suspicious clips are uploaded. This approach saves network bandwidth and lowers cloud storage fees. It also reduces latency because decisions happen right on-site. Over time, these savings multiply, helping you manage operations efficiently without sacrificing performance or accuracy in real-world applications.

5.4 Fault tolerance and autonomy

- With Edge-PaaS, your systems stay functional even when disconnected from the central cloud. Suppose you are managing a smart factory using Red Hat OpenShift deployed at the edge. If the internet link drops, the edge nodes still run local operations, controlling machines and collecting data. Once the connection is restored, the system syncs automatically. This autonomy keeps work going without interruptions. You gain greater reliability, which is vital for industries that cannot afford downtime, such as energy grids, manufacturing plants, or transportation networks.

5.5 Data locality and compliance

- Some industries must follow strict privacy and data protection rules. Using Edge-PaaS, you can keep sensitive information close to its source while still using cloud capabilities. For example, healthcare apps built on IBM Edge Application Manager can process patient data in local hospitals while sending only aggregated insights to the cloud. This helps you follow regulations like GDPR while maintaining efficiency. You protect data privacy while still benefiting from scalable cloud analytics and secure coordination between multiple edge sites.

5.6 Scalability and distribution

- Edge-PaaS allows applications to scale naturally across distributed nodes. You can handle growing workloads by adding more edge devices instead of expanding a central data center. Take NVIDIA Fleet Command, for example. It helps you manage and scale AI workloads across multiple edge locations. If one site experiences more demand, new containers are deployed automatically there. This distributed approach prevents performance bottlenecks and ensures every user gets quick, reliable service no matter where they are located geographically.

5.7 Hybrid orchestration & intelligence

- Edge-PaaS platforms balance workloads between edge and cloud environments. For instance, a platform such as Google Anthos lets you manage cloud and edge clusters consistently, and a placement policy on top of it can weigh resource availability, latency, and connection quality to decide where specific tasks run. You can process data locally when the network is weak or shift workloads to the cloud when more computing power is needed. This dynamic orchestration improves efficiency and responsiveness. The policy can be tuned as conditions change, so you get good performance while using resources effectively across both layers.

5.8 Unified management

- Managing edge and cloud systems separately can be complex, but Edge-PaaS simplifies everything under one control panel. Platforms like VMware Edge Cloud Orchestrator give you unified visibility for deployment, monitoring, and updates. You can track edge nodes, check performance metrics, and roll out upgrades easily from one dashboard. This central view makes your job much simpler and reduces human errors. You spend less time managing infrastructure and more time improving application functionality, ensuring consistent operations across all environments.

6. Challenges and Possible Pitfalls

- When you explore Edge-PaaS, you quickly realize it brings both opportunity and complexity. The idea sounds powerful, but building and maintaining it is not simple. You face issues like security, data consistency, and scaling across diverse edge nodes. Each site may have different resources and network quality. You must also deal with integration between edge and cloud systems. Despite these difficulties, strong design principles and better automation can help you manage these challenges and unlock Edge-PaaS’s true potential.

6.1 Heterogeneity and fragmentation

- When you work with Edge-PaaS, one big challenge is the wide variety of hardware, such as ARM and x86 processors and GPU accelerators. Each device may also run a different operating system and use unique network settings. For example, Google Anthos helps unify edge and cloud environments by providing a consistent platform across devices. Still, performance tuning is tough because not every node supports the same libraries or acceleration features. You need smart orchestration tools that automatically adapt to these differences so your applications run smoothly regardless of the hardware mix at the edge.

6.2 Resource constraints

- Edge devices often have limited CPU, memory, and storage, making it difficult to run heavy workloads. You must design your applications to be lightweight and efficient. For instance, AWS IoT Greengrass allows you to deploy serverless functions that run locally on devices with minimal resources. This helps you process data close to its source without overwhelming the system. The platform manages what runs where, ensuring only the most essential computations occur locally while larger processing tasks move to the cloud. Efficient use of every resource ensures smoother operation and longer device life.

6.3 Connectivity volatility

- Network stability at the edge cannot be taken for granted. Some nodes may lose connection, face bandwidth drops, or experience high latency. A good example is Azure IoT Edge, which supports offline functionality for industrial devices. You can keep critical processes running locally even when disconnected from the internet. Once the connection returns, the platform automatically syncs data and updates. This resilience allows your applications to remain reliable in remote or mobile environments like offshore rigs or moving vehicles. Designing for graceful degradation helps your services keep working, at reduced capacity if necessary, under unstable connectivity.

6.4 State synchronization

- Keeping data consistent between edge and cloud is a complex task. Different nodes may process events at varying speeds or face temporary disconnections. Using platforms like Red Hat OpenShift Edge, you can set up synchronization pipelines to update only changed data instead of resending everything. This is especially important in smart manufacturing, where local machines track sensor states that must align with central dashboards. Techniques like eventual consistency and delta-based updates help maintain coherence. Proper state management ensures your system behaves predictably even when partial data delays occur.
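
- A delta-based update, as described above, sends only what changed since the last successful sync. The sketch below assumes machine state is a flat dictionary; the helper names `compute_delta` and `apply_delta` and the machine names are hypothetical and do not come from any specific platform.

```python
def compute_delta(last_synced, current):
    """Return only the entries that changed or disappeared since the last sync."""
    changed = {
        key: value
        for key, value in current.items()
        if key not in last_synced or last_synced[key] != value
    }
    removed = [key for key in last_synced if key not in current]
    return {"changed": changed, "removed": removed}

def apply_delta(state, delta):
    """Apply a delta from compute_delta to a copy of `state` (cloud side)."""
    merged = {k: v for k, v in state.items() if k not in delta["removed"]}
    merged.update(delta["changed"])
    return merged

# State the cloud dashboard saw at the last sync.
last_synced = {"press_1": "running", "press_2": "idle", "oven_1": "heating"}
# State on the edge node now.
current = {"press_1": "running", "press_2": "fault", "oven_2": "idle"}

delta = compute_delta(last_synced, current)     # small payload sent upstream
cloud_state = apply_delta(last_synced, delta)   # dashboard state after sync
```

- Here the unchanged `press_1` entry is never retransmitted, which is the bandwidth saving that matters on constrained edge links.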

6.5 Security risks

- Security becomes a major concern because edge devices are often located in unprotected environments. They are more exposed to tampering, malware, or unauthorized access. For example, IBM Edge Application Manager uses trusted execution environments and secure boot to verify devices before deployment. You can encrypt both data at rest and in transit to prevent interception. Identity management ensures only approved nodes communicate with the platform. By building layered protection, you strengthen trust between distributed components. Edge-PaaS must always combine hardware-level security with software-based monitoring to keep your system safe.

6.6 Tooling and debugging

- When your application runs across thousands of distributed nodes, debugging and monitoring become complicated. Logs and metrics come from different places, making it hard to pinpoint issues. Observability platforms such as Datadog can integrate with Kubernetes-based Edge-PaaS environments to collect telemetry from every node in near real time. You can visualize performance data, identify failures, and trace requests across cloud and edge layers. With such observability tools, you spend less time guessing and more time solving. Clear visibility helps you keep applications reliable and responsive across large, dispersed deployments.

6.7 Deployment overhead

- Rolling out updates across many nodes is not easy. Each device may run a different software version or connect at unpredictable times. Using Google Anthos Config Management, you can perform controlled rollouts with canary updates, monitor performance, and roll back changes if problems arise. This prevents downtime while keeping systems consistent. Managing thousands of edge sites requires automation and intelligent scheduling. The goal is to ensure every node stays up to date without overloading networks or interrupting service. A strong deployment pipeline ensures reliability and smooth user experiences.

6.8 Business model and economics

- Operating an Edge-PaaS platform requires a sustainable business strategy. Costs can rise with infrastructure management, bandwidth, and maintenance. Services like Equinix Metal provide edge infrastructure as a service, while Cloudflare Workers lets developers deploy applications close to users using a pay-as-you-go model. You must balance investment and pricing to ensure profitability. Building partnerships between telecoms, cloud providers, and enterprises helps reduce costs. Deciding who owns and manages the edge layer remains crucial for scaling economically while maintaining accessibility and fairness for developers and users.

6.9 Vendor lock-in risk

- When you depend on a single proprietary provider, switching becomes difficult. Many developers worry about being trapped in a closed ecosystem. Platforms like K3s or OpenShift use open standards and Kubernetes compatibility to minimize lock-in. You can migrate workloads across clouds or on-premises setups easily. This flexibility protects your investment and gives you freedom of choice. By adopting open APIs and portable architectures, you ensure long-term independence. In the fast-changing edge computing world, maintaining control over your platform choices helps you adapt faster and stay competitive.

7. Real-World and Research Precedents

- You can already see Edge-PaaS taking shape in both research and industry. Several projects explore how to blend cloud-native tools with edge infrastructure. Academic work like ECHO and FogBus2 shows that seamless workload migration is possible. Meanwhile, companies such as AWS, Microsoft, and Google are testing real systems that bring PaaS closer to users. These early experiments prove that Edge-PaaS is not just a theory but a practical step toward faster, smarter, and more connected application ecosystems.

7.1 ECHO: Hybrid Orchestration Platform

- You can use ECHO to manage dataflows across edge, fog, and cloud environments in a smart and flexible way. It helps you schedule components dynamically, so computations run where they are fastest and most efficient. Moving tasks between layers can be challenging because you need to keep latency low and performance high at all times. In real-life situations like smart cities, autonomous vehicles, or live analytics, ECHO makes systems more responsive and reliable. Unlike traditional cloud-only PaaS, it decides the best location for each task based on current conditions. By using this kind of platform, you can be more confident that your applications run in a suitable location, saving resources and improving user experience.

7.2 IoT Hybrid Cloud/Fog PaaS Architectures

- When you work with IoT applications, hybrid cloud/fog PaaS makes deployment simpler and faster. It allows you to automate provisioning of components across multiple layers without manually managing infrastructure. You can reduce latency by keeping critical tasks close to devices while using the cloud for heavy computation. Industries like smart manufacturing, connected cars, and smart homes benefit a lot. These platforms handle scaling, load balancing, and resource allocation automatically. By using hybrid PaaS, you focus on building your application instead of worrying about servers. Your devices stay responsive, and your system remains efficient even under heavy workloads.

7.3 Context-Aware Orchestration

- You can improve system performance using context-aware orchestration in edge, fog, and cloud systems. It decides where computation should run based on network conditions, device load, and latency. Applications like augmented reality, virtual reality, and live analytics rely on this approach. The system automatically adapts to current conditions, moving tasks to the best location. Smart algorithms track real-time context and make decisions that keep services fast and reliable. This prevents delays, system crashes, and poor user experience. Using context-aware orchestration ensures your applications maintain high quality of service, handle heavy loads efficiently, and stay flexible as conditions change.

7.4 Industrial PaaS Offerings

- Some companies are creating industrial PaaS platforms specifically for edge computing. You can see them in industries like telecom, sports analytics, and manufacturing. These platforms are designed to handle real-time workloads and distributed components efficiently. They provide tools to monitor performance, manage tasks, and optimize resources without manual intervention. You don’t need to worry about infrastructure problems, which saves time and effort. Industries benefit because applications remain fast, reliable, and scalable even at the edge. By using industrial PaaS, you can focus on building solutions, innovating in your domain, and ensuring your systems run smoothly under demanding conditions.

8. Design Patterns & Best Practices for Edge-PaaS

- When you design or use Edge-PaaS, following the right patterns makes your system reliable and efficient. You should focus on modular applications, so each part can run independently at the edge or cloud. Event-driven design helps your services react quickly to data changes. You can also apply context-aware orchestration to place workloads where they perform best. Using scalable and resilient components ensures your applications keep running even under failure. These best practices help you build smarter, faster, and more maintainable edge-native systems.

8.1 Microservice + Function Decomposition

- When you build an Edge-PaaS application, breaking it into smaller microservices or serverless functions makes it easier to deploy at both the edge and cloud. For example, Azure IoT Edge lets you run independent modules for sensor data processing, device control, or analytics. Each module can be stateless or maintain a manageable state. You gain flexibility because a failing module doesn’t crash the entire system. You also achieve faster updates, easier debugging, and better scalability. By decomposing applications, you can run workloads close to users, respond faster, and maintain control over distributed resources.

8.2 Latency-Aware Placement Policies

- You can annotate application modules with latency, reliability, and resource constraints so the platform places them where they perform best. For instance, Google Distributed Cloud Edge allows you to run real-time inference close to 5G users while sending heavy analytics to the central cloud. The platform decides which node gets which workload, reducing delays. You improve user experience by keeping critical tasks near the source. This approach ensures responsiveness, avoids overloading slow nodes, and balances performance across edge sites. Proper placement lets your applications respond instantly while using resources efficiently.
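
- To show how annotated constraints can drive placement, here is a minimal sketch. It assumes each module declares a maximum latency and a CPU requirement, and each node reports a measured latency and free CPU. The classes `Node` and `Module`, the `place` function, and all node names are hypothetical, and real platforms use far richer schedulers.

```python
from dataclasses import dataclass

@dataclass
class Node:
    name: str
    latency_ms: float   # measured round trip to the data source
    free_cpu: float     # CPU cores currently available

@dataclass
class Module:
    name: str
    max_latency_ms: float   # latency annotation
    cpu: float              # resource annotation

def place(module, nodes):
    """Pick the feasible node with the most free CPU, or None if none fits."""
    feasible = [
        n for n in nodes
        if n.latency_ms <= module.max_latency_ms and n.free_cpu >= module.cpu
    ]
    if not feasible:
        return None
    chosen = max(feasible, key=lambda n: n.free_cpu)
    chosen.free_cpu -= module.cpu   # reserve the capacity on that node
    return chosen.name

nodes = [
    Node("edge-5g-a", latency_ms=4, free_cpu=2.0),
    Node("regional", latency_ms=25, free_cpu=8.0),
    Node("cloud", latency_ms=80, free_cpu=64.0),
]
modules = [
    Module("inference", max_latency_ms=10, cpu=1.5),
    Module("analytics", max_latency_ms=500, cpu=16.0),
]

placement = {m.name: place(m, nodes) for m in modules}
```

- In this example only the 5G edge node meets the 10 ms limit for inference, while the analytics module tolerates higher latency and lands on the node with the most spare capacity.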

8.3 Fallback and Graceful Degradation

- Edge nodes or networks may fail unexpectedly, so your system must degrade gracefully instead of crashing. Using AWS IoT Greengrass, you can implement a local cache or limited-mode operation if connectivity drops. For example, a smart factory can continue controlling machinery even when cloud access is lost. Once the connection is restored, data syncs automatically. You maintain reliability and prevent total service disruption. Users experience minimal interruption because the application adapts to failures. By planning for fallback scenarios, you make your Edge-PaaS applications robust, resilient, and able to handle real-world conditions smoothly.
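
- The local cache and limited-mode behavior described above is often called store-and-forward. The sketch below is one simple way to express it and is not the AWS IoT Greengrass API: the class `StoreAndForward`, the `fake_send` uploader, and the `link` flag are all hypothetical stand-ins for a real uplink.

```python
from collections import deque

class StoreAndForward:
    """Buffer readings locally while the uplink is down, replay on reconnect."""

    def __init__(self, send, max_buffered=1000):
        self.send = send                           # callable that uploads one reading
        self.buffer = deque(maxlen=max_buffered)   # oldest entries drop when full
        self.online = True

    def publish(self, reading):
        if self.online:
            try:
                self.send(reading)
                return
            except ConnectionError:
                self.online = False                # switch to limited mode
        self.buffer.append(reading)

    def reconnect(self):
        """Call when connectivity returns; replays buffered readings in order."""
        self.online = True
        while self.buffer:
            try:
                self.send(self.buffer[0])
            except ConnectionError:
                self.online = False                # link dropped again, keep the rest
                return
            self.buffer.popleft()

# Simulated uplink for the example.
uploaded = []
link = {"up": True}

def fake_send(reading):
    if not link["up"]:
        raise ConnectionError("uplink down")
    uploaded.append(reading)

client = StoreAndForward(fake_send)
client.publish({"temp": 70})    # sent immediately
link["up"] = False
client.publish({"temp": 71})    # buffered locally
client.publish({"temp": 72})    # buffered locally
link["up"] = True
client.reconnect()              # buffered readings are replayed in order
```

- The bounded buffer is a deliberate trade-off: on a device with limited storage, dropping the oldest readings is usually preferable to running out of memory during a long outage.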

8.4 State Partitioning / Sharding

- You should split your application state by location to reduce cross-node synchronization. For example, Red Hat OpenShift Edge can shard sensor data for different manufacturing floors, so each edge node manages local readings. This reduces latency and avoids bottlenecks in communication. You can use eventual consistency for parts that do not require strict synchronization. When nodes reconnect, updates propagate automatically. This approach helps you maintain high performance across distributed environments. Partitioning state allows your Edge-PaaS system to scale efficiently while keeping local operations fast and reliable.
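
- Partitioning by location comes down to routing every write through a location key. The following sketch assumes a static mapping from factory floor to edge node. The class `ShardedState` and the floor, node, and sensor names are hypothetical, and in a real deployment each shard would live on its own node rather than in one process.

```python
from collections import defaultdict

class ShardedState:
    """Keep each floor's sensor state on the node that owns that floor."""

    def __init__(self, floor_to_node):
        self.floor_to_node = floor_to_node
        # node name -> floor -> {sensor_id: latest value}
        self.shards = defaultdict(lambda: defaultdict(dict))

    def write(self, floor, sensor_id, value):
        node = self.floor_to_node[floor]   # route by location key
        self.shards[node][floor][sensor_id] = value
        return node

    def read_floor(self, floor):
        node = self.floor_to_node[floor]
        return dict(self.shards[node][floor])

state = ShardedState({"floor-1": "edge-a", "floor-2": "edge-b"})
state.write("floor-1", "temp-01", 71.5)
state.write("floor-2", "temp-01", 64.0)
state.write("floor-1", "vib-07", 0.02)

floor_1 = state.read_floor("floor-1")   # served entirely by edge-a
```

- Because reads and writes for a floor touch only one node, no cross-node coordination is needed on the hot path. Only factory-wide views require combining shards.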

8.5 Sync & Merge Protocols

- Maintaining a consistent state across edge and cloud nodes typically relies on techniques such as CRDTs (conflict-free replicated data types), vector clocks, or delta updates. Hybrid orchestration platforms can use these techniques to merge data when nodes reconnect after outages. Imagine multiple smart traffic sensors updating the same dashboard independently. When connectivity resumes, the system merges updates without conflicts. You get coherent results even with temporary disconnections. This helps your applications stay accurate and reliable. By using robust synchronization protocols, your Edge-PaaS system handles distributed data effectively and keeps user-facing results consistent.
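
- One of the simplest CRDTs is a grow-only counter (G-Counter), which fits the traffic-sensor scenario: each sensor increments only its own slot, and merging takes the maximum of each slot. The sketch below is illustrative and is not taken from any specific platform. The class name `GCounter` and the sensor identifiers are assumptions.

```python
class GCounter:
    """Grow-only counter CRDT: one slot per node, merge takes the per-slot max."""

    def __init__(self, node_id):
        self.node_id = node_id
        self.counts = {}

    def increment(self, amount=1):
        # A node only ever writes to its own slot.
        self.counts[self.node_id] = self.counts.get(self.node_id, 0) + amount

    def merge(self, other):
        # Per-slot max is commutative, associative, and idempotent,
        # so replicas converge regardless of merge order or repetition.
        for node, count in other.counts.items():
            self.counts[node] = max(self.counts.get(node, 0), count)

    def value(self):
        return sum(self.counts.values())

north = GCounter("sensor-north")
south = GCounter("sensor-south")
north.increment(3)   # vehicles counted while disconnected
south.increment(5)

north.merge(south)   # connectivity resumes, replicas exchange state
south.merge(north)
north.merge(south)   # repeating a merge changes nothing
```

- After the exchange both replicas report the same total. A G-Counter only supports increments; values that can decrease or be overwritten need other CRDT types or a different conflict-resolution rule.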

8.6 Health Checks & Dynamic Migration

- Your platform must continuously monitor edge node metrics such as CPU, memory, and latency. Azure IoT Edge allows you to migrate workloads dynamically when nodes are overloaded. If a node starts lagging, tasks move to healthier devices automatically. You avoid failures and maintain responsiveness. This approach ensures better resource utilization and protects user experience. By watching system health in real time, you can react to changing conditions immediately. Dynamic migration helps your Edge-PaaS applications remain efficient, reliable, and adaptive even under unpredictable workloads.
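
- The monitor-then-migrate loop can be sketched as a health predicate plus a migration planner. The thresholds, the metric keys `cpu`, `mem`, and `latency_ms`, and the function names below are assumptions chosen for illustration. This is not how Azure IoT Edge exposes the capability.

```python
def is_healthy(metrics, cpu_limit=0.85, mem_limit=0.90, latency_limit_ms=50):
    """A node is healthy when every metric is within its (assumed) threshold."""
    return (
        metrics["cpu"] <= cpu_limit
        and metrics["mem"] <= mem_limit
        and metrics["latency_ms"] <= latency_limit_ms
    )

def plan_migrations(assignments, node_metrics):
    """Return {task: target_node} for tasks running on unhealthy nodes.

    The target is the healthy node with the lowest CPU load. Tasks stay
    in place when no healthy node exists.
    """
    healthy = sorted(
        (name for name, m in node_metrics.items() if is_healthy(m)),
        key=lambda name: node_metrics[name]["cpu"],
    )
    moves = {}
    for task, node in assignments.items():
        if healthy and not is_healthy(node_metrics[node]):
            moves[task] = healthy[0]
    return moves

node_metrics = {
    "edge-a": {"cpu": 0.97, "mem": 0.60, "latency_ms": 120},   # overloaded
    "edge-b": {"cpu": 0.40, "mem": 0.50, "latency_ms": 12},
    "edge-c": {"cpu": 0.25, "mem": 0.70, "latency_ms": 18},
}
assignments = {"detector": "edge-a", "aggregator": "edge-b"}

moves = plan_migrations(assignments, node_metrics)
```

- This planner sends every displaced task to the single least-loaded node and does not account for the load it adds there. A production scheduler would update capacity estimates after each move and add hysteresis so tasks do not bounce between nodes.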

8.7 Canary Deployments and Rollbacks

- When updating applications, you should deploy new versions gradually to avoid system-wide failures. Using Google Anthos, you can send a new release to a few edge nodes first. If metrics show issues, you can roll back safely without affecting the entire system. This method reduces downtime and prevents critical failures. You gain confidence in updates and maintain service quality. Canary deployments help your Edge-PaaS system evolve safely while minimizing risk for distributed, latency-sensitive applications.
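
- The canary logic itself is small: update a fraction of nodes, check a health signal, then either promote or roll back. The sketch below assumes the fleet is a simple node-to-version mapping and that an `error_rate(node)` hook is available. Both the function `canary_rollout` and that hook are hypothetical and not part of Google Anthos.

```python
import math

def canary_rollout(versions, new_version, error_rate,
                   canary_fraction=0.1, max_error_rate=0.02):
    """Update `versions` (node -> version) in place using a canary stage.

    `error_rate(node)` is a caller-supplied hook that returns the observed
    error rate of a node once it runs the new version.
    """
    nodes = sorted(versions)
    canary_count = max(1, math.ceil(len(nodes) * canary_fraction))
    canaries = nodes[:canary_count]
    previous = {n: versions[n] for n in canaries}

    for n in canaries:
        versions[n] = new_version

    if any(error_rate(n) > max_error_rate for n in canaries):
        versions.update(previous)        # roll back only the canaries
        return "rolled_back"

    for n in nodes[canary_count:]:
        versions[n] = new_version        # promote to the rest of the fleet
    return "promoted"

fleet = {f"edge-{i:02d}": "1.0" for i in range(20)}

# A release whose canaries report a 10% error rate is rolled back.
fleet_bad = dict(fleet)
result_bad = canary_rollout(fleet_bad, "2.0", error_rate=lambda n: 0.10)

# A release whose canaries look healthy is promoted everywhere.
fleet_ok = dict(fleet)
result_ok = canary_rollout(fleet_ok, "2.0", error_rate=lambda n: 0.001)
```

- In practice you would also wait for a soak period before reading metrics and promote in several waves rather than one, but the promote-or-roll-back decision stays the same.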

8.8 Secure Boot & Attestation

- You must ensure that edge nodes run untampered code. Platforms like IBM Edge Application Manager use secure boot and trusted attestation to verify hardware and software integrity. This prevents unauthorized access or tampering at remote locations. You gain trust in the platform, ensuring only verified applications execute. Security is especially important in sensitive environments like healthcare or finance. By combining hardware and software safeguards, your Edge-PaaS applications stay protected, resilient, and compliant with regulations.

8.9 Resource-Aware Scheduling

- Edge nodes have different capacities, so the platform should adapt to CPU, memory, and storage constraints. AWS IoT Greengrass schedules workloads intelligently, scaling or shedding load based on node availability. If a node is overloaded, tasks move elsewhere or degrade gracefully. You maximize performance without crashing devices. Resource-aware scheduling ensures that even limited nodes run efficiently while meeting real-time requirements. This approach helps your Edge-PaaS applications stay responsive, reliable, and optimized across a mix of hardware types.
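
- Load shedding on a constrained node can be expressed as priority-ordered admission against a memory budget. This is a simplified sketch under assumed inputs. The `schedule` function, the task tuples, and the numbers are hypothetical and do not reflect how AWS IoT Greengrass schedules components.

```python
def schedule(tasks, capacity_mb):
    """Admit tasks by priority until the node's memory budget is used up.

    `tasks` is a list of (name, priority, memory_mb) tuples, where a higher
    priority wins. Returns (admitted, shed) as lists of task names.
    """
    admitted, shed = [], []
    remaining = capacity_mb
    for name, _priority, memory_mb in sorted(tasks, key=lambda t: -t[1]):
        if memory_mb <= remaining:
            admitted.append(name)
            remaining -= memory_mb
        else:
            shed.append(name)   # candidate for another node or the cloud
    return admitted, shed

tasks = [
    ("anomaly-detector", 10, 300),
    ("video-thumbnailer", 2, 400),
    ("feature-extractor", 7, 250),
    ("log-compressor", 1, 100),
]

admitted, shed = schedule(tasks, capacity_mb=700)
```

- Note that the low-priority `log-compressor` is still admitted because it fits in the memory left over after the larger `video-thumbnailer` is shed. Whether that kind of backfilling is desirable depends on your real-time guarantees.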

8.10 Telemetry & Observability

- Collecting metrics, logs, and traces from distributed nodes is critical. Using an observability platform such as Datadog, you can monitor health, performance, and errors across all edge and cloud components. Centralized dashboards allow you to trace issues, debug distributed workflows, and maintain visibility. You can often detect problems before users notice them. Observability supports better decision-making and faster troubleshooting. By implementing strong telemetry, your Edge-PaaS applications remain transparent, predictable, and easier to maintain across complex distributed deployments.

9. A Sample Application Flow (Illustrative)

- A smart factory shows how Edge-PaaS works in real life. You can imagine sensors, robots, and cameras all sending data for instant decisions. The edge layer handles quick actions like detecting faults or controlling machines in real time. The cloud processes long-term data, builds models, and manages analytics. Together, they form a hybrid system that improves speed and efficiency. You gain both real-time control and data intelligence, showing how Edge-PaaS connects performance with automation seamlessly.

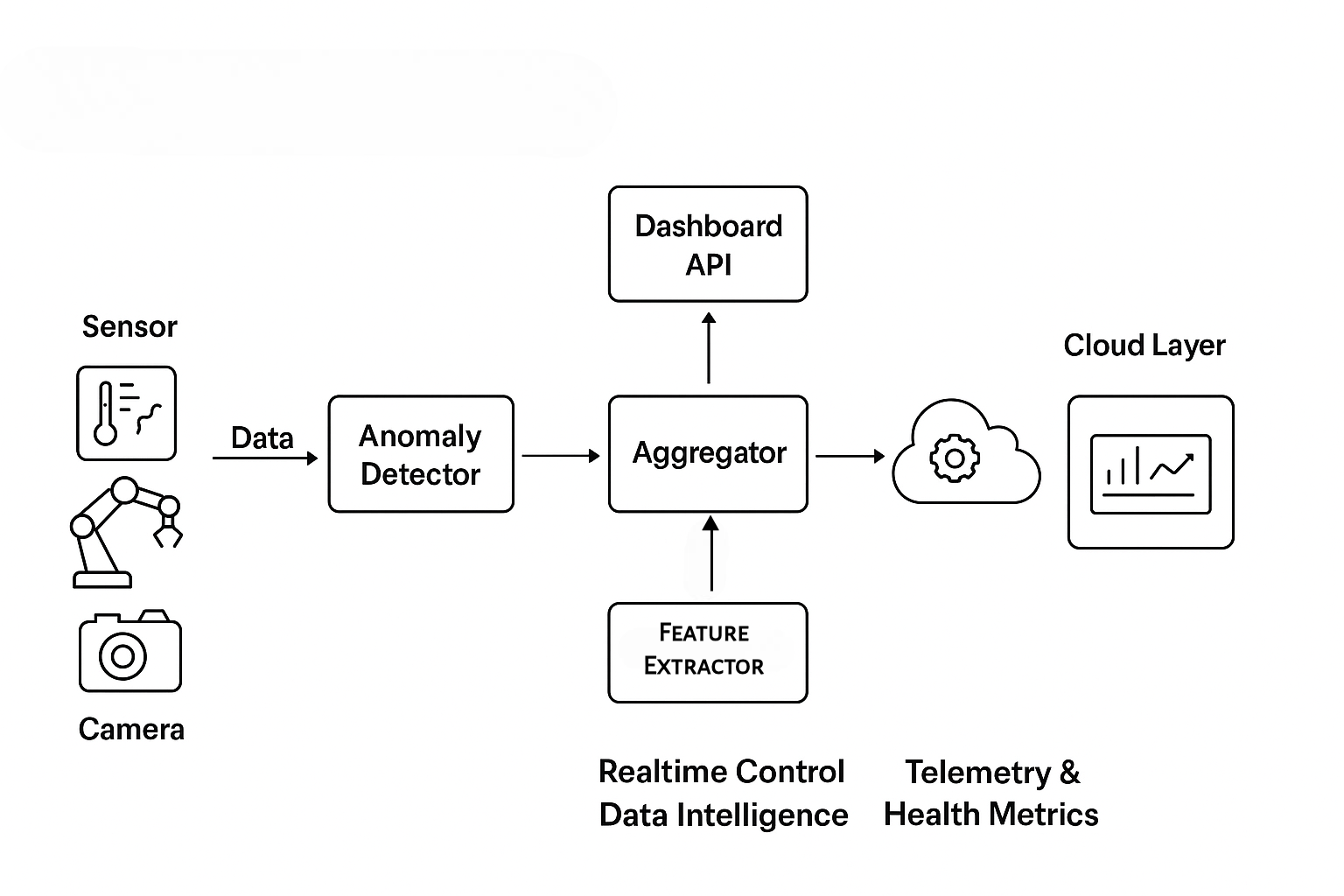

To make this concrete, consider a smart factory scenario:

- In a smart factory, sensors constantly record temperature, vibration, and speed, sending massive streams of data every second. A control module must instantly react, stopping or adjusting a machine within milliseconds if it detects an issue. Over time, a predictive analytics model studies this collected data to predict failures and improve performance. A dashboard in the central system then displays factory-wide trends, helping you monitor health, efficiency, and productivity while supporting better decision-making and maintenance planning every day.

With Edge-PaaS:

- As a developer, you design an application using multiple modules like an anomaly detector, a feature extractor, an aggregator, and a dashboard API. You set latency limits, deciding that the anomaly detector must react within 10 milliseconds near the machines, while the dashboard can stay in the cloud. The system places each part intelligently across layers for the best performance. If the network goes down, edge modules keep working and store results. Once connectivity returns, data synchronizes and updates flow smoothly. Meanwhile, telemetry and health metrics travel to a central console, letting you track system health and performance. This structure ensures real-time responsiveness, efficiency, and reliability across the entire factory network.

10. Why the Future Lies with Edge-PaaS

- You live in a world where speed, data, and connectivity define success. Edge-PaaS brings computing closer to where actions happen, giving you real-time control that traditional clouds cannot match. It combines the scalability of cloud with the instant response of edge systems. You can build applications that think and react locally while staying connected globally. This balance between performance and intelligence makes Edge-PaaS a foundation for the future of real-time cloud computing and modern digital innovation.

10.1 Balancing Abstraction and Performance

- You can focus on coding and innovation instead of struggling with complex hardware or network details. Edge-PaaS hides the hard parts while still giving you the speed and precision needed at the edge. It keeps development simple but ensures systems react instantly. By blending cloud-like ease with edge-level performance, you get the best of both worlds. This balance helps you build smarter, faster applications without losing control or flexibility in the deployment process.

10.2 Enabling New Real-Time Applications

- With Edge-PaaS, you can create apps that respond in real time, such as autonomous vehicles, AR/VR systems, or smart healthcare tools. Traditional clouds cannot meet such strict latency needs. The platform brings computing closer to where events happen, making fast decision-making possible. You also gain flexibility to develop in familiar environments. This shift unlocks opportunities in areas that demand instant action, helping you explore innovative, real-world technologies faster and more efficiently.

10.3 Aligning with Hybrid-Edge Trends

- You now see cloud companies extending their services toward the edge to improve performance. Edge-PaaS fits perfectly with this movement by allowing you to deploy your applications across both cloud and edge layers using one unified system. You can run real-time tasks close to users while still using cloud power for analytics. This approach bridges the gap between two worlds, giving you a consistent and flexible way to manage workloads seamlessly across environments.

10.4 Scaling Through Automation and Orchestration

- As millions of edge devices come online, managing them manually becomes impossible. Edge-PaaS helps you handle this massive scale through automation and orchestration. You can deploy updates, manage workloads, and balance resources across hundreds or thousands of locations automatically. It ensures every node runs efficiently without your direct involvement. This scalable model saves time, reduces errors, and helps you maintain control over a distributed network of devices in real-world applications easily.

10.5 Improving Operational Efficiency

- You gain more control over resources by letting data be processed near its source. This reduces bandwidth costs and network delays, leading to faster responses. Edge-PaaS helps you manage both edge and cloud systems together, cutting down on maintenance work. You can focus more on improving application performance instead of handling infrastructure issues. The combined efficiency of edge and cloud makes your operations more sustainable, cost-effective, and capable of supporting continuous innovation effortlessly.

10.6 Future-Proofing with Emerging Technologies

- Technologies like 5G, IoT, and autonomous systems are growing rapidly, and they need instant computing power. Edge-PaaS gives you the flexibility to support these evolving systems by distributing workloads intelligently. You can handle large amounts of data while keeping response times low. As digital transformation continues, this model ensures your systems stay adaptable, responsive, and ready for the next generation of connected devices and real-time applications shaping the future.

11. Potential Roadmap & Evolution

- You can imagine the evolution of Edge-PaaS as a steady journey toward smarter, faster, and more connected systems. Over the next decade, the platform will gain automation, intelligence, and interoperability between edge and cloud. It will simplify how you deploy, monitor, and scale real-time apps. Gradually, AI-driven orchestration and self-healing systems will become common. This shift will empower you to build solutions that react instantly, manage themselves, and adapt to changing environments with greater reliability and efficiency.

11.1 Pilot platforms and vertical proofs-of-concept

- In the beginning, Edge-PaaS will appear in specific fields like telecom, manufacturing, and smart cities. You will see companies testing small projects to prove their value. These early systems will show how real-time control, local processing, and cloud coordination can work together. By focusing on a single industry first, developers can fix issues and improve reliability. As results become clear, more sectors will start adopting Edge-PaaS for faster and more efficient operations.

11.2 Open standards and abstractions

- To make Edge-PaaS flexible, developers and companies will create open standards. You will rely on common APIs and orchestration models that allow applications to run on different platforms. This prevents you from being locked into one vendor’s ecosystem. Shared frameworks make collaboration easier and innovation faster. As more systems adopt these open models, you will find it simpler to connect edge, cloud, and fog layers under one unified environment.

11.3 Convergence with cloud-native stack

- Over time, Edge-PaaS will merge with the cloud-native ecosystem. You will notice tools like Kubernetes, service meshes, and FaaS models expanding their reach to the edge. This allows you to use the same development practices you already know while improving speed and scalability. It simplifies management since both cloud and edge workloads can share the same foundation. You gain better performance without needing to learn entirely new systems or processes.

11.4 AI-driven orchestration

- Future Edge-PaaS platforms will use artificial intelligence to manage complex operations automatically. You can expect machine learning algorithms to decide where to run your workloads, when to migrate them, and how to balance resources efficiently. This intelligent control removes the need for manual tuning and ensures faster responses to changing network conditions. With AI helping to optimize placement, you will see improved reliability and performance across distributed environments.

11.5 Federated edge marketplaces

- In the coming years, you will experience a federated marketplace where multiple edge providers work together. Developers like you will deploy applications across different networks and infrastructures seamlessly. This cooperation will allow access to a broader range of resources while maintaining control. By sharing services among various providers, you gain flexibility and choice. This approach also encourages healthy competition, driving innovation and reducing costs for users and developers alike.

11.6 Edge-native runtimes and hardware co-design

- New generations of edge hardware will bring specialized chips like TPUs and NPUs for faster processing. You will also see edge-native runtimes built to make the most of these devices. These systems will run efficiently, consuming less power while delivering high-speed computation. As software and hardware evolve together, you will develop smarter, more responsive applications. This combination ensures that edge computing remains powerful, compact, and capable of supporting future workloads.

11.7 Global adoption and ecosystems

- Over time, Edge-PaaS will become as common as traditional cloud platforms. You will find a growing ecosystem of tools, frameworks, and plugins supporting it. Developers around the world will collaborate to enhance features and build real-time applications more easily. Businesses will integrate it into everyday operations, improving performance and reducing latency. As this adoption spreads, you will be part of a global shift toward faster, smarter, and more connected computing.

Conclusion

- Think of Edge-PaaS not as another technology trend but as your next-generation partner in building smarter, faster, and more responsive systems. It works beside you, extending the power of the cloud to where data is created. Together, you and Edge-PaaS can design applications that think, act, and react in real time. The question is not if this model will shape computing; it is how ready you are to use it to your advantage.

- The rise of Edge-PaaS is not a challenge; it is an opportunity to rethink how you build and deliver digital experiences. When you treat it as a collaborator, not just a platform, you gain flexibility, precision, and scale. Routine management becomes automated, giving you space to focus on innovation. You no longer need to choose between control and convenience; you can have both working seamlessly across cloud and edge.

- The real barrier is not technology but mindset. If you see Edge-PaaS only as an extension of the cloud, you miss its true power. But if you embrace it as a collaborative system, you open doors to new forms of real-time intelligence, local decision-making, and global efficiency. You create solutions that are faster, more reliable, and deeply connected to the world they serve.

- The future of computing is not cloud versus edge. It is cloud with edge, unified through Edge-PaaS. The question is simple: are you ready to welcome your most powerful new partner in innovation?