In today’s digital world, your data is one of your most valuable assets. Whether it’s customer details, transaction records, or business-critical apps, losing that data could cause serious damage, from financial loss and downtime to reputational harm. You may think your cloud server is safe, but it’s still vulnerable to cyberattacks, accidental deletions, and hardware failures. That’s why you can’t just hope for the best: you need a plan.

The good news? You don’t have to handle backups manually. With cloud backup automation, you can protect your servers without lifting a finger every day. Just set the rules once, and your data gets copied, stored, and secured like clockwork. You’ll have peace of mind knowing you can recover fast when things go wrong.

In this guide, you’ll discover the best tools and smartest practices to automate your cloud backups. Whether you use AWS, Azure, GCP, or open-source tools, you’ll learn how to keep your data safe, your costs low, and your recovery plan rock solid. So let’s get started. It’s time to take control, automate your backups, and make sure your data is always ready when you need it most.

1. Why Cloud Server Backups Matter

1.1 Data Loss Prevention

- You can lose data because of hardware failure, accidents, viruses, or natural disasters. When that happens, you might not be able to get your files back. But if you use cloud backups, your data stays safe online. You can easily recover everything you need. It’s like saving a copy of your homework in your email—just in case your computer crashes. With cloud backups, you don’t need to panic when things go wrong.

1.2 Disaster Recovery

- If something really bad happens, like a server crash or cyberattack, you need a way to get everything working again. That’s where disaster recovery helps. By using cloud backups, you can restore your files and systems quickly, so you won’t lose days or weeks trying to fix things. It’s like hitting the “undo” button after making a big mistake: it saves time and keeps everything from falling apart.

1.3 Security Against Ransomware

- Ransomware is a type of malware that locks your files and demands money to unlock them. If it happens to you, you could lose all your work. But with a clean cloud backup that the attacker can’t reach, you don’t need to pay. You can delete the infected files and restore clean ones from the cloud. This gives you a safe way to fight back against hackers. It’s like having a clean backup of your phone before it breaks.

1.4 Automatic & Regular Backups

- Sometimes, you forget to save your work or make a copy. With cloud backups, that isn’t a problem. Most cloud services can run automatic, scheduled backups for you once you turn them on. That means your files are saved often, without you having to do anything. It’s like having your notes copied every hour by someone else. Even if you forget, your data stays up to date and safe. That way, you’ll never lose too much work at once.

1.5 Scalability and Flexibility

- As you collect more data, you’ll need more space to store it. Cloud storage grows with you. You can easily add more space without buying new equipment. That’s called scalability. And it’s flexible too—you only pay for what you use. It’s like having an expandable backpack that grows when you need it to carry more books. You’re never stuck or limited, and your data is always safe and ready when you need it.

1.6 Remote Access and Availability

- With cloud backups, you can access your data from anywhere with an internet connection. You don’t need to be in the same room, or even the same city, as your server. This is called remote access. If there’s an emergency or you’re working from home, you can still get your files. It’s like having your locker follow you around. As long as you have your login, your stuff is there. That makes your life easier and safer.

1.7 Cost-Effective

- Setting up your own backup system with hard drives and servers is expensive. But cloud backups don’t need that kind of equipment. You only pay for the storage you use, like a pay-as-you-go phone plan. This makes it much more cost-effective. You save money while keeping your data safe. It’s a smart way to protect your work without breaking your budget. Think of it like renting space in a digital safe instead of buying one.

1.8 Compliance and Audit Requirements

- If you’re working with sensitive data, you need to follow rules and laws that protect it. This is called compliance. Cloud backups help you follow these rules by keeping your data secure and trackable. It also helps during audits, when someone checks if you’re following proper procedures. It’s like showing your teacher that you did your homework and saved it safely. It keeps you out of trouble and shows you’re doing things the right way.

1.9 Version Control

- Sometimes you change a file and want to go back to an older version. Version control means the cloud saves different copies of your files over time. If you mess something up, you can go back and fix it. It’s like having drafts of an essay saved so you can return to the one before you made a mistake. This makes working easier and safer, especially when you’re editing important things or sharing files.

1.10 Peace of Mind

- When you know your data is safe and easy to restore, you feel more relaxed. That’s called peace of mind. With cloud backups, you don’t have to worry about losing everything to a mistake, accident, or hack. You can focus on your work or projects without fear. It’s like wearing a seatbelt—you hope you won’t need it, but it’s always there to protect you. That security lets you work better and think clearly.

2. Challenges of Manual Backups

Manual backups take a lot of time and are easy to mess up. You might forget to back up or do it wrong. They’re also hard to manage as your data grows—they just don’t scale well. You’ll spend more time and still risk losing important files. It’s not the best way to keep your data safe. Key challenges of manual backup processes include:

2.1 Human Error

- When you do backups by hand, it’s easy to make mistakes. You might forget to run them, type the wrong command, or mess up the settings. Even one small error can lead to only part of your files getting saved, or you might overwrite something important. Some widely quoted industry figures suggest that up to 60% of backups are incomplete and that as many as half of restores fail. Whatever the exact numbers, that’s a big risk when you need your data back the most.

2.2 Inconsistency

- Manual backups often don’t follow a set schedule. You or your teammates might do them at random times or forget altogether. If you add a new server and don’t document it, it might never get backed up. These gaps add up, and you could lose important files. It’s like doing homework on random days—you’ll miss something sooner or later. A backup system without consistency is not safe or reliable in the long run.

2.3 Resource Contention

- When you manually copy big files, like a database, it can use a lot of your network speed and computer power. That might slow down your main systems. If you try to do backups only when no one’s working, you might rush and miss something. This pressure causes incomplete backups or skipped steps. Just like trying to finish an essay right before the bell rings, backups suffer when there’s not enough time or resources.

2.4 Lack of Visibility

- If you don’t use a dashboard or alerts, it’s hard to know if your backup actually worked. You might assume it’s done, but it could have failed without you noticing. With no logs or notifications, mistakes stay hidden until you try to recover your data—and by then, it’s often too late. It’s like thinking you turned in your homework, but it never uploaded. You won’t know there’s a problem until you need a solution.

2.5 Scalability

- As your system grows, doing backups by hand becomes too much. Managing backups for hundreds of servers or containers is almost impossible manually. You’ll fall behind, miss things, and lose control. Automation solves this by handling large workloads for you. It’s like trying to water a whole field with a single cup—it doesn’t work. You need tools that scale with your system so you can keep everything safe without getting overwhelmed.

Summary

- In short, doing manual backups means you have to remember everything yourself, which leads to mistakes and a lot of extra work. It’s easy to forget steps, miss files, or get overwhelmed as your system grows. But when you use automation, backups happen consistently and correctly without you doing it all by hand. It also scales with your data, so even as things get bigger, your backups stay strong. It saves you time and reduces stress.

3. Benefits of Backup Automation

When you use backup automation in the cloud, you save time, avoid mistakes, and keep your data safe without extra effort. Backups run automatically, so you don’t have to remember them. They stay consistent, reliable, and can grow with your system. It’s an easy way to protect your files and avoid the stress of doing everything yourself.

3.1 Consistency and Reliability

- With automated backups, you don’t have to remember when to save your data. Backups happen on a set schedule, like every day at 2 AM. You just “set it and forget it.” This way, nothing is missed, and everything stays backed up without extra effort. It’s like having your phone automatically save photos to the cloud every night—you know it’s happening, even when you’re not paying attention. That makes everything more reliable and consistent.

3.2 Time and Bandwidth Efficiency

- Automated backups don’t have to copy everything every time. Many tools use incremental backups, which means only data that changed since the last backup is saved. This saves time, uses less internet bandwidth, and needs less storage space. Some tools also manage periodic full backups in the background so you don’t have to worry about it. It’s like updating only the changed pages in a book instead of reprinting the whole thing: faster, smarter, and easier to manage over time.
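Here’s a minimal Python sketch of the incremental idea, assuming a simple approach where each file’s SHA-256 digest is compared with a manifest saved by the previous run. The function names (`file_digest`, `changed_files`) and the manifest format are made up for this example; real backup tools usually track changes in more sophisticated ways.

```python
import hashlib
from pathlib import Path


def file_digest(path: Path) -> str:
    """Return the SHA-256 hex digest of a file, read in chunks."""
    h = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(65536), b""):
            h.update(chunk)
    return h.hexdigest()


def changed_files(root: Path, previous: dict) -> tuple:
    """Compare the files under root with the manifest from the last run.

    Returns (paths that are new or modified, new manifest to save).
    The manifest maps a relative path to its digest (an assumed format).
    """
    current = {}
    for path in sorted(root.rglob("*")):
        if path.is_file():
            current[path.relative_to(root).as_posix()] = file_digest(path)
    # Only files whose digest differs from last time need to be copied.
    to_copy = [name for name, digest in current.items()
               if previous.get(name) != digest]
    return to_copy, current
```

On the first run you pass an empty manifest, so every file is copied. On later runs only the files whose contents changed show up in the list.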

3.3 Scalability

- As your system grows, automation helps you manage more servers without extra work. Cloud tools can automatically detect new resources and back them up. You don’t have to set it up every time. Whether it’s one server or a thousand, automation handles it. It’s like having a robot that cleans every room you add to your house without being told—it just knows. That makes scaling your systems much easier and more reliable.

3.4 Fast Recovery

- If something crashes, you can recover fast with cloud automation. You can quickly launch a backup image or restore a copy of your files. Cloud platforms like AWS or Google Cloud let you do this in just a few clicks. It’s even better with infrastructure-as-code, which can rebuild the surrounding environment automatically. It’s like restoring your phone from a backup: you keep everything up to the last backup, and you’re back up and running quickly, without having to redo all your setup.

3.5 Cost Optimization

- Cloud services let you save money with automated backup policies. Old backups can be moved to cheaper storage, and expired backups are deleted automatically. You don’t have to do it yourself. This reduces waste and keeps costs low. It’s like moving old school files into a cheaper storage bin instead of your main folder—clean, organized, and affordable. Automation makes sure your backup system stays smart and cost-effective without extra effort.

3.6 Security and Compliance

- Automated backups follow security rules every time. They can apply encryption and follow retention policies to meet legal or industry standards. Some tools even make unchangeable backups that meet strict compliance needs. Logs and reports are created for audits, too. It’s like locking your files in a safe that reports its status every day. You don’t have to remember every rule—the system does it for you, keeping your data safe and legal.

3.7 Centralized Monitoring

- With automation, you can monitor everything in one place. You get alerts if a backup fails, so you’re not guessing or checking everything by hand. Tools like CloudWatch or Azure Monitor help you track backup jobs easily. It’s like getting a text if your assignment wasn’t submitted—better to know right away than find out later. This keeps your backups trustworthy and saves time checking each task manually.

3.8 Freeing up Staff

- When backups run automatically, you and your team don’t have to spend time doing boring, repeated tasks. You can focus on important projects, like planning or improving systems. It’s like using a dishwasher instead of washing each plate by hand—you get time back. By removing manual steps, automation makes your work easier, smarter, and less stressful. Your team works better when they’re not stuck doing the same thing every day.

3.9 DevOps Integration

- You can include backup settings in your code, so they’re part of your system setup. Backups can run during deployments using tools like Terraform or AWS scripts. This means your backups are version-controlled and fully automated. It’s like saving your school project steps in a file so you can redo it exactly later. With this setup, backups are smooth, repeatable, and always up to date—just like the rest of your code.

3.10 Data Integrity Checks

- Cloud backup tools often check your files to make sure they’re still complete and uncorrupted. They store multiple copies and use checksums to spot errors. This gives you confidence that your data will work when you restore it. It’s like double-checking your essay to make sure nothing’s missing. You don’t want to restore a backup and find out it’s broken, and these checks make that far less likely.
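To make the checksum idea concrete, here’s a small Python sketch. It assumes a SHA-256 digest is recorded when the backup is taken and compared again later; the helper names (`verify_backup`, `first_intact_copy`) are invented for this example and don’t come from any specific backup tool.

```python
import hashlib
from typing import Optional


def sha256_hex(data: bytes) -> str:
    """Digest recorded alongside the backup when it is created."""
    return hashlib.sha256(data).hexdigest()


def verify_backup(data: bytes, expected_digest: str) -> bool:
    """True when the stored bytes still match the recorded digest."""
    return sha256_hex(data) == expected_digest


def first_intact_copy(copies: list, expected_digest: str) -> Optional[int]:
    """Return the index of the first copy that passes the check, or None."""
    for index, data in enumerate(copies):
        if verify_backup(data, expected_digest):
            return index
    return None
```

If one copy is damaged, the second function shows why keeping several copies helps: you simply fall back to the next one that still matches.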

Summary

When you automate backups, you make sure your data is always protected, even as your system grows fast. You avoid quiet failures, where something breaks but no one notices. With automation, you don’t have to guess—your backups are always there. It gives you confidence that your data is safe, no matter what happens behind the scenes.

4. Key Features to Look for in Backup Tools

When picking a backup tool, look for features that keep your data safe, make restores easy, and automate routine work. Make sure it supports scheduling, encryption, alerts, and scalability. It should work with your system and grow as your needs grow. A good tool also gives you reports and logs, so you always know your backups are working right.

4.1 Flexible Scheduling & Retention

- You should be able to set custom schedules for your backups—like every night or once a week—and decide how long to keep them. This is called retention. Maybe you want daily backups for 30 days or monthly ones for a year. A good tool lets you adjust these settings easily. It’s like choosing how often to save your game and how many save files you want to keep. You’re always in control.
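A retention rule like “daily backups for 30 days, monthly ones for a year” can be sketched in a few lines of Python. This is only an illustration under simple assumptions: backups are identified by their date, and the “monthly” copy is the earliest backup of each month. The function name `select_backups_to_keep` is made up for this example.

```python
from datetime import date, timedelta


def select_backups_to_keep(backup_dates, today, daily_days=30, monthly_days=365):
    """Return the sorted backup dates a daily + monthly policy would keep."""
    keep = set()
    first_of_month = {}
    for d in sorted(backup_dates):
        age = (today - d).days
        if age < 0:
            continue  # ignore anything dated in the future
        if age <= daily_days:
            keep.add(d)  # recent enough to keep every daily backup
        if age <= monthly_days:
            # Earliest backup seen for this (year, month) is the monthly copy.
            first_of_month.setdefault((d.year, d.month), d)
    keep.update(first_of_month.values())
    return sorted(keep)
```

Anything not in the returned list is a candidate for deletion, which is the part a real tool would do for you on a schedule.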

4.2 Broad Platform Coverage

- Your backup tool should work with everything you use—cloud servers, databases, containers, and even on-premises machines. Some tools only work with one platform, like AWS or Google Cloud, but others (like Veeam or Bacula) cover multiple platforms. This way, you don’t need different tools for each system. It’s like using one charger for all your devices—it’s simpler, saves time, and helps you manage everything in one place.

4.3 Centralized Management

- Look for a tool that lets you manage all your backups in one place. A dashboard or API that controls everything makes it easier to track your backups and apply the same rules to every system. For example, AWS Backup can apply backup policies to all your accounts automatically. This means you won’t forget any important files. It’s like having a central planner for your schoolwork—it keeps everything organized.

4.4 APIs and CLI

- A good backup tool should let you automate things using APIs (Application Programming Interfaces) or CLI (Command Line Interface). This means you can write scripts to run backups, set schedules, or connect backups to your CI/CD pipeline. If you use infrastructure-as-code, you can include backup plans in your code, too. It’s like writing one command to do your chores for the whole week—fast and repeatable.

4.5 Application-Consistent Backups

- If you’re backing up things like databases or programs, the tool needs to make application-consistent backups. That means it waits for the app to be in a safe state before backing up, so nothing breaks. Features like VSS (Volume Shadow Copy Service) on Windows make this possible. Without this, you could end up with a backup that doesn’t work. It’s like taking a photo of someone mid-blink: it’s better to wait for the right moment.

4.6 Deduplication & Compression

- These features help save storage space and bandwidth. Deduplication removes repeated data, and compression shrinks files. Together, they make backups smaller and faster. This means you can store more without paying for extra space or slowing down your network. It’s like packing your suitcase smartly—you fit more stuff without adding weight. You’ll spend less on storage and still keep everything backed up.
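Here’s a rough Python sketch of how deduplication and compression can work together. It assumes fixed-size chunks keyed by their SHA-256 digest and compressed with zlib; real tools often use smarter, content-defined chunking, and the names `store_chunks` and `restore` are just placeholders for this example.

```python
import hashlib
import zlib


def store_chunks(data: bytes, store: dict, chunk_size: int = 4096) -> list:
    """Split data into chunks, store each unique chunk once, compressed.

    Returns the "recipe": the ordered list of chunk keys needed to rebuild data.
    """
    recipe = []
    for start in range(0, len(data), chunk_size):
        chunk = data[start:start + chunk_size]
        key = hashlib.sha256(chunk).hexdigest()
        if key not in store:  # deduplication: identical chunks are stored once
            store[key] = zlib.compress(chunk)  # compression shrinks each chunk
        recipe.append(key)
    return recipe


def restore(recipe: list, store: dict) -> bytes:
    """Rebuild the original bytes from the recipe and the chunk store."""
    return b"".join(zlib.decompress(store[key]) for key in recipe)
```

If the same chunk appears in many backups, it still takes up space only once in the store, which is where most of the savings come from.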

4.7 Immutable/WORM Storage

- With immutable storage, backups can’t be changed or deleted until their time is up. This protects you from ransomware and other attacks. It’s also called WORM (Write Once, Read Many). Once a backup is saved, no one can mess with it. It’s like sealing your notes in a locked box until exam day—safe from accidents, theft, or anyone trying to cheat the system.
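The behavior described above can be modeled with a tiny in-memory example. This Python sketch is not how any cloud provider implements immutability; it only shows the rule: an object can be written once and can’t be deleted until its retention period ends. `WormVault` and `RetentionLockError` are hypothetical names.

```python
from datetime import datetime, timedelta


class RetentionLockError(Exception):
    """Raised when a write or delete would break the retention lock."""


class WormVault:
    """In-memory stand-in for a vault with write-once, time-locked objects."""

    def __init__(self):
        self._objects = {}  # name -> (data, retain_until)

    def put(self, name: str, data: bytes, retain_days: int, now: datetime) -> None:
        if name in self._objects:
            raise RetentionLockError(f"{name} already exists and cannot be overwritten")
        self._objects[name] = (data, now + timedelta(days=retain_days))

    def get(self, name: str) -> bytes:
        return self._objects[name][0]

    def delete(self, name: str, now: datetime) -> None:
        _, retain_until = self._objects[name]
        if now < retain_until:
            raise RetentionLockError(f"{name} is locked until {retain_until:%Y-%m-%d}")
        del self._objects[name]
```

Even an administrator calling `delete` early gets an error here, which is the property that makes this kind of storage useful against ransomware.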

4.8 Encryption

- Your backups should be encrypted when they’re stored and when they’re being sent. This keeps your data private and secure. Some tools even let you use your own encryption keys, so only you can unlock the backups. Think of it like putting your files in a locked digital vault with a key only you have. That way, even if someone finds it, they can’t open it.

4.9 Role-Based Access Control

- Not everyone should have full control of backups. With role-based access, you can give people specific permissions—like letting one person view backups, another person restore them, and someone else set policies. This keeps your system secure and avoids mistakes. It’s like a school giving different login rights to students, teachers, and admins—everyone has access only to what they need.

4.10 Monitoring and Reporting

- A good backup system sends you alerts if something fails and shows backup status on a dashboard. It also creates logs and compliance reports that help with audits. This way, you always know if backups are working. It’s like getting a message if your homework didn’t upload—you don’t want to find out too late. These features keep you aware and in control.

4.11 Scalability

- Your backup tool should work whether you have 10 files or 10,000. It should scale up as your systems grow. Cloud tools often handle this automatically, while self-managed ones should let you add more storage or servers easily. It’s like having a backpack that expands as you add books—you never run out of room, and you don’t need to switch tools just because you got bigger.

4.12 Fast Restore Options

- Sometimes you don’t need everything—just one file or a database table. A good tool lets you restore only what you need, fast. You should also be able to recover full systems if something crashes. It’s like being able to get just one missing assignment instead of redoing the whole semester. Quick, flexible restore options save time and reduce downtime.

4.13 Logging

- You need detailed logs of every backup and restore action. These help with troubleshooting, audits, and keeping track of what happened and when. If something fails, you can look at the log to see why. It’s like checking your revision history on a shared doc—you know who did what, and you can fix mistakes faster.

4.14 Cost Controls

- Backups can get expensive, so your tool should help you manage costs. Features like tiered storage, auto-archiving, and pay-as-you-go pricing help keep things affordable. You can also set rules to delete old backups or move them to cheaper storage. It’s like clearing old photos from your phone to free up space—you keep what’s important without overpaying for stuff you no longer need.

Summary

- When you make sure your backup tool has these features, your backups become more reliable, fast, and secure. You won’t have to worry about missing data or breaking rules. Everything works the way it should, even during a failure. It keeps your system safe and ready for any problem, while also meeting compliance and recovery needs.

5. Comparison of Top Backup Automation Tools

Below is a comparison of popular backup automation tools and services for cloud servers:

| Tool / Service | Type | Supported Cloud Platforms | Key Features (automation focus) |
|---|---|---|---|
| AWS Backup | Cloud-managed | AWS (multi-region, multi-account) | A centralized backup system for EC2, EBS, RDS, DynamoDB, EFS, and more; supports copying backups across accounts and regions; works with AWS Organizations to apply policies to the whole company; includes Vault Lock for making backups unchangeable; and lets you assign backup rules using tags on resources. |
| Azure Backup | Cloud-managed | Azure (VMs, SQL, Blob, Files) | Azure offers built-in data protection using the Recovery Services Vault, allowing you to set daily or weekly backup schedules. It stores your backups in a geo-redundant vault by default, ensuring extra safety. It also integrates with Azure Policy and Role-Based Access Control (RBAC), and supports automation through CLI and PowerShell for easier management. |
| Google Cloud Backup & DR | Cloud-managed | GCP (Compute Engine, GKE, etc.) | Google Cloud uses incremental persistent-disk snapshots to efficiently protect your VMs, bare-metal systems, file storage, and Kubernetes environments. It supports cross-project replication to keep data safe across locations, uses automated checksums to ensure data integrity, and provides centralized management so you can control everything from one place. |
| Veeam Backup & Replication | Proprietary SaaS/on-prem | AWS, Azure, GCP, VMware, Hyper-V, etc. | This solution offers agentless backup for multi-cloud virtual machines, using snapshot orchestration and an incremental-forever approach to save time and space. It includes built-in deduplication, works with object storage, and provides advanced restore options along with ransomware detection to keep your data secure and recovery-ready. |
| Bacula Enterprise | Open-source/Enterprise | AWS S3, Azure Blob, GCP Storage, On-prem | This backup tool supports policy-based full and incremental backups with global deduplication to reduce storage use. It’s designed for multi-tenant environments and works across a wide range of operating systems and applications, including VMware, Kubernetes, databases, and cloud VMs. You can manage it easily through a command line, API, or graphical interface (GUI). |
| Duplicity | Open-source CLI | Any (via S3, Azure, GCS, FTP) | This tool creates encrypted incremental backups using GPG for encryption and rsync for efficient syncing, making it perfect for Linux file systems. It can upload backups to the cloud or via SFTP, and it’s lightweight, easy to use, and fully scriptable for automation. |
| Restic | Open-source CLI | S3, Azure Blob, GCP, MinIO, etc. | This solution offers fast encrypted snapshots with content-addressable deduplication, making it efficient for saving space. It supports a wide range of backends and is commonly used in containerized cron jobs or scripts for automated backup processes. |
| Velero | Open-source (K8s) | AWS, Azure, GCP (Kubernetes) | This Kubernetes-native backup solution saves your cluster state, volumes, and configurations, with customizable scheduling. It stores backups in S3 or Blob storage and supports migrating workloads seamlessly between clusters, ensuring easy recovery and flexibility. |
| Rclone + Scripts | DIY open-source | AWS, Azure, GCP, many others | This flexible file sync tool is perfect for integrating into custom backup scripts and supports multiple protocols like S3, SFTP, and WebDAV. While it provides powerful syncing capabilities, it requires custom orchestration to fully automate and manage backup tasks. |

Summary

- Each backup option has its pros and cons. Cloud-native services are easy to use but are limited to a specific cloud. Third-party tools like Veeam and Bacula can work across multiple clouds and hybrid systems, but take more time to set up. Open-source tools are flexible and can save money, but they need more maintenance. When choosing, think about your environment’s scale, complexity, and compliance needs to pick the best solution that fits your situation.

6. Best Practices for Cloud Backup Automation

To keep cloud backups reliable, set automated schedules for regular backups, encrypt your data, and test your restore process so you can recover quickly. Use redundant storage to protect against failures, monitor backups for errors, and review compliance requirements regularly so your strategy stays aligned with industry standards. The following best practices cover each of these areas in more detail:

6.1 Set Clear RPO/RTO:

- Define your Recovery Point Objectives (RPO), which tell you how much data loss is acceptable, and Recovery Time Objectives (RTO), which set the acceptable downtime. This helps you determine how often backups should occur and how quickly you can recover. For critical databases, backups might need to happen every hour, while for less important systems like dev servers, backups can happen less frequently.
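The link between backup frequency and RPO is simple arithmetic, and a short Python sketch makes it explicit. It assumes that each backup captures the state at the moment it starts and only becomes usable once it finishes, so the worst case is one full interval plus the backup’s run time. The function names are made up for this example.

```python
from datetime import timedelta


def worst_case_data_loss(backup_interval: timedelta,
                         backup_duration: timedelta = timedelta(0)) -> timedelta:
    """Largest gap between the last usable backup's state and a failure."""
    return backup_interval + backup_duration


def meets_rpo(backup_interval: timedelta, rpo: timedelta,
              backup_duration: timedelta = timedelta(0)) -> bool:
    """True when the schedule keeps worst-case data loss within the RPO."""
    return worst_case_data_loss(backup_interval, backup_duration) <= rpo
```

For example, an hourly schedule fits a one-hour RPO only if the backup itself is close to instant; if each run takes ten minutes, you would need to back up more often to stay within the target.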

6.2 Resource Tagging & Policy Enforcement:

- Tag your important resources (e.g., Backup: Daily or Backup: Weekly) and enforce these tags using policies. Many tools, like AWS Backup, can automatically assign resources to backup plans based on tags. This ensures your backups follow a consistent schedule, making it easier to manage and track them, especially as your infrastructure grows.
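As an illustration of tag-based assignment, this Python sketch groups resources by the value of a `Backup` tag and lists the ones that have no tag at all. The resource dictionaries and the function name `assign_backup_plans` are assumptions for this example; a managed service does the matching for you, but the logic is similar.

```python
def assign_backup_plans(resources: list, tag_key: str = "Backup") -> tuple:
    """Group resource IDs by backup tag value and collect untagged ones.

    Each resource is a dict like {"id": "...", "tags": {"Backup": "Daily"}}
    (an invented shape for this sketch).
    """
    plans = {}
    untagged = []
    for resource in resources:
        value = resource.get("tags", {}).get(tag_key)
        if value is None:
            untagged.append(resource["id"])  # would never be backed up
        else:
            plans.setdefault(value, []).append(resource["id"])
    return plans, untagged
```

The untagged list is the useful part for policy enforcement: anything that lands there is a resource nobody has decided how to protect yet.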

6.3 Isolated Backup Accounts:

- Store your backups in a separate cloud account or project from your production environment. This limits the risk of data loss or compromise if your production environment is attacked. Ensure you apply strict Identity and Access Management (IAM) policies and network controls to protect these backup accounts and keep them secure.

6.4 Immutable Backups:

- Enable immutable backups (WORM: Write Once, Read Many) to prevent backups from being deleted or altered. Services like AWS Backup Vault Lock and Azure immutable blob storage ensure that backups remain intact, even if there’s a cyberattack or accidental deletion, offering strong protection against ransomware or human errors.

6.5 Encryption Everywhere:

- Use strong encryption for all your backups, both in transit and at rest. Many cloud services encrypt data automatically, but you can use customer-managed keys (CMKs) when you need extra control. Regularly rotate your encryption keys and secrets to maintain the highest level of security.

6.6 Least-Privilege Access:

- Always grant the minimum permissions necessary for backup tasks. For example, create a backup operator role that can only create or read backups, but not manage production servers. Regularly rotate credentials and enable Multi-Factor Authentication (MFA) to secure sensitive actions like deleting or modifying backups.

6.7 Automate Verification:

- Regularly schedule restore tests to ensure your backup system is working properly. Automating this process allows you to verify the integrity of your backups by restoring a sample file or VM. This ensures that your backup system works as expected and that you can recover data quickly when needed.
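A restore test can be as small as “restore one sample file and check its checksum.” The Python sketch below shows that shape. The `restore` argument stands in for whatever your backup tool provides (it’s a hypothetical callable that takes a sample name and a target path), and `run_restore_test` is a name invented for this example.

```python
import hashlib
import tempfile
from pathlib import Path
from typing import Callable


def digest(path: Path) -> str:
    """SHA-256 hex digest of a file's contents."""
    return hashlib.sha256(path.read_bytes()).hexdigest()


def run_restore_test(restore: Callable[[str, Path], object],
                     sample: str, expected_digest: str) -> bool:
    """Restore one sample into a scratch directory and verify its checksum."""
    with tempfile.TemporaryDirectory() as scratch:
        target = Path(scratch) / Path(sample).name
        try:
            restore(sample, target)  # hypothetical hook into your backup tool
        except Exception:
            return False  # a restore that errors out counts as a failed test
        return target.is_file() and digest(target) == expected_digest
```

Run on a schedule, a check like this turns “we think the backups work” into something you can actually see pass or fail.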

6.8 Monitoring and Alerts:

- Set up alerts for backup job failures or missed schedules. Use tools like CloudWatch Alarms or Azure Alerts to notify you instantly if a backup fails. Monitoring and receiving alerts help catch problems early, ensuring your backups remain consistent and reliable.
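To show what an alert rule checks, here’s a small Python sketch that scans a list of job records for failures and for resources with no recent successful backup. The record shape (`resource`, `status`, `finished`), the status strings, and the 26-hour threshold are all assumptions for this example; a monitoring service would evaluate similar conditions for you.

```python
from datetime import datetime, timedelta


def find_backup_alerts(jobs, now, max_age=timedelta(hours=26)):
    """Return (resource, reason) pairs for failed jobs and stale backups."""
    alerts = []
    latest_success = {}
    for job in jobs:
        if job["status"] == "FAILED":
            alerts.append((job["resource"], "failed"))
        elif job["status"] == "COMPLETED":
            previous = latest_success.get(job["resource"])
            if previous is None or job["finished"] > previous:
                latest_success[job["resource"]] = job["finished"]
    # A resource is "stale" when its newest success is older than max_age,
    # which also catches schedules that silently stopped running.
    for resource in sorted({job["resource"] for job in jobs}):
        last = latest_success.get(resource)
        if last is None or now - last > max_age:
            alerts.append((resource, "stale"))
    return alerts
```

The “stale” check matters as much as the “failed” one, because a job that never starts doesn’t produce a failure to alert on.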

6.9 Lifecycle Management:

- Implement automated retention and lifecycle rules to manage old backups. For example, move backups older than 90 days to cheaper storage like Glacier or delete them after 2 years. This helps control backup storage costs and prevents unnecessary bloat by keeping only what’s necessary.
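The 90-day and 2-year example above boils down to a simple age check. This Python sketch shows the decision a lifecycle rule makes for each backup; the function name and the `keep` / `archive` / `delete` labels are made up for illustration, and 730 days is used as an approximation of two years.

```python
from datetime import date, timedelta


def lifecycle_action(created: date, today: date,
                     archive_after_days: int = 90,
                     delete_after_days: int = 730) -> str:
    """Decide what a lifecycle rule would do with a backup of this age."""
    age_days = (today - created).days
    if age_days > delete_after_days:
        return "delete"   # past the retention period
    if age_days > archive_after_days:
        return "archive"  # move to cheaper, colder storage
    return "keep"         # still in the regular storage tier
```

In practice you set these thresholds once in the provider’s lifecycle settings, and the service applies them to every backup automatically.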

6.10 Document Procedures:

- Keep runbooks for backup and restore procedures. These should include detailed instructions, contact information, and recovery steps. Store them with your infrastructure code so they are always up-to-date. This ensures your team can easily follow the correct steps during an emergency.

6.11 Group Related Data:

- For multi-tier applications, make sure to back up related systems together. For example, if you’re backing up a database, ensure that you’re also backing up the application server at the same time. This keeps your data consistent and makes restores easier by ensuring everything is aligned.

6.12 Capacity Planning:

- Monitor your backup storage usage and plan for growth. Use deduplication and compression to save storage space, and ensure that your backup solution can scale as your data grows. This prevents running out of space and ensures you can handle larger datasets in the future.

6.13 Compliance Audits:

- Regularly audit your backup setup to make sure it complies with relevant regulations, like GDPR or HIPAA. Use tools such as AWS Backup Audit Manager or Microsoft Purview Compliance Manager to help check that your backup processes meet legal and industry requirements.

6.14 Infrastructure-as-Code:

- Define your backup vaults, policies, and schedules in code (using tools like Terraform, ARM, or CloudFormation). This makes your backup setup repeatable and version-controlled, ensuring consistency across your infrastructure and making it easier to manage as your environment evolves.

6.15 Segregation of Duties:

- Where possible, separate roles for backup tasks. One person can set backup policies, while another can test or approve them. This separation reduces risks and ensures that no single person has too much control over the backup process, enhancing security and accountability.

6.16 Communication Plan:

- Have a communication plan for backup failures and disaster recovery (DR) events. Set up dedicated channels like a Slack channel or email list to notify the team immediately when something goes wrong. This way, the right people know who to contact and can act quickly.

6.17 Multi-Cloud DR:

- For added protection, consider replicating backups across multiple cloud providers. This ensures your backup data is safe even if one provider experiences a failure. While it might need custom tools, multi-cloud disaster recovery (DR) provides extra resilience and helps minimize downtime.

6.18 Role Review and MFA:

- Periodically review the IAM roles associated with backup tasks to ensure they still meet your needs. Enforce MFA for critical operations like deleting backups to add an extra layer of security. Regular role audits help maintain proper access control and protect against unauthorized actions.

6.19 Automation Training:

- Train your team on the backup tools and automated processes. By ensuring everyone understands the backup system, you help them respond faster during an incident. Regular training helps avoid mistakes and ensures that backup tasks run smoothly and effectively during a disaster.

6.20 Regular Audits:

- Conduct regular backup audits to review your backup configurations. Ensure new critical systems are added to the backup plan and old systems are removed. As your infrastructure changes, it’s important to adjust policies and ensure that backups remain aligned with your evolving needs.

Summary

By following these practices, your automated backups become a strong and trusted part of your cloud strategy, not just something you remember last minute. You make sure backups happen by policy, not by chance. This gives you peace of mind, knowing your data is safe, easy to recover, and always protected.

7. CLI and Configuration Examples

- Many backup tasks can be done using scripts, command-line tools, or APIs. This lets you automate your work and save time. For example, you can schedule backups, check logs, or restore files using simple commands. This makes your backup process faster, more reliable, and easier for you to control and manage. Example:

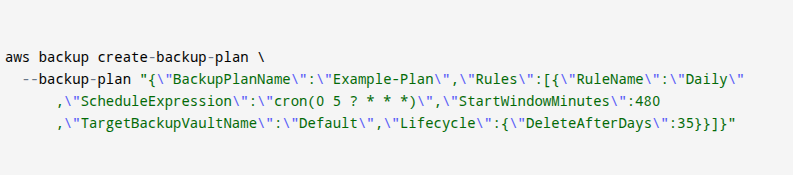

7.1 AWS CLI:

- Use the “aws backup” commands. For example, create a backup plan with a daily rule:

- This command helps you create a backup plan in AWS using the command line. You give your plan a name (Example-Plan) and tell it to run daily at 5 AM using a cron schedule. You set a backup window of 8 hours (480 minutes), store backups in the Default vault, and tell AWS to delete backups after 35 days. You use this to automate your backups and make sure they happen on time without missing anything.
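The plan described here can also be written out as data. The sketch below builds the JSON document that `aws backup create-backup-plan --backup-plan` accepts and prints the resulting command without running it. The rule name `DailyBackups` is a placeholder, and mapping the 8-hour window to `StartWindowMinutes` is an assumption based on the description, so check both against your own setup.

```python
import json
import shlex

# Values taken from the description above; the rule name is a placeholder.
backup_plan = {
    "BackupPlanName": "Example-Plan",
    "Rules": [
        {
            "RuleName": "DailyBackups",                 # hypothetical rule name
            "ScheduleExpression": "cron(0 5 ? * * *)",  # every day at 05:00
            "StartWindowMinutes": 480,                  # 8 hours (assumed field)
            "TargetBackupVaultName": "Default",
            "Lifecycle": {"DeleteAfterDays": 35},
        }
    ],
}

plan_json = json.dumps(backup_plan)
command = ["aws", "backup", "create-backup-plan", "--backup-plan", plan_json]

# Print a copy-pasteable shell command instead of running it.
print(shlex.join(command))
```

Keeping the plan as a dictionary makes it easy to store in version control and review changes before they reach AWS.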

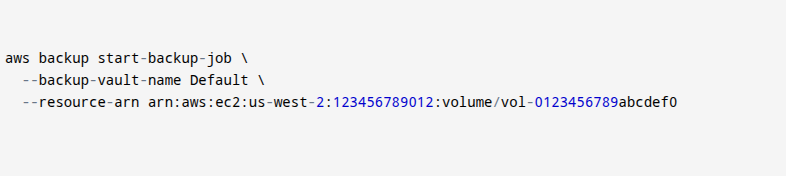

- Trigger a backup job for a resource (e.g., an EBS volume):

- This command lets you start a backup job in AWS from the command line. You’re telling AWS to back up a specific resource, like a storage volume (shown by the long ARN). The backup will be stored in the Default vault. You use this when you want to manually back up something important right away. It gives you control and makes sure your data is safe, even if your usual schedule hasn’t run yet.

Note:

- You can also list jobs (aws backup list-backup-jobs) or describe vaults via CLI to integrate into scripts or CI/CD pipelines.

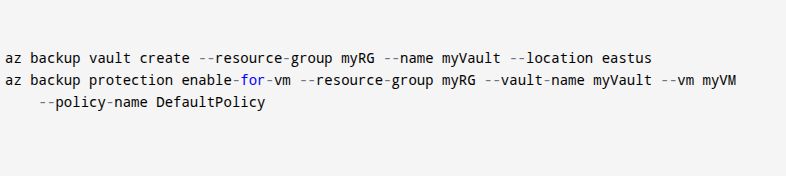

7.2 Azure CLI / PowerShell:

- This code helps you set up backups in Microsoft Azure using the command line. The first line creates a backup vault called myVault in the eastus region inside a resource group named myRG. The second line turns on backup protection for a virtual machine (VM) named myVM, using the DefaultPolicy. You use this to automate VM backups, keep your data safe, and make sure your virtual machine is always protected without doing it manually.

Note:

- In PowerShell, similar commands (New-AzRecoveryServicesVault, Enable-AzRecoveryServicesBackupProtection) are available. You can then start immediate backups with az backup protection backup-now or schedule via policy.

7.3 GCP gcloud:

- This command helps you create a snapshot in Google Cloud using the command line. You are making a backup copy called snapshot-01 from a disk named my-disk that’s located in the us-central1-a zone, and you choose the STANDARD snapshot type, which is the regular option (the alternative, ARCHIVE, is meant for long-term retention). You use this to protect your data, so if something goes wrong, you can restore your disk. This makes your backup process easier and more reliable without needing to do everything by hand.

Note:

- This takes a snapshot of my-disk in the specified zone. For the Backup & DR service, you can use gcloud commands or REST APIs to create backup plans and schedules. GCP also supports exporting snapshots to other projects for cross-account backups.

7.4 Restic (open-source example):

- This command helps you use Restic, a backup tool, to back up files from your app. It tells Restic to save everything inside the folder /var/lib/myapp to an S3 bucket at s3.amazonaws.com/mybucket/backups. The -r flag tells it where to store the backup. You use this command to make sure your app’s data is safe, encrypted, and stored in the cloud, so you can restore it later if something goes wrong.

7.5 Velero (Kubernetes example):

- This command uses Velero, a tool for backing up Kubernetes clusters. It tells Velero to create a schedule named daily-backup that runs a backup every day at 2 AM using a cron expression. You use this to make sure your Kubernetes data, like volumes and configurations, is saved automatically. This helps protect your apps and settings so you can restore everything if needed. It makes your backup process easier, more reliable, and fully automated.

Summary

- These examples show how you can turn backup tasks into code. By putting these commands into scripts or Infrastructure as Code (IaC) templates, your backups become repeatable and easy to track. This means you can run the same backup anytime, and others can see what you did, making your backups more organized and reliable.
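As one way to put the Restic and Velero commands from 7.4 and 7.5 into a script, here is a minimal Python sketch. The helper names are hypothetical, and it runs in dry-run mode by default, so it only prints what it would execute. It assumes restic and velero are installed and that credentials (for example the Restic repository password and the S3 keys) are supplied through the environment.

```python
import shlex
import subprocess


def restic_backup_cmd(repo, path):
    """Build the restic command that backs up `path` into repository `repo`."""
    return ["restic", "-r", repo, "backup", path]


def velero_schedule_cmd(name, cron):
    """Build the Velero command that creates a recurring backup schedule."""
    return ["velero", "schedule", "create", name, "--schedule", cron]


def run(cmd, dry_run=True):
    """Print the command; execute it only when dry_run is False."""
    print(shlex.join(cmd))
    if not dry_run:
        subprocess.run(cmd, check=True)


commands = [
    restic_backup_cmd("s3:s3.amazonaws.com/mybucket/backups", "/var/lib/myapp"),
    velero_schedule_cmd("daily-backup", "0 2 * * *"),
]
for cmd in commands:
    run(cmd)  # dry run: prints the command only
```

Building each command as a list rather than a single string avoids shell-quoting mistakes, which matters for arguments like the cron expression that contain spaces and asterisks.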

8. Security and Compliance Considerations

- Your backups hold important and private data, so keeping them secure is very important. You need to use strong encryption, set the right permissions, and follow compliance rules like HIPAA or GDPR. If you don’t protect backups, hackers or mistakes can cause data loss. Always treat backups like real data: lock them down and check them often.

8.1 Data Encryption:

- You must encrypt your backups so no one can read them without permission. This means protecting your files both when they’re stored (at rest) and while they’re being sent (in transit). Manage keys with a cloud key management service (KMS) or a hardware security module (HSM), and rotate them regularly. Protect the keys themselves too: if you lose them, you might never get your data back.

8.2 Access Control:

- You should give people only the minimum access they need, called least privilege. For example, you can create special roles that are only allowed to back up or restore data, but nothing else. Avoid using admin accounts for backup tasks. If possible, store backups in separate cloud accounts or projects to improve safety.

8.3 Immutable Backups:

- Use write-once-read-many (WORM) settings to make sure no one can delete or change your backups. Services like AWS Backup Vault Lock or Azure immutable storage can do this. This is super helpful if someone accidentally deletes something, or if ransomware tries to ruin your files.

8.4 Logging and Auditing:

- Always turn on logging to track who does what with your backups. Tools like AWS CloudTrail or Azure Activity Logs help you see if someone tried to delete or restore something. Check these logs regularly to catch bad behavior before it becomes a big problem.

8.5 Compliance Frameworks:

- If you work with sensitive info, your backups must follow rules and laws like HIPAA, GDPR, or PCI-DSS. Services like AWS Backup Audit Manager and Azure Policy help you prove that you’re following these laws. This keeps you out of trouble and builds trust.

8.6 Secure Storage:

- Always store backups in places that are locked down and private. Use bucket policies to restrict access, and add versioning or Object Lock to prevent tampering. Some services can also require MFA (multi-factor authentication) before a backup is deleted, which adds extra security.

8.7 Data Residency:

- You must keep backups in approved locations, especially if your data is covered by local laws. Some countries require that your info stay within their borders. You should also tag your backups so you know what kind of data they hold and where they can be stored.
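Once backups are tagged, a residency check is straightforward to automate. The sketch below assumes each backup record carries a classification tag and a region. The classifications, region lists, and backup IDs are all made up for illustration.

```python
# Hypothetical policy: which regions each data classification may be stored in.
ALLOWED_REGIONS = {
    "eu-personal": {"eu-west-1", "eu-central-1"},
    "public": {"eu-west-1", "eu-central-1", "us-east-1"},
}


def residency_violations(backups):
    """Return the IDs of backups stored outside the regions allowed for their tag."""
    violations = []
    for backup in backups:
        # Unknown or missing classifications get an empty allow-list,
        # so untagged backups are always flagged for review.
        allowed = ALLOWED_REGIONS.get(backup["classification"], set())
        if backup["region"] not in allowed:
            violations.append(backup["id"])
    return violations


backups = [
    {"id": "bk-001", "classification": "eu-personal", "region": "eu-west-1"},
    {"id": "bk-002", "classification": "eu-personal", "region": "us-east-1"},
    {"id": "bk-003", "classification": "untagged", "region": "eu-west-1"},
]
print(residency_violations(backups))
```

Treating unknown tags as violations is a deliberate choice here: a backup nobody has classified is safer flagged than silently accepted.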

8.8 Key and Credential Rotation:

- Rotate your credentials and encryption keys regularly so that a leaked secret stops working quickly. Use secret managers like AWS Secrets Manager or Azure Key Vault to store your sensitive data. This way, your backups stay safe, and you don’t lose control of your access settings.
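A simple age check can tell you which secrets are overdue. This is a sketch only: the 90-day limit, the key names, and the dates are assumptions, and in practice the creation dates would come from your secret manager.

```python
from datetime import date, timedelta


def keys_due_for_rotation(keys, today, max_age_days=90):
    """Return the names of keys created max_age_days or more before `today`."""
    cutoff = today - timedelta(days=max_age_days)
    return sorted(name for name, created in keys.items() if created <= cutoff)


# Hypothetical keys with their creation dates.
keys = {
    "backup-kms-key": date(2024, 1, 1),
    "restic-repo-password": date(2024, 5, 20),
}
print(keys_due_for_rotation(keys, today=date(2024, 6, 1)))
```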

8.9 MFA for Critical Ops:

- Add multi-factor authentication (MFA) when doing super important things like deleting backups or changing rules. This means you need a second confirmation, like a code from your phone, before anything dangerous happens. It adds a human checkpoint to stop mistakes or attacks.

8.10 Offline/Long-Term Storage:

- For very important data, you should keep an offline copy. That means storing backups away from the internet, like on tapes or in cold storage. This protects you if your cloud system crashes or is attacked.

8.11 Immutable Ledger:

- If you’re in a high-security setting, use blockchain or ledger systems to timestamp your backups. These create records that can’t be changed, so you know exactly what happened and when. It’s useful if you ever need proof during an investigation.
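The core idea behind such a ledger is a hash chain: each record includes the hash of the one before it, so changing any earlier record invalidates everything after it. The sketch below is a simplified stand-in for a real ledger service, not a blockchain. It only makes tampering detectable, and the function names and event fields are hypothetical.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def entry_hash(event, prev_hash):
    """Hash an event together with the hash of the previous entry."""
    payload = json.dumps({"event": event, "prev": prev_hash}, sort_keys=True)
    return hashlib.sha256(payload.encode()).hexdigest()


def append_entry(ledger, event):
    """Append an event, linking it to the hash of the previous entry."""
    prev_hash = ledger[-1]["hash"] if ledger else GENESIS
    ledger.append({"event": event, "prev": prev_hash,
                   "hash": entry_hash(event, prev_hash)})


def verify(ledger):
    """Recompute every hash; any edited or reordered entry breaks the chain."""
    prev_hash = GENESIS
    for entry in ledger:
        if entry["prev"] != prev_hash or entry["hash"] != entry_hash(entry["event"], prev_hash):
            return False
        prev_hash = entry["hash"]
    return True


ledger = []
append_entry(ledger, {"backup": "orders-db", "time": "2024-01-01T02:00:00Z"})
append_entry(ledger, {"backup": "orders-db", "time": "2024-01-02T02:00:00Z"})
print(verify(ledger))  # chain is intact

ledger[0]["event"]["time"] = "2023-12-31T02:00:00Z"  # simulate tampering
print(verify(ledger))  # tampering is detected
```

For this to hold up as evidence, the latest hash has to be stored somewhere the person editing the ledger cannot reach, which is the part a managed ledger or blockchain provides.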

8.12 Regular Audits:

- You need to check your backup setup regularly. Make sure everything important is protected and that no one has changed the rules without permission. If you can, automate these checks so you don’t miss anything.
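One check that is easy to automate is comparing the live backup settings with an approved baseline. The sketch below assumes both are available as simple dictionaries. The setting names and values are hypothetical, and real ones would come from your provider’s API or your IaC files.

```python
def policy_drift(approved, current):
    """Return {setting: (approved_value, current_value)} for every difference."""
    keys = set(approved) | set(current)
    return {
        key: (approved.get(key), current.get(key))
        for key in sorted(keys)
        if approved.get(key) != current.get(key)
    }


# Hypothetical settings for illustration.
approved = {"schedule": "cron(0 5 ? * * *)", "retention_days": 35, "vault": "Default"}
current = {"schedule": "cron(0 5 ? * * *)", "retention_days": 7, "vault": "Default"}

print(policy_drift(approved, current))
```

An empty result means the live policy matches the baseline. Anything else is a change that someone should be able to explain.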

8.13 Segregation of Duties:

- Don’t let one person do everything. Have different people set up backups and restore data. This way, you avoid conflicts of interest and add more security layers to the process.

8.14 Training:

- You and your team need to know how your backup system works. The better you understand it, the faster you can fix problems and recover data during a crisis. Practice helps you stay calm and ready when something goes wrong.

Summary

By using strong encryption, keeping backups in separate accounts, turning on immutable storage, and doing regular audits, you make sure your backups stay safe and follow the rules. These steps help you protect sensitive data and stay compliant with your school, company, or government’s security requirements. It’s about staying ready and responsible.

9. Disaster Recovery Planning and Testing

- Backups are just one part of your full Disaster Recovery (DR) plan. You need to think about how to recover fast, where to store extra copies, and how often to test everything. If a system crashes, you should be able to restore it quickly. A strong DR plan makes sure you’re always prepared for surprises. Here are some key points:

9.1 DR Strategy Definition

- You need a clear plan for what happens if something breaks. Decide where you’ll go next — maybe a backup cloud or region. Figure out how much downtime you can accept (RTO) and how much data loss you can tolerate (RPO). These help you decide how often to back up and how fast you must recover. If your system is super important, you’ll need frequent backups and quick restores.
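The link between these targets and your schedule is simple arithmetic, sketched below. It uses a simplified model in which the worst-case data loss equals one full backup interval, and the targets and timings are hypothetical.

```python
from datetime import timedelta


def meets_rpo(backup_interval, rpo):
    """Worst case, a failure happens just before the next backup runs,
    so the data lost equals one full backup interval."""
    return backup_interval <= rpo


def meets_rto(measured_restore_time, rto):
    """A restore meets the RTO if it finishes within the allowed downtime."""
    return measured_restore_time <= rto


# Hypothetical targets for a critical database.
rpo = timedelta(hours=1)
rto = timedelta(hours=4)

print(meets_rpo(timedelta(hours=24), rpo))    # daily backups vs a 1-hour RPO
print(meets_rpo(timedelta(minutes=30), rpo))  # 30-minute backups vs a 1-hour RPO
print(meets_rto(timedelta(hours=3), rto))     # 3-hour restore vs a 4-hour RTO
```

In this example a daily backup cannot satisfy a 1-hour RPO, so the schedule would need to tighten to hourly or better.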

9.2 Geographic Redundancy

- You should store backups in different regions to stay safe if a whole data center fails. This is called cross-region backup. For example, AWS can automatically copy backups to another region or account. Azure and Google Cloud let you restore backups somewhere else, too. This makes sure your data survives even if there’s a regional outage, like a storm or power failure.

9.3 Automated Recovery Workflows

- During a disaster, things move fast. You can use scripts or templates (like Terraform or CloudFormation) to automatically bring your systems back. These automated recovery steps help avoid mistakes and save time. It’s better than setting everything up manually when you’re stressed. This way, you can rebuild everything fast and be sure it’s done right.

9.4 Regular DR Drills

- You should test your disaster plan regularly. Run DR drills, like restoring backups in a test environment. Record how long the recovery took, compare it with your RTO, and note what went wrong. This helps you fix weak spots in your plan. Just like fire drills, practice helps you stay calm and act fast when something bad really happens.

9.5 Tiered Restores

- Not everything needs to come back at once. Start with your most important systems, like databases and core apps. Then restore less critical stuff later. This is called a tiered restore strategy. It helps you meet your business recovery goals. You bring back the stuff you need first, then focus on the rest.
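A restore order like this can be kept as data and turned into a runbook. Here is a minimal sketch, assuming each system has been assigned a tier number where lower means more critical. The system names and tiers are hypothetical.

```python
# Hypothetical systems; a lower tier number means restore earlier.
systems = [
    {"name": "reporting", "tier": 3},
    {"name": "orders-db", "tier": 1},
    {"name": "web-frontend", "tier": 2},
    {"name": "auth-service", "tier": 1},
]


def restore_order(systems):
    """Group system names by tier, most critical tier first."""
    tiers = {}
    for system in sorted(systems, key=lambda s: (s["tier"], s["name"])):
        tiers.setdefault(system["tier"], []).append(system["name"])
    return list(tiers.items())


for tier, names in restore_order(systems):
    print(f"Tier {tier}: {', '.join(names)}")
```

Systems in the same tier can usually be restored in parallel, while each tier waits for the one before it.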

9.6 Multiple Recovery Points

- It’s smart to keep several versions of your backups. If your latest one is bad (maybe hit by ransomware), you can use an older backup. Having multiple recovery points gives you options and helps avoid total data loss. It’s like having more than one save file in a video game.
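Picking the right version during an incident comes down to finding the newest recovery point taken before the damage started. The sketch below assumes you have the recovery point timestamps and an estimate of when the compromise happened. Both are hypothetical values here.

```python
from datetime import datetime


def latest_clean_point(recovery_points, compromised_at):
    """Return the newest recovery point taken before the compromise, or None."""
    clean = [p for p in recovery_points if p < compromised_at]
    return max(clean, default=None)


# Hypothetical daily recovery points (02:00 on March 1 to 5) and an
# estimated time of compromise.
points = [datetime(2024, 3, day, 2, 0) for day in range(1, 6)]
compromised_at = datetime(2024, 3, 3, 14, 30)

print(latest_clean_point(points, compromised_at))
```

If the function returns None, no retained recovery point predates the compromise, which is a sign that the retention period is too short for the threats you are planning for.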

9.7 Offline/Cold Backups

- For extra safety, keep one backup offline or off-site. That means it’s not connected to the internet, so hackers or malware can’t reach it. You could use a device like AWS Snowball or an encrypted external drive stored somewhere safe. This gives you a last resort if everything else fails.

9.8 Communication Plan

- You need a way to contact your team fast during a disaster. Set up alerts with tools like Slack, PagerDuty, or email. Your plan should include a contact list and who does what during an emergency. This makes sure everyone knows their job and helps your team stay organized and calm.

9.9 Audit Trails

- Keep a record of every backup and restore. These logs show who did what and when. After a disaster, they help you understand what went wrong. Audit trails are like evidence, and they also prove that your team followed the right steps during recovery.

9.10 Multi-Cloud DR

- For maximum protection, you can copy backups to a different cloud provider. If one provider has a major issue, you still have your data. This setup is called multi-cloud disaster recovery. It’s harder to set up and manage, but it protects you even if a whole cloud system goes down.

9.11 Infrastructure Recovery

- Some cloud providers offer tools to help recover whole systems. For example, AWS Elastic Disaster Recovery or Azure Site Recovery can spin up virtual machines and apps automatically. These services make restoring faster and reduce how much work you have to do during a disaster.

9.12 Post-Recovery Testing

- After restoring from a backup, you must test everything. Make sure the app works and all data is safe and complete. Whether it was a real disaster or just a drill, write down what worked and what didn’t. Then update your plan, so you’re even more ready next time.

Summary

- A good DR plan, supported by automated backups and regular tests, helps you bring back important services fast when something goes wrong. You stay prepared, avoid long downtime, and make sure your data is still safe. With a solid plan, you can recover quickly and keep things running smoothly, even after a big failure.

10. Future Trends in Cloud Backup Automation

10.1 AI-Driven Predictive Backups:

- You can use AI and machine learning to watch your systems and predict when something might go wrong, like a server crash or hardware failure. This lets your backup tools automatically save your data before the problem happens. You don’t need to wait for errors or do everything by hand. These predictive backups help you stay ahead, reduce downtime, and protect important data before it’s lost. It’s like having a smart assistant guarding your files.

10.2 Edge Computing Backups:

- If you collect data from IoT devices like sensors or cameras, they often sit far from data centers. With edge computing backups, your data gets saved in real time right at the edge, where it’s created. This protects your information even if the network goes down. It’s helpful when you use lots of small, smart devices. These backups keep your system safe, fast, and ready to recover without relying only on cloud storage far away.

10.3 Blockchain for Integrity:

- You can use blockchain to make sure no one tampers with your backup history. Every time a backup happens, it’s recorded in a decentralized ledger that no one can secretly change. This gives you a secure and traceable log of every backup event. It’s really helpful when you need to follow rules (like compliance laws) or prove that your backups haven’t been altered. With blockchain, you get strong data integrity and extra security confidence.

Conclusion

In today’s fast-paced world, automating your cloud backups isn’t just a smart move—it’s a must. You rely on data every single day, and one unexpected disaster—whether it’s hardware failure, cyberattack, or human error—can put everything at risk. That’s why you need to protect your files using automated cloud backup tools like AWS Backup, Duplicati, or Veeam. By following the best practices—like using encryption, keeping multiple copies, and regularly testing restores—you make sure your data stays safe, accessible, and fully recoverable. You also reduce manual work, cut costs, and sleep better knowing your information is backed up in a secure, policy-driven way.

Start simple: test out a free tool like Duplicati or try AWS Backup’s pay-as-you-go model. It takes only a few minutes to set up, and it gives you peace of mind for years to come. So don’t wait until something goes wrong. Take action today. Automate your backups, lock down your data, and make disaster recovery one less thing to worry about. Your future self will thank you.