In today’s cloud-first landscape, you’re expected to move faster, smarter, and more securely than ever before. Migrating workloads—from on-premises data centres to cloud environments or even between clouds—is no longer a simple lift-and-shift job. It’s a strategic transformation where every decision matters. Traditional manual methods often fall short, struggling to keep pace with the speed and complexity of modern infrastructure.

This is where event-driven automation makes a real difference. Instead of waiting on scheduled scripts or human intervention, your migration reacts as soon as a system trigger, user action, or infrastructure change occurs. By combining Infrastructure as Code (IaC) with cloud provider APIs, you can build pipelines that are reactive, resilient, and scalable, and that change how migration work gets done.

In this blog, you’ll explore how to architect these pipelines using proven DevOps practices, discover the tools that power them, and walk through real-world scenarios to see how automation can elevate your cloud strategy.

1. What Is Event-Driven Automation in Cloud Migration?

- Event-driven automation means your system performs tasks automatically when certain events happen. During a cloud migration, this lets you move data or adjust settings the moment a change is detected, such as a file being updated or a server going down. You don't have to do it manually; the system reacts for you, saving time and effort. In practice, this means:

a. Detecting a VM shutdown

- You can set up event-driven automation to detect when a Virtual Machine (VM) shuts down. This means your system watches for a signal that a VM has stopped. When this happens, it can automatically trigger actions like starting a backup VM, sending an alert, or reallocating resources. You don’t need to monitor it constantly—the system reacts for you. This ensures that your services stay available and that you know exactly when something needs attention.

b. Identifying a service endpoint going live

- When a new service endpoint goes live—meaning it becomes available to users—your system can detect this event. With event-driven automation, you can automatically update routing tables, inform monitoring tools, or even trigger security scans. You won’t have to remember to do all this manually. The system takes care of it the moment the endpoint is ready, helping you keep your cloud migration smooth and ensuring new services work as expected.

c. Recognizing a database being replicated

- If your database gets replicated—copied from one server to another—event-driven automation can recognize when this happens. This lets you automatically verify the copy, adjust load balancers, or alert your team that replication is complete. You don’t need to sit and wait. The system watches for the event and reacts instantly. This helps you make sure your data is safe, consistent, and ready to use during and after the cloud migration.

d. Reacting to IaC changes like Terraform plan/apply

- When you use Infrastructure as Code (IaC) tools like Terraform, you make changes to cloud infrastructure through code. When a Terraform plan or apply runs, event-driven automation can detect it and trigger related tasks—like updating documentation, running compliance checks, or notifying your team. You don’t have to manually follow up every time. This makes your migration process faster, safer, and more organized, helping you avoid errors and keep track of all infrastructure changes.

- Instead of using polling (constantly checking for changes) or running batch scripts on a schedule, your pipeline becomes reactive. This means it automatically triggers tasks the moment something in the system changes. You don’t have to wait or check manually—your system responds instantly, keeping your cloud migration faster, smarter, and more efficient.

1.1 Example Scenario

- Here’s an example scenario: Imagine your database backup just finished in your source cloud. With event-driven automation, this event can instantly trigger your pipeline to start provisioning new resources in the target cloud. It can also copy the data and configure the network—all by itself, with no need for you to step in. This helps your cloud migration move forward smoothly and quickly. You simply set the rules, and the system does the work.

2. Why Cloud Migration Needs Reactive Pipelines

2.1 Cloud migrations are often:

a. Multi-phased: Lift-and-shift first, then refactor

- During a cloud migration, you often move your system in multiple phases. First, you do a lift-and-shift—this means you move everything as it is, without changing much. After that, you refactor, which means you update and improve your apps to fit better in the cloud. Doing it in phases makes the process easier and less risky. You don’t have to move and change everything at once—you take it step by step.

b. Dependency-heavy: DBs, APIs, file systems, storage volumes

- Your system depends on many components, such as databases (DBs), APIs, file systems, and storage volumes. These are its dependencies, and during a cloud migration you must move them carefully so that everything keeps working together. If you miss one piece, the whole system might fail. Because migrations are so dependency-heavy, you need to track what connects to what and move components in the right order. Automation helps you manage these relationships consistently and with less stress.

c. Time-sensitive: Some services must migrate in defined windows

- Some parts of your system are time-sensitive—you can only move them during certain time windows. For example, a busy online store can’t go offline during the day, so you might migrate it at night. This means you have to plan your cloud migration very carefully. You must be ready to start and finish at exactly the right time. Event-driven automation helps here by making sure the right actions happen instantly, keeping you on schedule.
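A migration window can itself be checked by the pipeline: when an event arrives, the handler compares its timestamp with the allowed window and either proceeds or holds the work. The sketch below is a minimal illustration, assuming a daily window defined in UTC; the function name `in_migration_window` is hypothetical.

```python
from datetime import datetime, time, timezone


def in_migration_window(now: datetime, start: time, end: time) -> bool:
    """Return True if `now` (timezone-aware) falls inside the daily UTC window [start, end).

    Windows that cross midnight, such as 22:00 to 02:00, are handled too.
    """
    t = now.astimezone(timezone.utc).time()
    if start <= end:
        return start <= t < end
    # Window wraps past midnight: inside if after the start OR before the end.
    return t >= start or t < end


if __name__ == "__main__":
    ts = datetime(2024, 5, 1, 2, 30, tzinfo=timezone.utc)
    print(in_migration_window(ts, time(1, 0), time(5, 0)))  # True
```

If the check fails, the handler can requeue the event or wait for a scheduled "window opened" event instead of acting immediately.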

2.2 With event-driven pipelines, you can:

a. Eliminate wait time and delays

- With event-driven pipelines, you can eliminate wait time and delays. Instead of waiting for a schedule or someone to start a task, your system reacts instantly when something happens—like a file update or server change. This makes your cloud migration much faster. You don’t waste time checking if something is ready—the system knows and acts for you. This keeps your migration moving smoothly and saves you valuable time.

b. Reduce manual interventions

- An event-driven pipeline helps you reduce manual interventions. You don’t have to click buttons or run scripts every time something changes. Instead, your system automatically handles tasks like copying data, updating configurations, or restarting services. This lowers the risk of human error and frees up your time to focus on bigger things. You simply set up the rules once, and your pipeline keeps working for you throughout your cloud migration.

c. Improve consistency and rollback capabilities

- With event-driven pipelines, you can improve consistency and make it easier to roll back changes if something goes wrong. Every time an event happens, the same steps run, so nothing is forgotten or skipped. If a problem occurs, you can trigger a rollback to undo changes and return to a known safe state. This makes your cloud migration more reliable and predictable, and lets you handle issues faster and with less stress.

d. Dynamically handle failures or unexpected changes

- An event-driven pipeline lets you dynamically handle failures or unexpected changes. If something goes wrong—like a server crashing or a file failing to copy—the system can automatically respond by retrying, sending alerts, or switching to backups. You don’t have to manually monitor everything. The pipeline reacts for you, helping keep your cloud migration on track even when problems pop up. This makes your migration process smarter and more resilient.

- This gives you a low-downtime, high-efficiency path for your cloud migration. Your system stays online as much as possible, so users aren’t affected. At the same time, tasks happen quickly and automatically, which saves you effort. You don’t need to worry about long waits or slow processes—your migration moves forward smoothly and efficiently with minimal interruptions.

3. Key Concepts: Events, Triggers, APIs, and IaC

3.1 Events

- An event is a change or action that happens in a system. It could be something like a file upload, a VM shutdown, or a completed task. Your system can detect these events and react automatically. You don’t have to do everything yourself—event-driven automation helps your system stay smart and responsive during cloud migration. Examples:

a. File uploaded to S3

- An event happens when a file is uploaded to an S3 storage bucket. Your system can detect this and instantly trigger tasks—like copying the file, updating records, or sending an alert. You don’t have to check it manually. The system reacts to the upload event, making your cloud migration faster and more automated.

b. VM shutdown

- A VM shutdown is another event. When a Virtual Machine (VM) stops, your system notices and can automatically trigger actions—like starting a backup VM, freeing up resources, or sending a notification. You don’t need to watch the VM constantly. Event-driven automation helps your system react right away and keeps your cloud migration running smoothly.

c. Terraform apply completed

- When a Terraform apply is completed, it’s also an event. Terraform is a tool that updates your cloud setup using code. Once it finishes making changes, your system can automatically start new tasks—like testing the new setup, updating dashboards, or sending alerts. You don’t have to trigger this yourself. The system responds the moment the apply event is done.

3.2 Triggers

- A trigger tells your system what to do when an event happens. Think of it like an automatic instruction. For example, if a file is uploaded, your system can trigger a data validation function to check if the file is correct. You don’t have to run checks yourself—the system follows the trigger and acts instantly. Triggers help make your cloud migration faster and more reliable because important tasks happen automatically. You set the rules once, and your system keeps watching and reacting to events the way you want, without missing a beat.
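As a minimal sketch of the trigger idea, the in-process registry below maps an event type to the functions that should run for it. The event type `file.uploaded`, the `TriggerRegistry` class, and the CSV-only validation rule are all hypothetical; in a real pipeline this mapping usually lives in your event bus rules.

```python
from collections import defaultdict
from typing import Callable, Dict, List

Handler = Callable[[dict], str]


class TriggerRegistry:
    """Maps event types to the handlers that should run when such an event arrives."""

    def __init__(self) -> None:
        self._handlers: Dict[str, List[Handler]] = defaultdict(list)

    def on(self, event_type: str) -> Callable[[Handler], Handler]:
        """Decorator that registers a handler for one event type."""
        def register(fn: Handler) -> Handler:
            self._handlers[event_type].append(fn)
            return fn
        return register

    def dispatch(self, event: dict) -> List[str]:
        """Run every handler registered for event["type"] and return their results."""
        return [fn(event) for fn in self._handlers.get(event["type"], [])]


triggers = TriggerRegistry()


@triggers.on("file.uploaded")
def validate_file(event: dict) -> str:
    # Hypothetical validation rule: only CSV files are accepted.
    key = event["key"]
    return f"valid:{key}" if key.endswith(".csv") else f"rejected:{key}"
```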

3.3 APIs

- APIs (Application Programming Interfaces) let different tools and systems talk to each other. In cloud migration, you often use RESTful APIs provided by AWS, Azure, or GCP. These APIs help you start or stop servers, move data snapshots, configure firewalls, and more—all through automated commands. You don’t have to do each task manually. By using APIs, your system can perform important cloud actions automatically, making your migration faster, more efficient, and less error-prone.

3.4 Infrastructure as Code (IaC)

- Infrastructure as Code (IaC) lets you define your cloud setup—like servers, networks, and storage—using code instead of doing it manually. Tools like Terraform, Pulumi, or AWS CDK help you write this code. When you change the code, it can create an event that drives your automation pipeline. For example, after a code change, your system might update servers or apply security rules. This makes your cloud migration more consistent, repeatable, and much easier to manage.

4. Architecture of an Event-Driven Cloud Migration Pipeline

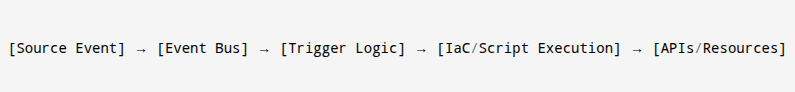

- In an event-driven pipeline, your system reacts automatically to changes. Instead of waiting for you to trigger tasks, each part of the pipeline responds to events—like file uploads or server updates. You’ll use layers like an event bus, orchestration tools, and execution units to make your cloud migration faster, smarter, and more efficient.

4.1 Event Source

- An event source is where your system detects important changes or actions. This could be cloud logs that record everything happening in your cloud, Git events from your Infrastructure as Code (IaC) updates, or monitoring tools that watch your system’s health. When an event—like a server shutting down or new code being pushed—happens, the event source captures it. This event then starts your event-driven pipeline, helping your cloud migration move forward automatically and in real time.

4.2 Event Bus

- An event bus is like the central messenger in your system. It receives events from your event sources and routes each one to the tools or services that have subscribed to it. You can use tools like Amazon EventBridge, Google Cloud Eventarc, or Azure Event Grid for this. When something happens, such as a VM shutting down or a database update, the event bus delivers it in near real time to every service that needs to respond. It keeps your cloud migration pipeline running smoothly and efficiently.

4.3 Orchestration Layer

- The orchestration layer decides which tasks to run, and in what order, after an event happens. Think of it like a conductor for an orchestra. Tools like AWS Step Functions, Argo Workflows, or Temporal manage this. For example, when a database copy finishes, the orchestration layer can trigger tasks like updating firewall rules or testing services. You don't need to handle this manually; event-driven orchestration keeps your cloud migration flowing in the right sequence automatically.

4.4 IaC Layer

- The IaC (Infrastructure as Code) layer lets you manage your entire cloud setup with code instead of clicking through cloud consoles. Tools like Terraform, Pulumi, or Crossplane help you write this code. When you change your code, those changes become events that can drive your automation pipeline. For example, applying new network settings can automatically trigger security tests. This makes your cloud migration more consistent, repeatable, and much easier to manage—just by updating code.

4.5 Execution Units

- Execution units are where your pipeline actually does the work. They can be Lambda functions (small pieces of code that run on demand), containers (lightweight, isolated packages that bundle an app with its dependencies), or scripts. When an event triggers them, these units perform tasks like copying data, configuring networks, or updating databases. Because this work happens automatically the moment it's needed, your cloud migration runs faster and with fewer manual mistakes.

4.6 API Layer

- The API layer lets your system talk to cloud services through tools like REST or GraphQL APIs. This layer is how your pipeline manages resources—like starting or stopping servers, configuring firewalls, or moving data. When an event happens, the system can call these APIs to make the right changes. You don’t need to log in and click around. The API layer helps your cloud migration happen in a fast, automated, and controlled way.

5. Setting Up the Event Sources

- To set up automation, you need to define clear event sources. These are the signals your system watches for—like cloud resource changes, code updates, or app activities. When an event happens, your pipeline knows how to react. By setting up the right event sources, you make sure your cloud migration runs automatically, smoothly, and with fewer manual steps.

a. CloudWatch / Azure Monitor: Detect resource state changes

- You can use tools like CloudWatch (for AWS) or Azure Monitor to detect changes in your cloud resources. For example, if a server stops, a database updates, or a network setting changes, these tools capture the event. Your system can then react automatically—like triggering backups or sending alerts. By setting this up, you ensure your event-driven cloud migration responds quickly and accurately to changes in your cloud environment.

b. Git Events: Detect push/merge for IaC repos

- When you update your Infrastructure as Code (IaC), changes happen through Git events. If you push new code or merge a pull request, your system can detect this event. It can then automatically trigger tasks like rebuilding infrastructure, running tests, or applying security settings. You don’t have to start these tasks manually. By connecting your Git events to your event-driven pipeline, your cloud migration stays in sync with your latest infrastructure code.

c. Custom Events: App signals, DB triggers, service metrics

- Sometimes you need custom events that fit your unique apps. These could include app signals (like a user action), database triggers (like new data inserted), or service metrics (like CPU usage spikes). You can set your system to watch for these events and trigger actions—such as scaling resources or optimizing performance. This makes your event-driven cloud migration even smarter and more adaptable because it can respond to what’s happening inside your apps and services.

5.1 Configure your event rules to capture things like:

a. EC2 Instance terminated

- You can set an event rule to detect when an EC2 instance (a type of virtual server in AWS) is terminated—meaning it’s stopped and deleted. When this happens, your system can automatically trigger actions like updating security settings, reallocating resources, or sending alerts. You don’t have to watch this manually. This makes your cloud migration smarter and ensures your system stays up-to-date with what’s running—and what’s not—in your cloud environment.

b. Storage bucket created

- You should also configure an event rule to catch when a new storage bucket (a place to store files) is created. When this event happens, your system can automatically trigger tasks—like applying security settings, enabling logging, or syncing data. You don’t need to remember to do these things manually. With this event rule, your cloud migration stays secure, organized, and ready to handle new storage as soon as it’s created.

c. Terraform apply success message

- An important event to capture is a Terraform apply success message. This happens when your Infrastructure as Code (IaC) changes have been successfully applied. Once this event is detected, your system can automatically trigger next steps—like running tests, updating monitoring dashboards, or notifying your team. You won’t need to check manually. This makes your event-driven cloud migration more efficient and ensures your infrastructure changes lead smoothly into the next phase.
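On AWS, these three rules could be expressed as EventBridge event patterns. The first two patterns use event formats that AWS publishes (the bucket rule relies on CloudTrail being enabled); the Terraform pattern assumes your CI job publishes its own custom event, so its `source` and `detail-type` are hypothetical. The local `matches` function is a deliberately simplified stand-in for EventBridge's matching, useful only for testing patterns offline.

```python
import json

EC2_TERMINATED = {
    "source": ["aws.ec2"],
    "detail-type": ["EC2 Instance State-change Notification"],
    "detail": {"state": ["terminated"]},
}

# S3 bucket creation is delivered through CloudTrail, so a trail must be enabled.
S3_BUCKET_CREATED = {
    "source": ["aws.s3"],
    "detail-type": ["AWS API Call via CloudTrail"],
    "detail": {"eventSource": ["s3.amazonaws.com"], "eventName": ["CreateBucket"]},
}

# Terraform emits no events itself; this assumes CI publishes a custom event.
TERRAFORM_APPLY_OK = {
    "source": ["custom.terraform"],
    "detail-type": ["Terraform Apply Succeeded"],
}


def matches(pattern: dict, event: dict) -> bool:
    """Simplified matcher: each pattern key must exist in the event and its value
    must be one of the listed values; nested dicts are matched recursively.
    Real EventBridge supports more operators (prefix, numeric, exists, ...)."""
    for key, expected in pattern.items():
        if key not in event:
            return False
        if isinstance(expected, dict):
            if not isinstance(event[key], dict) or not matches(expected, event[key]):
                return False
        elif event[key] not in expected:
            return False
    return True


def register_rules(events_client) -> None:
    """Create one rule per pattern using a boto3 EventBridge client (boto3.client("events"))."""
    for name, pattern in [
        ("ec2-terminated", EC2_TERMINATED),
        ("s3-bucket-created", S3_BUCKET_CREATED),
        ("terraform-apply-ok", TERRAFORM_APPLY_OK),
    ]:
        events_client.put_rule(Name=name, EventPattern=json.dumps(pattern), State="ENABLED")
```

Each rule still needs a target (for example via `put_targets`) before matched events go anywhere.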

6. APIs as the Backbone of Real-Time Cloud Operations

- Cloud APIs are essential to dynamic migrations. Example use cases:

a. AWS CLI/API to snapshot volumes

- You can use the AWS CLI or AWS API to create snapshots of your storage volumes. A snapshot is like a backup of your data at a certain point in time. With an API call, your system can take snapshots automatically—without you clicking anything. This is super useful during a cloud migration, helping you keep your data safe and ready to move. You can also use snapshots to quickly recover if something goes wrong.
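A minimal sketch with Boto3, assuming the caller passes in `boto3.client("ec2")`: it calls `create_snapshot` and then uses Boto3's built-in `snapshot_completed` waiter. Passing the client in is a design choice that makes the function reusable inside a Lambda handler and easy to stub in tests; the function name and description text are hypothetical.

```python
def snapshot_volume(ec2_client, volume_id: str, wait: bool = True) -> str:
    """Create an EBS snapshot of `volume_id` and optionally block until it completes.

    `ec2_client` is expected to be boto3.client("ec2").
    """
    resp = ec2_client.create_snapshot(
        VolumeId=volume_id,
        Description=f"pre-migration snapshot of {volume_id}",
    )
    snapshot_id = resp["SnapshotId"]
    if wait:
        # Boto3 waiter that polls DescribeSnapshots until the snapshot is completed.
        ec2_client.get_waiter("snapshot_completed").wait(SnapshotIds=[snapshot_id])
    return snapshot_id
```

For example, `snapshot_volume(boto3.client("ec2"), "vol-0123456789abcdef0")`, where the volume ID is a placeholder.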

b. Azure REST API to update DNS

- You can use the Azure REST API to update your DNS settings. DNS helps direct users to the right servers. When you migrate services to the cloud, you often need to update DNS so that traffic goes to your new setup. With an API call, your system can do this automatically when an event happens—no manual updates needed. This helps keep your cloud migration fast, smooth, and ensures users always reach the correct service.
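As a sketch, the request below targets the Azure DNS "Record Sets - Create Or Update" operation for an A record. It only builds the request; you would send it with `urllib.request.urlopen` and a valid Microsoft Entra ID access token, which is assumed here. The function name and all identifiers in the usage are placeholders.

```python
import json
import urllib.request

API_VERSION = "2018-05-01"  # Azure DNS record-set API version


def build_a_record_update(subscription_id: str, resource_group: str, zone: str,
                          record_name: str, ipv4: str, token: str,
                          ttl: int = 300) -> urllib.request.Request:
    """Build (but do not send) a PUT that creates or updates an A record set."""
    url = (
        "https://management.azure.com"
        f"/subscriptions/{subscription_id}/resourceGroups/{resource_group}"
        f"/providers/Microsoft.Network/dnsZones/{zone}/A/{record_name}"
        f"?api-version={API_VERSION}"
    )
    body = {"properties": {"TTL": ttl, "ARecords": [{"ipv4Address": ipv4}]}}
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        method="PUT",
        headers={"Authorization": f"Bearer {token}", "Content-Type": "application/json"},
    )


req = build_a_record_update("sub-1", "rg-migration", "example.com", "app", "203.0.113.10", "TOKEN")
```

A short TTL (300 seconds here) is a common choice during cutover so clients pick up the new address quickly.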

c. Google Cloud API to manage IAM roles

- You can use the Google Cloud API to manage IAM (Identity and Access Management) roles. IAM roles control who can access what in your cloud. During a cloud migration, you might need to update roles to give the right people or apps the right permissions. With an API call, your system can adjust these roles automatically as part of your migration process. This keeps your cloud setup secure and ensures access is always correct.
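IAM changes in Google Cloud usually follow a read-modify-write pattern: fetch the policy with `getIamPolicy`, change the bindings locally, and write it back with `setIamPolicy`, keeping the `etag` so that a concurrent edit is rejected rather than silently overwritten. The helper below sketches only the local "modify" step on the policy's JSON form; the function name is hypothetical.

```python
import copy


def add_member(policy: dict, role: str, member: str) -> dict:
    """Return a copy of an IAM policy in which `member` is granted `role`.

    The original policy (including its etag) is left untouched, so the copy
    can be sent back with setIamPolicy.
    """
    updated = copy.deepcopy(policy)
    bindings = updated.setdefault("bindings", [])
    for binding in bindings:
        # Only reuse an unconditional binding for the same role.
        if binding["role"] == role and "condition" not in binding:
            if member not in binding["members"]:
                binding["members"].append(member)
            return updated
    bindings.append({"role": role, "members": [member]})
    return updated
```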

6.1 Automation via APIs

- A curl command can start moving a Virtual Machine (VM) from one cloud region to another by sending a POST request to the cloud provider's /vm/migrate endpoint. The Authorization header includes your security token so the system knows you're allowed to do this, and the data section tells the API which VM to migrate (abc123) and where to move it (us-east1 region). This type of API call lets your cloud migration happen automatically, without manual steps.
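The same call can be sketched in Python with the standard library. The `/vm/migrate` path, VM ID, and region come from the description above; the base URL, the Bearer token scheme, and the JSON field names `vm_id` and `target_region` are hypothetical placeholders, since each provider defines its own migration API.

```python
import json
import urllib.request


def build_vm_migration_request(base_url: str, token: str, vm_id: str,
                               target_region: str) -> urllib.request.Request:
    """Build a POST to a hypothetical /vm/migrate endpoint (not a real provider API)."""
    payload = {"vm_id": vm_id, "target_region": target_region}  # placeholder schema
    return urllib.request.Request(
        f"{base_url.rstrip('/')}/vm/migrate",
        data=json.dumps(payload).encode("utf-8"),
        method="POST",
        headers={"Authorization": f"Bearer {token}", "Content-Type": "application/json"},
    )


req = build_vm_migration_request("https://api.example-cloud.test", "TOKEN", "abc123", "us-east1")
# urllib.request.urlopen(req) would send it; not called here because the endpoint is hypothetical.
```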

- You can use SDKs like Boto3 (for AWS) or the Azure SDK in your Lambda functions or container scripts. SDKs handle details such as request signing and retries for you, so your code can talk to cloud services safely and with less effort. Because those functions and containers can run many copies in parallel, your tasks also scale as the volume of data grows. Using SDKs this way helps your cloud migration run securely and automatically.

7. Integrating Infrastructure as Code (IaC)

- Infrastructure as Code (IaC) lets you define your cloud setup using code instead of clicking through menus. This makes your migration process declarative—you describe what you want—and repeatable—you can run the same setup again anytime. Using IaC, you make your cloud migration more reliable, consistent, and easy to manage with just a few code updates.

7.1 Popular IaC Tools

a. Terraform

- Terraform is one of the most widely used tools for Infrastructure as Code (IaC). It's cloud-agnostic: through provider plugins, you can use it with AWS, Azure, GCP, and many other platforms. You write code to describe what your cloud setup should look like, and Terraform builds it for you. This makes your cloud migration easier because you can create, update, and manage your infrastructure in a repeatable and automated way across multiple cloud platforms.

b. Pulumi

- Pulumi is an IaC tool that lets you write your infrastructure code in real programming languages like Python, JavaScript, or Go. Instead of using a special config language, you use the language you already know. This makes your code more flexible and easier to integrate with other tools. During cloud migration, Pulumi helps you automate building your cloud environment while keeping your infrastructure versioned, testable, and easy to modify with familiar coding practices.

c. AWS CDK

- The AWS CDK (Cloud Development Kit) is an IaC tool for building AWS infrastructure using real programming languages like TypeScript or Python. You write code to define your infrastructure instead of writing CloudFormation templates by hand; the CDK then synthesizes your code into CloudFormation templates for deployment. This makes your cloud migration faster and more customizable. You can easily add logic, loops, and conditions to your infrastructure, making it powerful for building and managing AWS resources.

d. Crossplane

- Crossplane is a Kubernetes-native IaC tool. This means you manage your cloud infrastructure through Kubernetes itself: you write Kubernetes manifests, and Crossplane's controllers running in your cluster create cloud resources like databases or networks. This is great if you already use Kubernetes for your apps. During cloud migration, Crossplane helps you keep your infrastructure and applications managed in one place. It also supports multi-cloud environments, giving you unified control across different cloud providers.

7.2 Event-Driven IaC

a. Trigger pipeline step on terraform apply

- When you run terraform apply, your system applies Infrastructure as Code (IaC) changes. You can set an event trigger so that once this step finishes, your pipeline automatically starts the next tasks—like running tests, updating dashboards, or deploying apps. You don’t need to start these steps manually. This makes your cloud migration more automated, efficient, and ensures your infrastructure and applications stay in sync as soon as changes are made.
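A minimal sketch of emitting an event when `terraform apply` finishes, assuming the pipeline applies a saved plan file (here `tfplan`) produced earlier by `terraform plan -out=tfplan`. The `publish` callback and the event names are hypothetical hooks for whatever event bus you use; `run` defaults to `subprocess.run` and can be replaced with a stub in tests.

```python
import subprocess
from typing import Callable


def apply_and_emit(
    workdir: str,
    publish: Callable[[dict], None],
    plan_file: str = "tfplan",
    run: Callable[..., subprocess.CompletedProcess] = subprocess.run,
) -> bool:
    """Apply a saved Terraform plan and publish a success or failure event.

    Applying a saved plan does not prompt for approval, which suits pipelines.
    """
    result = run(
        ["terraform", "apply", "-input=false", plan_file],
        cwd=workdir, capture_output=True, text=True,
    )
    ok = result.returncode == 0
    publish({
        # Hypothetical event names; downstream rules match on "type".
        "type": "terraform.apply.succeeded" if ok else "terraform.apply.failed",
        "workdir": workdir,
        # Keep only the tail of the output so the event stays small.
        "output_tail": (result.stdout if ok else result.stderr)[-2000:],
    })
    return ok
```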

b. Auto rollback on plan or apply failure

- A failed terraform plan changes nothing, so your pipeline should simply stop and report the error. A failed terraform apply is different: it can leave changes partly applied, and Terraform does not roll them back on its own. You can set your pipeline to respond automatically, for example by re-applying the last known-good configuration (such as the previous commit in your IaC repo) to bring your infrastructure back to a known safe state. Using event-driven IaC with automatic rollback makes your cloud migration more reliable and helps you avoid broken setups or downtime caused by failed deployments or configuration mistakes.

c. Notify Slack on drift detection

- You can configure your system to notify your team in Slack when it detects drift—which means your actual cloud setup doesn’t match what’s written in your IaC code. An event-driven pipeline can automatically send this alert so you can take action right away. This helps you keep your cloud migration and infrastructure consistent and secure, making sure everything matches your expected setup and no unexpected changes go unnoticed.
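One common way to detect drift is `terraform plan -detailed-exitcode`, which exits with 0 when there are no changes, 1 on error, and 2 when the plan contains changes. On a branch whose code has already been applied, exit code 2 usually means drift. The sketch below maps those exit codes and builds a Slack incoming-webhook request; the webhook URL is a placeholder you would create in Slack, and the function names are hypothetical.

```python
import json
import subprocess
import urllib.request
from typing import Callable, Optional


def check_drift(workdir: str,
                run: Callable[..., subprocess.CompletedProcess] = subprocess.run) -> Optional[bool]:
    """Return True if the plan shows changes, False if none, None if the plan errored."""
    result = run(["terraform", "plan", "-detailed-exitcode", "-input=false"],
                 cwd=workdir, capture_output=True, text=True)
    # 0 = no changes, 2 = changes present, anything else (1) = error.
    return {0: False, 2: True}.get(result.returncode)


def slack_alert(webhook_url: str, text: str) -> urllib.request.Request:
    """Build a POST for a Slack incoming webhook; send it with urllib.request.urlopen."""
    return urllib.request.Request(
        webhook_url,
        data=json.dumps({"text": text}).encode("utf-8"),
        method="POST",
        headers={"Content-Type": "application/json"},
    )
```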

8. Event Processing with Serverless and Queues

- To handle lots of events or events that happen at different times (asynchronous), you can use serverless functions and queues. Serverless functions run your code automatically when an event happens. Queues like SQS or Kafka help manage event flow so your system stays stable. This makes your cloud migration more scalable, reliable, and automated.

a. AWS Lambda / Azure Functions / Cloud Functions for execution

- You can use serverless functions like AWS Lambda, Azure Functions, or Google Cloud Functions to run small bits of code automatically when an event happens. You don’t need to manage servers—they scale automatically and only run when needed. For cloud migration, these functions help you perform tasks like copying data, updating settings, or sending alerts. Using serverless execution keeps your migration pipeline lightweight, fast, and cost-effective, handling events as soon as they occur.
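For example, a Python Lambda function subscribed to S3 `ObjectCreated` notifications receives a list of records, each carrying the bucket name and object key. Object keys arrive URL-encoded, so the sketch decodes them. The per-object work is a placeholder, and the handler name is whatever you configure for the function.

```python
from urllib.parse import unquote_plus


def handler(event, context):
    """Lambda entry point for S3 ObjectCreated notifications (Python runtime)."""
    processed = []
    for record in event.get("Records", []):
        bucket = record["s3"]["bucket"]["name"]
        # Keys are URL-encoded in S3 events (spaces arrive as "+").
        key = unquote_plus(record["s3"]["object"]["key"])
        processed.append(f"{bucket}/{key}")  # placeholder: start a copy or validation job here
    return {"processed": processed}
```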

b. SQS / PubSub / EventBridge / Kafka to queue and buffer events

- When handling lots of events, it's smart to use a queue to manage them. Tools like SQS (AWS), Pub/Sub (GCP), EventBridge (AWS), or Kafka can buffer events so your system doesn't get overwhelmed. Even if many events happen at once, your pipeline can then process them at a steady, controlled rate. For cloud migration, queuing events helps you avoid errors and handle asynchronous tasks, keeping everything running in a controlled and orderly way.
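When SQS feeds a Lambda function, one bad message does not have to fail the whole batch. With `ReportBatchItemFailures` enabled on the event source mapping, the handler can return only the IDs of the failed messages, and SQS makes just those visible again for retry. The sketch below assumes each message body is JSON; `process_migration_task` is a hypothetical placeholder for real work.

```python
import json


def process_migration_task(task: dict) -> None:
    """Hypothetical unit of work; raises on failure."""
    if "object_key" not in task:
        raise ValueError("missing object_key")


def sqs_handler(event, context):
    """Lambda handler for an SQS event source with partial batch responses enabled."""
    failures = []
    for record in event["Records"]:
        try:
            process_migration_task(json.loads(record["body"]))
        except Exception:
            # Report only this message; successful ones are deleted from the queue.
            failures.append({"itemIdentifier": record["messageId"]})
    return {"batchItemFailures": failures}
```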

c. Step Functions / Durable Functions for orchestration

- You can use Step Functions (AWS) or Durable Functions (Azure) to control the workflow of your event-driven pipeline. These tools help you define what happens next after an event—like waiting for one step to finish before starting another. For example, after migrating data, your system can automatically update a firewall or send a report. Using workflow orchestration makes your cloud migration pipeline organized, automated, and easy to manage from start to finish.
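As a sketch, the example above (migrate data, then update a firewall, then send a report) could be written as an AWS Step Functions definition in Amazon States Language. The Lambda ARNs are placeholders, and the retry settings are illustrative rather than recommended values.

```python
import json

# Placeholder ARNs: replace with your own Lambda functions.
MIGRATE_FN = "arn:aws:lambda:us-east-1:123456789012:function:migrate-data"
FIREWALL_FN = "arn:aws:lambda:us-east-1:123456789012:function:update-firewall"
REPORT_FN = "arn:aws:lambda:us-east-1:123456789012:function:send-report"

definition = {
    "Comment": "Migrate data, then update the firewall, then send a report",
    "StartAt": "MigrateData",
    "States": {
        "MigrateData": {
            "Type": "Task",
            "Resource": MIGRATE_FN,
            # Retry failed tasks with exponential backoff (5s, 10s, 20s) before giving up.
            "Retry": [{"ErrorEquals": ["States.TaskFailed"], "IntervalSeconds": 5,
                       "MaxAttempts": 3, "BackoffRate": 2.0}],
            "Catch": [{"ErrorEquals": ["States.ALL"], "Next": "MigrationFailed"}],
            "Next": "UpdateFirewall",
        },
        "UpdateFirewall": {"Type": "Task", "Resource": FIREWALL_FN, "Next": "SendReport"},
        "SendReport": {"Type": "Task", "Resource": REPORT_FN, "End": True},
        "MigrationFailed": {"Type": "Fail", "Error": "MigrationFailed",
                            "Cause": "Data migration did not complete"},
    },
}

definition_json = json.dumps(definition, indent=2)
# e.g. boto3.client("stepfunctions").create_state_machine(
#     name="migration", definition=definition_json, roleArn="<execution role ARN>")
```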

8.1 Benefits

a. Scales automatically

- When you use serverless functions and queues, your system can scale automatically. This means if there are lots of events, it will handle more of them without you doing anything. If fewer events happen, it will scale down. You don’t have to worry about adding or removing servers. This makes your cloud migration more efficient and ensures your system can handle both small and large workloads without breaking or slowing down.

b. Cost-effective (pay-per-use)

- With serverless tools, you only pay when your code actually runs; this is called pay-per-use. You don't pay for servers sitting idle, and if there are no events, you pay nothing for function execution (storage, data transfer, and other services are billed separately). This makes your cloud migration cost-effective, especially for unpredictable or bursty workloads. You only spend when your system is actively doing work, which helps you control cloud costs while staying flexible.

c. Stateless and fast

- Serverless functions are designed to be stateless: you shouldn't rely on anything being kept from previous runs. Each invocation takes the event, runs the code, and finishes quickly. This makes them a good fit for event-driven cloud migration, because each task is handled independently. Because they start on demand, your pipeline reacts to changes in near real time. Using stateless, short-lived functions helps your migration stay responsive, reliable, and able to handle lots of small tasks efficiently.

9. Real-World Use Case: Migrating Multi-Tier Applications

9.1 The Stack

a. Web App + API Layer

- You might be migrating a web app along with an API layer that connects to other services. The web app handles what users see, while the API layer handles data and logic. During cloud migration, you use event-driven automation to move these pieces with minimal downtime. Your pipeline can update DNS, deploy new versions, and test connections—all without you needing to do everything manually.

b. Database (PostgreSQL)

- Your app likely uses a PostgreSQL database to store important data. Migrating this requires extra care—you want no data loss and minimal downtime. With an event-driven pipeline, you can automate database replication, monitor for sync completion, and switch over once ready. You set up triggers to handle every step, making your database migration smooth and reliable, so your users always get the most up-to-date data.
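To "monitor for sync completion" with physical streaming replication, one option is to compare write-ahead log (WAL) positions: `pg_current_wal_lsn()` on the primary and `pg_last_wal_replay_lsn()` on the replica (PostgreSQL 10 and later). Once writes to the source are paused, zero lag is a reasonable cutover condition. The sketch below only does the comparison; querying the databases is left out, and the function names are hypothetical.

```python
def lsn_to_int(lsn: str) -> int:
    """Convert a PostgreSQL LSN such as '16/B374D848' into a byte position."""
    high, low = lsn.split("/")
    return (int(high, 16) << 32) | int(low, 16)


def replica_caught_up(primary_lsn: str, replica_replay_lsn: str, max_lag_bytes: int = 0) -> bool:
    """True when the replica has replayed WAL to within `max_lag_bytes` of the primary.

    primary_lsn:        SELECT pg_current_wal_lsn();      -- run on the primary
    replica_replay_lsn: SELECT pg_last_wal_replay_lsn();  -- run on the replica
    """
    return lsn_to_int(primary_lsn) - lsn_to_int(replica_replay_lsn) <= max_lag_bytes
```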

c. File Storage

- Many apps use file storage for things like images, videos, and documents. Migrating this storage can be tricky if files change during migration. You can automate the process using event-driven triggers—copy existing files, watch for new changes, and sync them automatically. This ensures your file storage is always updated, and no files get lost. You don’t need to manage this by hand; your pipeline does it for you.
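To copy existing files and then keep syncing changes, the pipeline needs to know which objects differ. A minimal sketch, assuming you can list each side as a mapping from object key to a content hash you compute yourself (provider ETags are not always comparable across clouds or multipart uploads); `plan_sync` is a hypothetical name.

```python
from typing import Dict, List, Tuple


def plan_sync(source: Dict[str, str], target: Dict[str, str]) -> Tuple[List[str], List[str]]:
    """Compare manifests mapping object key -> content hash.

    Returns (to_copy, to_delete): keys that are new or changed in the source,
    and keys that exist only in the target.
    """
    to_copy = sorted(k for k, h in source.items() if target.get(k) != h)
    to_delete = sorted(k for k in target if k not in source)
    return to_copy, to_delete
```

Running this once gives the initial bulk copy; re-running it after each storage event (or on a final pass at cutover) picks up anything that changed in the meantime.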

d. CI/CD Pipelines

- You use CI/CD pipelines (Continuous Integration/Continuous Deployment) to build, test, and deploy your code. During migration, these pipelines need to point to the new cloud environment. An event-driven setup helps automate this—when new infrastructure is ready, your CI/CD pipeline can update itself, run tests, and deploy to the new cloud. This keeps your app updates flowing smoothly even while you’re migrating, so your users always get the latest features.

9.2 Step-by-Step Reactive Migration

a. Git push triggers the IaC plan for the target cloud

- When you push code to your Git repo, it triggers your Infrastructure as Code (IaC) plan for the target cloud. This starts the process of building your new cloud environment. You don’t have to do this manually—your pipeline reacts to the Git push event. This keeps your migration process automated, consistent, and ensures that new infrastructure is created exactly as you’ve defined in your code.
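As a sketch, a webhook receiver could decide whether a push should start the IaC plan by checking the branch and the changed paths in the GitHub push event payload (`ref`, and each commit's `added`, `modified`, and `removed` lists). The `main` branch, the `infra/` directory, and the function name are assumptions about your repository; in production you would also verify the webhook signature before trusting the payload.

```python
def iac_changed(push_payload: dict, branch: str = "main", iac_prefix: str = "infra/") -> bool:
    """Return True if a push to `branch` touched any file under `iac_prefix`."""
    if push_payload.get("ref") != f"refs/heads/{branch}":
        return False
    for commit in push_payload.get("commits", []):
        paths = commit.get("added", []) + commit.get("modified", []) + commit.get("removed", [])
        if any(p.startswith(iac_prefix) for p in paths):
            return True
    return False
```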

b. Terraform apply triggers: DNS pre-check

- When your Terraform apply runs, it can trigger a DNS pre-check. This checks if your domain names are ready to point to your new cloud environment. It helps prevent downtime or errors by making sure your DNS settings won’t break when you switch over. This step is automated in your pipeline, so your cloud migration can move forward smoothly without surprises when the new infrastructure goes live.

c. Terraform apply triggers: Database replication job

- Your Terraform apply can also trigger a database replication job. This automatically starts copying your database—like PostgreSQL—to your new cloud environment. The data stays in sync while you’re migrating. You don’t have to manually copy the data. This ensures your cloud migration is safe and that no information is lost during the move. It also helps your database be ready to serve the app in the new cloud when needed.

d. Once replication is complete → event triggers app container migration

- When your database replication is done, an event triggers the next step: migrating your app containers (which run your web apps and services). This ensures your apps move only when the data is ready. Your pipeline controls this automatically, keeping the migration in the correct order. This helps you avoid app errors and ensures a smooth cutover to the new cloud environment when the app is fully migrated and ready to go.

e. Storage sync completion → triggers route update and monitoring integration

- Once your storage sync (moving files to the new cloud) is finished, this event triggers two tasks: a route update (so your app traffic goes to the new cloud) and setting up monitoring. Monitoring helps you track the health of your new system. This is done automatically to avoid downtime and ensure your cloud migration is both complete and well-monitored, giving you confidence that everything works in the new environment.

f. Post-migration tests fire → results determine rollback or commit

- After the migration steps finish, your system runs post-migration tests that check whether your app and data work correctly in the new cloud. If the tests pass, the pipeline commits (finalizes) the migration. If they fail, the pipeline can automatically roll back to the old setup. This keeps the migration safe and reliable, and it ensures that only a fully working system goes live for your users.
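A minimal Python sketch of the commit-or-rollback decision follows. The check names are hypothetical examples, such as a health endpoint or matching row counts between source and target. Two design choices are worth noting. A check that crashes counts as a failure, and an empty test suite rolls back rather than committing on no evidence.

```python
from typing import Callable


def run_post_migration_tests(checks: dict[str, Callable[[], bool]]) -> dict[str, bool]:
    """Run each named check; a check that raises counts as a failure."""
    results: dict[str, bool] = {}
    for name, check in checks.items():
        try:
            results[name] = bool(check())
        except Exception:
            results[name] = False
    return results


def decide(results: dict[str, bool]) -> str:
    """Commit only if at least one check ran and every check passed."""
    return "commit" if results and all(results.values()) else "rollback"
```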

10. Benefits of Reactive Cloud Migration Pipelines

10.1 Speed: Real-time actions reduce delays

- In a reactive cloud migration pipeline, your system acts on events in real time, so tasks start immediately rather than on a fixed schedule. You don’t wait for batch jobs or manual triggers, because the pipeline reacts the moment something is ready. Removing that idle time between steps lets you migrate apps and data faster and keeps the whole process efficient.

10.2 Resilience: Self-healing and failover strategies

- Your reactive pipeline can include self-healing and failover steps. If a task fails, the system automatically retries it or switches to a backup path (failover), so many issues resolve without manual intervention. This makes the migration more resilient: it can recover from transient problems and keep going. The result is shorter downtime and a stable, reliable migration even when challenges arise.
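Here is a minimal Python sketch of retry-then-failover with exponential backoff. `primary` and `fallback` are hypothetical callables, for example a copy to the primary target region versus a copy to a standby region. In practice, narrow `retry_on` to errors you know are transient rather than retrying every exception.

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def with_retry_and_failover(
    primary: Callable[[], T],
    fallback: Callable[[], T],
    attempts: int = 3,
    base_delay: float = 1.0,
    retry_on: tuple[type[BaseException], ...] = (Exception,),
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Try `primary` up to `attempts` times with exponential backoff, then fail over."""
    for attempt in range(attempts):
        try:
            return primary()
        except retry_on:
            if attempt < attempts - 1:
                sleep(base_delay * 2 ** attempt)  # waits 1s, 2s, 4s, ... between tries
    return fallback()
```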

10.3 Auditability: Logs all events and actions

- Your reactive pipeline automatically logs every event and action that happens during the migration. This gives you full auditability—you can always check what was done, when, and by which part of the system. This is helpful for security, compliance, and troubleshooting. If something goes wrong, you can easily trace the cause. Having this complete record keeps your migration process transparent and trustworthy.

10.4 Consistency: IaC ensures identical environments

- Using Infrastructure as Code (IaC) in your pipeline lets you create identical environments every time, which reduces manual errors and differences between test and production setups. Your reactive pipeline can apply IaC changes automatically and verify that the deployed resources match your desired state. This keeps the migration consistent, so you can be confident that what works in testing will behave the same way in production. It also makes the process reliable and repeatable.
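Terraform itself reports drift: `terraform plan -detailed-exitcode` exits with code 2 when the real infrastructure differs from the code. To illustrate what that verification means, here is a minimal Python sketch that compares a desired configuration with the observed one. Both dictionaries in the example are hypothetical.

```python
from typing import Any


def diff_state(desired: dict[str, Any], actual: dict[str, Any], path: str = "") -> list[str]:
    """Return human-readable differences between desired and actual configuration."""
    drift: list[str] = []
    for key in sorted(set(desired) | set(actual)):
        here = f"{path}.{key}" if path else key
        if key not in actual:
            drift.append(f"missing: {here}")
        elif key not in desired:
            drift.append(f"unexpected: {here}")
        elif isinstance(desired[key], dict) and isinstance(actual[key], dict):
            drift.extend(diff_state(desired[key], actual[key], here))  # recurse into nested blocks
        elif desired[key] != actual[key]:
            drift.append(f"changed: {here} ({desired[key]!r} -> {actual[key]!r})")
    return drift
```

An empty list means the environment matches its definition. A non-empty list signals the pipeline to re-apply the IaC or raise an alert.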

10.5 Scalability: Works across multiple services and teams

- A reactive cloud migration pipeline scales well. It can handle many services and support large teams working in parallel, without manual coordination of every step. As you add more apps or data, the pipeline picks them up through the same event-driven automation. This lets the process grow with your needs, whether you’re moving a single app or an entire company’s infrastructure to the cloud, while staying flexible and organized.

11. Challenges and How to Overcome Them

11.1 Event overload → Use filters and prioritization rules

- When too many events happen at once, your system can get overwhelmed. You should set up filters and prioritization rules to control which events your pipeline reacts to. This helps you focus on the most important tasks first and avoid wasting resources. With the right filters in place, your cloud migration stays efficient, even when dealing with high volumes of events.
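Here is a minimal Python sketch of filtering plus prioritization, built on the standard `heapq` module. The event types, their priorities, and the ignored `dev-sandbox` source are hypothetical. In a real pipeline, a managed event bus would normally do the coarse filtering with rules before events reach this queue.

```python
import heapq
import itertools
from typing import Optional

# Hypothetical policy: lower number = more urgent; unknown types are dropped.
PRIORITY = {"replication.failed": 0, "vm.shutdown": 1, "storage.sync.completed": 2}
IGNORED_SOURCES = {"dev-sandbox"}


class PrioritizedEvents:
    def __init__(self) -> None:
        self._heap: list[tuple[int, int, dict]] = []
        self._seq = itertools.count()  # tie-breaker keeps FIFO order within a priority

    def offer(self, event: dict) -> bool:
        """Queue the event if it passes the filters; return False if it was dropped."""
        if event.get("source") in IGNORED_SOURCES or event.get("type") not in PRIORITY:
            return False
        heapq.heappush(self._heap, (PRIORITY[event["type"]], next(self._seq), event))
        return True

    def next_event(self) -> Optional[dict]:
        """Pop the most urgent event, or None when the queue is empty."""
        return heapq.heappop(self._heap)[2] if self._heap else None
```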

11.2 Debugging complexity → Implement centralized logging and tracing

- Debugging a large pipeline is hard when you can’t see where things went wrong. Set up centralized logging and tracing so every event and action is recorded in one place and can be followed across the steps of a single migration. This makes it easy to see what happened and when, so you can find and fix problems quickly and keep the migration on track.
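Below is a minimal Python sketch of the tracing half, using only the standard library. Each log line is JSON and carries a correlation ID. The sketch assumes that ID travels inside every event payload, which lets a central log store stitch one migration’s steps together. The field names are assumptions. OpenTelemetry is a common, fuller alternative for distributed tracing.

```python
import contextvars
import json
import logging
import uuid
from typing import Optional

# The current migration's correlation ID, visible to every log call in this context.
correlation_id = contextvars.ContextVar("correlation_id", default="-")


class JsonFormatter(logging.Formatter):
    """Emit one JSON object per log line so a central log store can index the fields."""

    def format(self, record: logging.LogRecord) -> str:
        return json.dumps({
            "time": self.formatTime(record),
            "level": record.levelname,
            "step": record.name,
            "correlation_id": correlation_id.get(),
            "message": record.getMessage(),
        })


def start_trace(incoming_id: Optional[str] = None) -> str:
    """Reuse the ID carried by an incoming event, or start a new trace."""
    cid = incoming_id or str(uuid.uuid4())
    correlation_id.set(cid)
    return cid


def get_logger(step: str) -> logging.Logger:
    """Logger for one pipeline step, writing JSON lines to stderr for a log shipper."""
    logger = logging.getLogger(step)
    if not logger.handlers:
        handler = logging.StreamHandler()
        handler.setFormatter(JsonFormatter())
        logger.addHandler(handler)
        logger.setLevel(logging.INFO)
    return logger
```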

11.3 Security → Implement scoped roles and API token rotation

- Keeping your system secure is critical. You should use scoped roles—only give each part of the pipeline the permissions it really needs. Also, rotate your API tokens regularly so old tokens can’t be misused. This reduces the risk of a security breach. Good security practices protect your data, your infrastructure, and the success of your cloud migration.
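Here is a minimal Python sketch of the rotation half: a scheduled job that flags tokens past a maximum age. The 30-day limit and the token names are hypothetical. In AWS, Secrets Manager can also rotate secrets on a schedule by itself, which is usually preferable to hand-rolled checks.

```python
from datetime import datetime, timedelta, timezone
from typing import Optional

MAX_TOKEN_AGE = timedelta(days=30)  # assumption: your rotation policy


def tokens_due_for_rotation(
    issued_at: dict[str, datetime],
    now: Optional[datetime] = None,
    max_age: timedelta = MAX_TOKEN_AGE,
) -> list[str]:
    """Return the names of tokens whose age has reached max_age, oldest first."""
    now = now or datetime.now(timezone.utc)
    stale = [(issued, name) for name, issued in issued_at.items() if now - issued >= max_age]
    return [name for issued, name in sorted(stale)]
```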

11.4 Race conditions → Use queues and throttling logic

- Sometimes events arrive too fast or out of order, causing race conditions that can break your pipeline. To prevent this, use queues to control the flow of events, with ordered (first-in, first-out) queues where sequence matters. Add throttling logic to slow processing down when needed. Together these help ensure events are processed in the right order and at a rate downstream systems can handle, which keeps the migration stable.
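A minimal Python sketch of both ideas follows. It assumes each event carries a per-resource sequence number. That field is hypothetical: some event sources provide one, and for others you would need to add it. A token bucket throttles how fast actions run. A guard discards any event that arrives after a newer one for the same resource. The rates are illustrative.

```python
import time
from typing import Callable


class TokenBucket:
    """Throttle: allow about `rate` actions per second, with bursts up to `capacity`."""

    def __init__(self, rate: float, capacity: int, clock: Callable[[], float] = time.monotonic) -> None:
        self.rate = rate
        self.capacity = capacity
        self.tokens = float(capacity)
        self.clock = clock
        self.last = clock()

    def try_acquire(self) -> bool:
        now = self.clock()
        # Refill in proportion to elapsed time, never beyond capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


class InOrderGuard:
    """Drop events older than the newest one already applied to the same resource."""

    def __init__(self) -> None:
        self.last_seq: dict[str, int] = {}

    def accept(self, resource: str, seq: int) -> bool:
        if seq <= self.last_seq.get(resource, -1):
            return False  # stale or duplicate: a newer state was already applied
        self.last_seq[resource] = seq
        return True
```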

11.5 Tool sprawl → Standardize tech stack and documentation

- If you use too many tools, your system becomes hard to manage—this is called tool sprawl. You should standardize your tech stack and write clear documentation. Pick the tools that best fit your needs and stick with them. Good documentation helps your team understand the system and work together more easily. A simple, well-documented pipeline makes your cloud migration easier to maintain and scale.

| Challenge | Mitigation |
|---|---|
| Event overload | Use filters and prioritization rules |
| Debugging complexity | Implement centralized logging and tracing |
| Security | Implement scoped roles and API token rotation |
| Race conditions | Use queues and throttling logic |
| Tool sprawl | Standardize tech stack and documentation |

12. Tools for Building Event-Driven Pipelines

12.1 Event Buses

a. AWS EventBridge

- You can use AWS EventBridge to manage event-driven workflows. It lets you easily connect different AWS services and trigger actions when something happens, like a file upload or VM shutdown. You just set rules to match events and decide what actions should follow. With EventBridge, your cloud migration pipeline becomes smarter, reacting in real time to important changes across your AWS environment.
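EventBridge rules match events by pattern. The Python sketch below shows a pattern for EC2 stop events in the documented EventBridge format. It also includes a deliberately simplified matcher that handles only exact-value matching. Real EventBridge also supports prefix, numeric, `exists`, and other filters, so treat the matcher as an illustration of the semantics, not a replacement.

```python
import json

# An EventBridge-style pattern for "an EC2 instance entered the stopped state".
VM_STOPPED_PATTERN = {
    "source": ["aws.ec2"],
    "detail-type": ["EC2 Instance State-change Notification"],
    "detail": {"state": ["stopped"]},
}

# A rule's EventPattern is supplied as a JSON string.
RULE_PATTERN_JSON = json.dumps(VM_STOPPED_PATTERN)


def matches(pattern: dict, event: dict) -> bool:
    """Exact-value subset of EventBridge matching: every pattern field must be present
    and its value must appear in the pattern's list (nested objects match recursively)."""
    for key, expected in pattern.items():
        if key not in event:
            return False
        actual = event[key]
        if isinstance(expected, dict):
            if not isinstance(actual, dict) or not matches(expected, actual):
                return False
        elif actual not in expected:
            return False
    return True
```

To register the rule in AWS, you would pass `RULE_PATTERN_JSON` as the rule’s `EventPattern`, for example with boto3’s `put_rule`. You would then attach a target, such as a Lambda function, with `put_targets`.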

b. Azure Event Grid

- Azure Event Grid helps you build event-based pipelines in Microsoft Azure. It delivers real-time notifications between services. For example, when your database migration finishes, Event Grid can trigger the next step automatically. You can easily route events to functions, workflows, or messaging services. Using Azure Event Grid makes your cloud migration more automated, scalable, and responsive, reducing delays and manual work.

c. GCP Eventarc

- With Google Cloud Eventarc, you can route events between Google Cloud services and custom apps. It helps you build event-driven systems without writing lots of glue code. You can capture events like a Cloud Storage change or database update, then trigger your migration pipeline steps. Eventarc makes it easy to create flexible, reactive pipelines that respond to changes as they happen, making your cloud migration fast and efficient.

12.2 IaC

a. Terraform

- You can use Terraform to write your cloud infrastructure as code. This means you describe what servers, databases, and networks you need in simple text files. Terraform then automatically creates them for you. During cloud migration, Terraform helps you keep environments consistent across old and new clouds. If something changes, you just update the code—Terraform handles the rest. It makes your migrations repeatable and reliable.

b. Pulumi

- Pulumi also lets you define cloud infrastructure as code, but you can use real programming languages like Python, JavaScript, or Go. This gives you more flexibility when building your migration pipeline. You can add logic, loops, and custom workflows right into your code. During cloud migration, Pulumi helps you automate complex setups and ensures your infrastructure behaves exactly how you want it—quickly and accurately.

c. AWS CDK

- The AWS Cloud Development Kit (CDK) is a tool for defining AWS infrastructure using popular programming languages like TypeScript or Python. You write your infrastructure as code, and the CDK turns it into AWS CloudFormation templates. This makes it easy to manage complex cloud setups. During your cloud migration, AWS CDK helps you create, update, and manage AWS resources automatically, ensuring your pipeline is efficient, scalable, and easy to maintain.

12.3 Serverless Functions

a. AWS Lambda

- With AWS Lambda, you can run your code without managing any servers. You just write a function, and it runs automatically when an event happens—like a file upload or database change. This helps your cloud migration pipeline become more automated and cost-efficient, since you only pay for what you use. AWS Lambda is great for tasks like copying data, setting up resources, or sending notifications.
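Here is a minimal sketch of a Python Lambda handler for S3 object-created notifications. It reads the `Records[].s3.bucket.name` and `Records[].s3.object.key` fields from the S3 event notification format, where object keys arrive URL-encoded. The actual copy to the target cloud is left as a hypothetical step.

```python
import json
from urllib.parse import unquote_plus


def handler(event, context):
    """Entry point that Lambda invokes with an S3 event notification."""
    objects = []
    for record in event.get("Records", []):
        bucket = record["s3"]["bucket"]["name"]
        key = unquote_plus(record["s3"]["object"]["key"])  # keys arrive URL-encoded
        objects.append({"bucket": bucket, "key": key})
    # Hypothetical: a real migration step would copy each object to the target cloud here.
    print(json.dumps({"objects_to_migrate": objects}))  # ends up in CloudWatch Logs
    return {"count": len(objects), "objects": objects}
```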

b. Google Cloud Functions

- Google Cloud Functions let you run your code as a response to events in Google Cloud, like a new file in Cloud Storage or a Pub/Sub message. You don’t worry about infrastructure—it scales automatically. You can use it in your cloud migration to move data, update configurations, or connect services in real time. It’s a simple way to build smart, reactive automation steps.

c. Azure Functions

- Azure Functions help you build serverless automation in the Microsoft Azure cloud. When an event happens—like a database update or a webhook—you can trigger a function to perform the next step in your pipeline. You don’t need to manage servers, and it scales with your workload. Azure Functions are perfect for real-time tasks in your cloud migration, like provisioning resources or syncing files.

12.4 Orchestration

a. AWS Step Functions

- With AWS Step Functions, you can control how your automation steps run in the cloud. You design a workflow that connects tasks like migrating files, configuring resources, or sending alerts. It waits for each task to succeed before moving to the next. This gives your cloud migration a clear and organized structure, so everything happens in the right order, with built-in error handling and retry logic.
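The following minimal Python sketch builds an Amazon States Language definition for the migration order described earlier. Each step retries with exponential backoff (`BackoffRate`). If retries are exhausted, a `Catch` sends execution to a rollback state. The function names and the account ID in the ARNs are placeholders.

```python
import json

# Hypothetical Lambda functions for each migration step (placeholder account/region).
ARN = "arn:aws:lambda:us-east-1:123456789012:function:{}"

RETRY = [{"ErrorEquals": ["States.ALL"], "IntervalSeconds": 5, "MaxAttempts": 3, "BackoffRate": 2.0}]
CATCH = [{"ErrorEquals": ["States.ALL"], "Next": "Rollback"}]


def task(function: str, next_state: str) -> dict:
    """A Task state that retries transient failures, then routes to Rollback."""
    return {"Type": "Task", "Resource": ARN.format(function),
            "Retry": RETRY, "Catch": CATCH, "Next": next_state}


definition = {
    "Comment": "Sketch of an ordered migration workflow",
    "StartAt": "ReplicateDatabase",
    "States": {
        "ReplicateDatabase": task("replicate-db", "MigrateContainers"),
        "MigrateContainers": task("migrate-containers", "RunPostMigrationTests"),
        "RunPostMigrationTests": task("post-migration-tests", "Done"),
        "Rollback": {"Type": "Task", "Resource": ARN.format("rollback"), "Next": "MigrationFailed"},
        "MigrationFailed": {"Type": "Fail", "Error": "MigrationRolledBack",
                            "Cause": "A migration step failed after retries"},
        "Done": {"Type": "Succeed"},
    },
}

# The JSON document you would supply as the state machine definition (e.g. via your IaC).
DEFINITION_JSON = json.dumps(definition, indent=2)
```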

b. Argo Workflows

- Argo Workflows is a tool you use on Kubernetes to manage complex jobs step-by-step. You define your workflow as code, and it runs each task one after the other—or in parallel. During a cloud migration, Argo helps you control things like data movement or app deployment. It’s scalable, lightweight, and fits perfectly with event-driven pipelines, especially if you’re using containers and want fine control.

c. Temporal.io

- Temporal.io helps you build durable workflows that can pause, retry, and recover even after failures. It’s great for cloud migrations where you have long-running processes, like setting up a whole server or migrating a big database. You write the logic in normal code, and Temporal handles the orchestration, state, and retries. It helps you keep your pipeline stable, fault-tolerant, and easy to manage without writing complex scripts.

13. Security and Compliance Considerations

13.1 IAM Policies

- You use IAM policies to control who can access what in the cloud. By using the least privilege rule, you give each person or service only the minimum access they really need. This helps protect your cloud migration pipeline from mistakes or attacks. If someone doesn’t need to edit databases, don’t let them. Strong access control makes your system safer and more secure.
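Here is a minimal Python sketch of least privilege in practice. It builds a policy that lets a hypothetical migration job list and read a single prefix of a single S3 bucket. It also adds a small check that flags wildcard actions before a policy is attached. The bucket and prefix names are placeholders. AWS IAM Access Analyzer offers much more thorough policy validation.

```python
import json


def read_only_prefix_policy(bucket: str, prefix: str) -> dict:
    """Least-privilege IAM policy: list and read one prefix of one bucket, nothing else."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "ListMigrationPrefix",
                "Effect": "Allow",
                "Action": "s3:ListBucket",
                "Resource": f"arn:aws:s3:::{bucket}",
                "Condition": {"StringLike": {"s3:prefix": [f"{prefix}/*"]}},
            },
            {
                "Sid": "ReadMigrationObjects",
                "Effect": "Allow",
                "Action": "s3:GetObject",
                "Resource": f"arn:aws:s3:::{bucket}/{prefix}/*",
            },
        ],
    }


def wildcard_actions(policy: dict) -> list[str]:
    """Flag Allow statements that grant '*' or service-wide 'service:*' actions."""
    flagged = []
    for stmt in policy["Statement"]:
        actions = stmt["Action"] if isinstance(stmt["Action"], list) else [stmt["Action"]]
        if stmt.get("Effect") == "Allow":
            flagged += [a for a in actions if a == "*" or a.endswith(":*")]
    return flagged


# The policy document you would attach to the (hypothetical) migration role.
POLICY_JSON = json.dumps(read_only_prefix_policy("legacy-exports", "reports"), indent=2)
```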

13.2 API Keys

- When you use API keys to connect different tools and services, make sure you rotate them regularly so they don’t get misused. Also, you should store them in a secrets manager like AWS Secrets Manager. That way, your keys are encrypted and kept safe from hackers. Treat these keys like passwords—never hard-code them in your scripts. Securing API keys is a must for safe automation.

13.3 Logging

- By turning on detailed logging (like AWS CloudTrail or Azure Activity Logs), you keep track of everything that happens during your cloud migration. Logs show who did what, when, and where. If something goes wrong, these logs help you debug and find the root cause. Logging also helps with audits, proving you followed security rules. It’s like having a security camera for your cloud actions.

13.4 Compliance Checks

- When you work in the cloud, you often have to follow rules set by your company or by government regulations. Tools like HashiCorp Sentinel or Open Policy Agent (OPA) let you express compliance rules as code. You can use them to check that resources follow guidelines, such as keeping ports closed to the public internet or requiring encryption. These checks help keep your cloud work legal, safe, and policy-compliant.
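Sentinel and OPA each have their own policy languages. To show the idea without either tool, here is a plain-Python stand-in that inspects the JSON form of a Terraform plan, produced with `terraform show -json tfplan`. It rejects security groups that open any port other than 80 or 443 to the whole internet. The allowed-port list is an assumption. The check reads only the inline `ingress` blocks of `aws_security_group` resources.

```python
import json

ALLOWED_PUBLIC_PORTS = {80, 443}  # assumption: only HTTP/HTTPS may face the internet


def open_ingress_violations(plan: dict) -> list[str]:
    """List security-group rules in a Terraform plan that expose non-allowed ports to 0.0.0.0/0."""
    violations = []
    for rc in plan.get("resource_changes", []):
        if rc.get("type") != "aws_security_group":
            continue
        after = (rc.get("change") or {}).get("after") or {}  # "after" is null for deletions
        for rule in after.get("ingress") or []:
            if "0.0.0.0/0" not in (rule.get("cidr_blocks") or []):
                continue
            low, high = rule.get("from_port"), rule.get("to_port")
            if not (low == high and low in ALLOWED_PUBLIC_PORTS):
                violations.append(f"{rc['address']}: ports {low}-{high} open to 0.0.0.0/0")
    return violations


def main(plan_path: str) -> int:
    """Exit code for CI: 0 if compliant, 1 if any rule is violated."""
    with open(plan_path) as f:
        problems = open_ingress_violations(json.load(f))
    for problem in problems:
        print("DENY", problem)
    return 1 if problems else 0
```

In CI you would write the plan JSON to a file, call `main` with its path, and fail the job on a non-zero result.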

13.5 Encryption

- Always make sure your data is encrypted—both in transit (while moving) and at rest (when stored). Encryption scrambles your data so only people with the right key can read it. In a cloud migration, this keeps sensitive stuff like passwords or personal info safe from hackers. It’s one of the easiest and most powerful ways to protect your data during and after the move.

14. Best Practices for Implementation

14.1 Start Small

- When you begin your cloud migration, it’s smarter to start small. You can pick just one part—like a database or storage bucket—to move first. This helps you learn the process, fix issues early, and build confidence. Once you’re sure it works well, you can move other parts too. Starting small reduces risk and makes your migration journey easier to handle.

14.2 Use Templates

- You should use IaC templates to set up cloud resources. Templates give you a standard way to build things like networks, databases, or servers. This makes your work more repeatable and saves time. You don’t have to write everything from scratch each time. Plus, templates reduce errors because they follow a tested pattern. With templates, your setup becomes faster, safer, and more consistent.

14.3 CI/CD Integration

- When you connect your IaC code to CI/CD pipelines, every code change can automatically trigger the next step—like testing or deployment. For example, when you push code to Git, your CI/CD tool can check it and update the cloud. This makes your workflow fast, automated, and less manual. It’s how professional teams handle cloud changes safely and efficiently.

14.4 Fail Fast

- Set your system to fail fast. If something goes wrong during the migration, the process should stop right away and roll back to the last safe state before the problem spreads. Define exit strategies and rollback plans in advance so you stay in control. Failing fast helps you recover quickly and keeps the migration safe.
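Here is a minimal Python sketch of fail-fast execution with rollback. Each step is paired with an undo action, a pattern often called compensation. The run stops at the first failure, and completed steps are undone in reverse order. The step names and actions are hypothetical.

```python
from typing import Callable

Step = tuple[str, Callable[[], None], Callable[[], None]]  # (name, do, undo)


def run_fail_fast(steps: list[Step], log: Callable[[str], None] = print) -> bool:
    """Run steps in order; on the first failure, undo completed steps newest-first."""
    completed: list[tuple[str, Callable[[], None]]] = []
    for name, do, undo in steps:
        try:
            do()
        except Exception as exc:
            log(f"{name} failed ({exc}); rolling back")
            for done_name, done_undo in reversed(completed):
                done_undo()
                log(f"undid {done_name}")
            return False
        completed.append((name, undo))
    return True
```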

14.5 Test Events

- Before you run anything for real, test your events in a sandbox, a practice environment where mistakes don’t affect production. You can simulate events such as database changes or VM shutdowns and see how your system reacts. This helps you catch bugs early, and testing events this way makes sure everything works correctly before you go live in production.
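Here is a minimal Python sketch of testing an event handler with simulated events instead of real infrastructure. The fake event mimics the shape of an EventBridge EC2 state-change notification. `start_standby` is a hypothetical action, injected so the test can observe it. For rules, the EventBridge `TestEventPattern` API (also available as `aws events test-event-pattern`) checks a pattern against a sample event without deploying anything.

```python
from typing import Callable, Optional


def make_ec2_state_event(instance_id: str, state: str) -> dict:
    """Fake event shaped like an EventBridge EC2 state-change notification."""
    return {
        "source": "aws.ec2",
        "detail-type": "EC2 Instance State-change Notification",
        "detail": {"instance-id": instance_id, "state": state},
    }


def on_vm_state_change(event: dict, start_standby: Callable[[str], None]) -> Optional[str]:
    """Start a standby VM when an instance stops; return the instance handled, if any."""
    detail = event.get("detail", {})
    if detail.get("state") != "stopped":
        return None
    start_standby(detail["instance-id"])
    return detail["instance-id"]


def test_stopped_vm_starts_standby() -> None:
    started: list[str] = []
    assert on_vm_state_change(make_ec2_state_event("i-0abc", "stopped"), started.append) == "i-0abc"
    assert started == ["i-0abc"]


def test_running_vm_is_ignored() -> None:
    started: list[str] = []
    assert on_vm_state_change(make_ec2_state_event("i-0abc", "running"), started.append) is None
    assert started == []
```

A test runner such as pytest discovers the `test_` functions automatically. You can also publish the same simulated events to a sandbox event bus to exercise the deployed wiring.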

15. Future Trends in Cloud Migration Automation

15.1 AI-Driven Pipelines

- In the future, you’ll see AI-driven pipelines that use machine learning to predict what should happen next during migration. The system can learn from past patterns and suggest or even take actions automatically. This means less guesswork and smarter decisions. AI helps you save time, avoid mistakes, and handle complex migrations with better speed and accuracy.

15.2 GitOps for IaC

- With GitOps, you use Git as the single source of truth for your cloud setup. Every time you push a change in your IaC code, it triggers automatic actions—like provisioning or updates. You won’t need to manually deploy things. This makes your migration faster, safer, and easier to track. You get full control over what changes and when, right from your Git repository.

15.3 Event Mesh

- An Event Mesh is a system that can send and receive events across multiple clouds—like AWS, Azure, and GCP—at the same time. It lets different services talk to each other, no matter where they are hosted. This makes your migrations more flexible and helps you build multi-cloud pipelines. It also improves real-time coordination between different platforms.

15.4 Composable Pipelines

- In the future, you’ll be able to build composable pipelines using building blocks like IaC tools, serverless functions, and APIs. You can mix and match these tools to create the exact pipeline you need. This gives you flexibility and lets you scale or change your setup easily. Think of it like using LEGO blocks to design your cloud migration your way.

15.5 Self-Healing Migrations

- Imagine if your system could fix itself during migration. That’s what self-healing migrations are. If something goes wrong—like a server crash—the system can detect it and recover automatically. It might restart a service, recreate a resource, or alert you. This makes your migrations more reliable and resilient, with less need for you to fix problems manually.

Conclusion

- In the rapidly evolving world of cloud technology, your greatest advantage lies in embracing event-driven automation. This approach is not merely a shift in tools; it is a shift in mindset. By combining powerful APIs with Infrastructure as Code, you create a migration pipeline that is reactive, resilient, secure, scalable, and intelligently automated. Instead of relying on outdated, manual processes, you respond to changes in real time, reduce downtime, and gain real operational agility.

- Whether you are orchestrating a full-scale cloud exit or refining your multi-cloud strategy, event-driven pipelines allow you to stay ahead—technically and strategically. You aren’t just moving workloads; you are redefining how modern infrastructure should be managed. With the right architecture, you lead your team into a future where efficiency, reliability, and innovation are at the core of every migration.